Spark stream - Kafka

- 1. Spark Streaming Kafka in Action. Dori Waldman, Big Data Lead

- 2. Agenda
  - Spark Streaming with Kafka: receiver based
  - Spark Streaming with Kafka: direct (no receiver)
  - Stateful Spark Streaming (demo)

- 3. What we do … Ad exchange: real-time trading (150 ms average response time) and campaign optimization over ad spaces. Tech stack:

- 5. Use Case
  - Tens of millions of transactions per minute (and growing …)
  - ~15 TB daily (24/7, 99.99999 resiliency)
  - Data aggregation (#Video Success Rate):
    - Real-time aggregation and DB update
    - Raw data persistency as recovery backup
    - Retrospective aggregation updates (recalculate)
  - Analytic data:
    - Persist incoming events (raw data persistency)
    - Real-time analytics and ML algorithm (inside)

- 7. Receiver Approach: "KafkaUtils.createStream"
  - Based on the high-level Kafka consumer
  - The receiver stores Kafka messages in executors/workers
  - Write-Ahead Logs (WAL) to recover data on failures: recommended
  - ZK offsets are updated by Spark
  - Data duplication (WAL/Kafka)

- 8. Receiver Approach: Code (Basic / Advanced). Spark partition != Kafka partition. val kafkaStream = { …

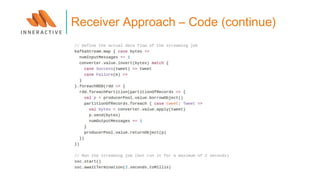

- 9. Receiver Approach: Code (continued)

- 10. Architecture 1.0 (diagram: events stream, consumers, raw data, aggregation, Spark batch, Spark stream)

- 11. Architecture
  - Pros:
    - Worked just fine with a single MySQL server
    - Simplicity: legacy code stays the same
    - Real-time DB updates
    - Partial aggregation was done in Spark; the DB was updated via "Insert On Duplicate Key Update"
  - Cons:
    - MySQL limitations (MySQL sharding is an issue, Cassandra is optimal)
    - Raw data on S3 (in standard formats) is not trivial when using Spark

- 12. Monitoring

- 14. Architecture 2.0 (diagram: events stream, consumers, raw data, aggregation)
  - Starts from the largest "offset" by default
  - Columnar format (FS, not DB)
  - Batch update of C* every few minutes (overwrite)

- 15. Architecture
  - Pros:
    - Parquet is ideal for Spark analytics
    - Backup data requires less disk space
  - Cons:
    - DB is not updated in real time (streaming); we could combine it with MySQL for the current hour...
  - What has been changed:
    - C* uses counters for "sum/update", which is a "bad" practice (no equivalent of MySQL's "insert on duplicate key")
    - Parquet conversion is a heavy job; streaming hourly conversions (falling back to batch in case of failure) seems to be a better approach

- 16. Direct Approach: "KafkaUtils.createDirectStream"
  - Based on the Kafka simple consumer
  - Queries Kafka for the latest offsets in each topic+partition and defines the offset range for each batch
  - No need to create multiple input Kafka streams and consolidate them
  - Spark creates an RDD partition for each Kafka partition, so data is consumed in parallel
  - ZK offsets are not updated by Spark; offsets are tracked by Spark within its checkpoints (might not recover)
  - No data duplication (no WAL)
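
The offset-range idea on this slide can be modelled without Spark or Kafka. The following is a minimal plain-Scala sketch, not Spark's implementation: `TopicPartition`, `OffsetRange` and `planBatch` are hypothetical names chosen for illustration, and starting unknown partitions at the latest offset is an assumption that mirrors the "largest offset by default" note on the Architecture 2.0 slide.

```scala
// Plain-Scala model of how a direct stream could plan one batch.
// All names here are illustrative; this is not the Spark API.
object OffsetPlanning {
  // Hypothetical stand-in for a topic+partition pair.
  final case class TopicPartition(topic: String, partition: Int)

  // Half-open range [fromOffset, untilOffset) to read in one batch.
  final case class OffsetRange(tp: TopicPartition, fromOffset: Long, untilOffset: Long) {
    def count: Long = untilOffset - fromOffset
  }

  // For every partition, read from the last consumed offset up to the latest
  // offset reported by the broker. One range per partition means one task per
  // partition, which is what allows parallel consumption.
  def planBatch(
      consumed: Map[TopicPartition, Long],
      latest: Map[TopicPartition, Long]): Seq[OffsetRange] =
    latest.toSeq
      .sortBy { case (tp, _) => (tp.topic, tp.partition) }
      .map { case (tp, until) =>
        // Assumption: a partition never seen before starts at the latest offset.
        val from = consumed.getOrElse(tp, until)
        OffsetRange(tp, from, math.max(from, until))
      }
}
```

Because each range is fully determined by two offsets, a failed batch can be re-read from Kafka itself, which is why this approach does not need a write-ahead log.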

- 17. Checkpoint...
  - S3 / HDFS
  - Save metadata: needed for recovery from driver failures
  - RDD for stateful transformations (RDDs of previous batches)

- 18. Transfer data from driver to workers
  - Broadcast: keep a read-only variable cached on each machine rather than shipping a copy of it with tasks
  - Accumulator: used to implement counters/sums; workers can only add to an accumulator, the driver can read its value (you can extend AccumulatorParam[Vector])
  - Static (Scala Object)
  - Context (rdd): get data after recovery

- 19. Direct Approach - Code

- 20. In house code

      def start(sparkConfig: SparkConfiguration, decoder: String): Unit = {
        val ssc = StreamingContext.getOrCreate(
          sparkCheckpointDirectory(sparkConfig),
          () => functionToCreateContext(decoder, sparkConfig))
        sys.ShutdownHookThread {
          ssc.stop(stopSparkContext = true, stopGracefully = true)
        }
        ssc.start()
        ssc.awaitTermination()
      }

- 21. In house code (continued)

      def functionToCreateContext(decoder: String, sparkConfig: SparkConfiguration): StreamingContext = {
        val sparkConf = new SparkConf().setMaster(sparkClusterHost).setAppName(sparkConfig.jobName)
        sparkConf.set(S3_KEY, sparkConfig.awsKey)
        sparkConf.set(S3_CREDS, sparkConfig.awsSecret)
        sparkConf.set(PARQUET_OUTPUT_DIRECTORY, sparkConfig.parquetOutputDirectory)
        val sparkContext = SparkContext.getOrCreate(sparkConf)
        // Hadoop S3 writer optimization
        sparkContext.hadoopConfiguration.set("spark.sql.parquet.output.committer.class",
          "org.apache.spark.sql.parquet.DirectParquetOutputCommitter")
        // Like Avro, Parquet supports schema evolution. This work happens in the driver
        // and takes a relatively long time
        sparkContext.hadoopConfiguration.set("parquet.enable.summary-metadata", "false")
        sparkContext.hadoopConfiguration.setInt("parquet.metadata.read.parallelism", 100)
        val ssc = new StreamingContext(sparkContext, Seconds(sparkConfig.batchTime))
        ssc.checkpoint(sparkCheckpointDirectory(sparkConfig))

- 22. In house code (continued)

        // This block is evaluated only if the checkpoint folder does not exist
        val streams = sparkConfig.kafkaConfig.streams map { c =>
          val topic = c.topic.split(",").toSet
          KafkaUtils.createDirectStream[String, String, StringDecoder, JsonDecoder](ssc, c.kafkaParams, topic)
        }
        streams.foreach { dsStream => {
          dsStream.foreachRDD { rdd =>
            val offsetRanges = rdd.asInstanceOf[HasOffsetRanges].offsetRanges
            for (o <- offsetRanges) {
              logInfo(s"Offset on the driver: ${offsetRanges.mkString}")
            }
            val sqlContext = SQLContext.getOrCreate(rdd.sparkContext)
            sqlContext.setConf("spark.sql.parquet.compression.codec", "snappy")
            // Data recovery after crash
            val s3Accesskey = rdd.context.getConf.get(S3_KEY)
            val s3SecretKey = rdd.context.getConf.get(S3_CREDS)
            val outputDirectory = rdd.context.getConf.get(PARQUET_OUTPUT_DIRECTORY)

- 23. In house code (continued)

            val data = sqlContext.read.json(rdd.map(_._2))
            val carpetData = data.count()
            if (carpetData > 0) {
              // coalesce(1): Data transfer optimization during shuffle
              data.coalesce(1).write.mode(SaveMode.Append).partitionBy("day", "hour").parquet("s3a://...")
              // In case of S3Exception will not continue to update ZK.
              // (the loop variable from the logging loop is out of scope here, so iterate again)
              offsetRanges.foreach { o =>
                zk.updateNode(o.topic, o.partition.toString, kafkaConsumerGroup, o.untilOffset.toString.getBytes)
              }
            }
          }
        }
        }
        ssc
      }

- 24. Config...
  - SaveMode (Append/Overwrite) is used to handle existing data (add a new file / overwrite)
  - Spark Streaming does not update ZK (http://curator.apache.org/)
  - Spark Streaming saves offsets in its checkpoint folder. Once it crashes, it will continue from the last offset
  - You can avoid using the checkpoint for offsets and manage them manually
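
Managing offsets manually, as the in-house code does with ZooKeeper, comes down to one ordering rule: write the batch first, commit offsets only if the write succeeded. Below is a minimal plain-Scala sketch of that rule. `InMemoryOffsetStore` and `processBatch` are hypothetical names, and the in-memory store is only a stand-in for ZooKeeper.

```scala
import scala.util.{Failure, Success, Try}

// Illustrative model of "commit offsets only after a successful write".
object ManualOffsets {
  final case class OffsetRange(topic: String, partition: Int, fromOffset: Long, untilOffset: Long)

  // Hypothetical offset store; in the slides this role is played by ZooKeeper.
  final class InMemoryOffsetStore {
    private var offsets = Map.empty[(String, Int), Long]
    def commit(r: OffsetRange): Unit =
      offsets = offsets.updated((r.topic, r.partition), r.untilOffset)
    def committed(topic: String, partition: Int): Option[Long] =
      offsets.get((topic, partition))
  }

  // Returns true if the batch was written and its offsets were committed.
  // On a failed write nothing is committed, so the same ranges are read again
  // after a restart (at-least-once: the sink may see duplicates).
  def processBatch(
      ranges: Seq[OffsetRange],
      write: () => Unit,
      store: InMemoryOffsetStore): Boolean =
    Try(write()) match {
      case Success(_) =>
        ranges.foreach(store.commit)
        true
      case Failure(_) =>
        false
    }
}
```

The trade-off of this design is that a crash between the write and the commit replays the batch, so the sink has to tolerate duplicates, for example by overwriting rather than appending.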

- 25. Batch Code

      val sparkConf = new SparkConf().setMaster("local[4]").setAppName("demo")
      val sparkContext = SparkContext.getOrCreate(sparkConf)
      val sqlContext = SQLContext.getOrCreate(sparkContext)
      val data = sqlContext.read.json(path)
      data.coalesce(1).write.mode(SaveMode.Overwrite).partitionBy("table", "day").parquet(outputFolder)

- 26. Back Pressure
  - Built-in support for backpressure since Spark 1.5 (disabled by default)
  - Receiver: spark.streaming.receiver.maxRate
  - Direct: spark.streaming.kafka.maxRatePerPartition
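
To get a feel for what a per-partition rate limit means for batch size, the arithmetic can be sketched in plain Scala. This assumes the setting is expressed in records per second per partition; the helper names and the numbers in the usage note are hypothetical.

```scala
// Illustrative arithmetic for a per-partition rate cap; not Spark code.
object RateLimit {
  // Upper bound on records in one batch:
  // per-partition rate (records/second) x batch interval (seconds) x partitions.
  def maxRecordsPerBatch(maxRatePerPartition: Long, batchSeconds: Long, partitions: Int): Long =
    maxRatePerPartition * batchSeconds * partitions

  // Clamp the end of one partition's offset range so that it respects the cap.
  def clampUntil(
      fromOffset: Long,
      latestOffset: Long,
      maxRatePerPartition: Long,
      batchSeconds: Long): Long =
    math.min(latestOffset, fromOffset + maxRatePerPartition * batchSeconds)
}
```

For example, with a cap of 1000 records per second, a 10 second batch and 8 partitions, `maxRecordsPerBatch(1000, 10, 8)` gives 80000 records, which bounds how much a restarted job can pull in its first batch after a long outage.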

- 28. Architecture: other Spark options. We can use an hourly window, do the aggregation in Spark and overwrite the C* row in real time …

- 30. Architecture 3.0 (diagram: events stream, consumers, raw data, aggregation)
  - Analytic data uses Spark Streaming to transfer Kafka raw data to Parquet. A regular Kafka consumer saves a raw data backup in S3 (in case of streaming failure, a Spark batch job converts it to Parquet)
  - Aggregation data uses stateful Spark Streaming (mapWithState) to update C*. In case of streaming failure, a Spark batch job updates C* from Parquet
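
The stateful aggregation step can be pictured as a per-key update function that combines each new value with the state kept from earlier batches. The sketch below is plain Scala in the spirit of mapWithState, not the Spark API; `update` and `applyBatch` are hypothetical names, and string keys with `Long` totals are assumptions made for brevity.

```scala
// Illustrative model of per-key state carried across micro-batches.
object RunningTotals {
  // Combine the new value for a key with the state from earlier batches.
  // The result is both the new state and the full row to write to the DB.
  def update(newValue: Long, state: Option[Long]): Long =
    state.getOrElse(0L) + newValue

  // Apply one micro-batch of (key, value) pairs to the state map.
  // Returns the new state and only the rows that changed in this batch.
  def applyBatch(
      state: Map[String, Long],
      batch: Seq[(String, Long)]): (Map[String, Long], Map[String, Long]) = {
    val next = batch.foldLeft(state) { case (acc, (k, v)) =>
      acc.updated(k, update(v, acc.get(k)))
    }
    val changed = next.filter { case (k, _) => batch.exists(_._1 == k) }
    (next, changed)
  }
}
```

Since every changed row carries the full running total, the database write can be an overwrite rather than a counter increment, so replaying a batch after a failure does not double count.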

- 31. Architecture
  - Pros:
    - Real-time DB updates
  - Cons:
    - Too many components, relatively expensive (compared to phase 1)
    - According to the documentation, upgrading Spark has an issue with checkpoints

- 32. What's next … FiloDB? (probably not, lots of nodes). Parquet performance based on C*. http://www.slideshare.net/planetcassandra/tuplejump-breakthrough-olap-performance-on-cassandra-and-spark? ref=http://www.planetcassandra.org/blog/introducing-filodb/

- 33. Questions?

- 34. In house Code

      val ssc = new StreamingContext(sparkConfig.sparkConf, Seconds(batchTime))
      val kafkaStreams = (1 to sparkConfig.workers) map { i =>
        new FixedKafkaInputDStream[String, AggregationEvent, StringDecoder, SerializedDecoder[AggregationEvent]](
          ssc, kafkaConfiguration.kafkaMapParams, topicMap,
          StorageLevel.MEMORY_ONLY_SER).map(_._2) // for write ahead log
      }
      val unifiedStream = ssc.union(kafkaStreams) // manage all streams as one
      val mapped = unifiedStream flatMap { event =>
        // convert event to aggregation object which contains
        // key ("advertiserId", "countryId") and values ("click", "impression")
        Aggregations.getEventAggregationsKeysAndValues(Option(event))
      }
      val reduced = mapped.reduceByKey {
        // per aggregation type we created a "+" method that
        // describes how to do the aggregation
        _ + _
      }

  - Example: K1 = (advertiserId = 5, countryId = 8), V1 = (clicks = 2, impression = 17)
  - Before reduceByKey: k1(e), v1(e); k1(e), v2(e); k2(e), v3(e)
  - After reduceByKey: k1(e), v1+v2; k2(e), v3(e)
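
The reduceByKey step relies on the value type defining its own "+" method. A minimal sketch of that idea on plain Scala collections follows. `AggKey`, `AggValue` and the local `reduceByKey` helper are hypothetical stand-ins for the in-house aggregation classes, which the slides do not show; only K1 = (5, 8) and V1 = (2, 17) come from the slide.

```scala
// Illustrative local equivalent of reduceByKey(_ + _); not the in-house code.
object AggregationSketch {
  final case class AggKey(advertiserId: Int, countryId: Int)

  final case class AggValue(clicks: Long, impressions: Long) {
    // The "+" method describes how two partial aggregates are merged.
    def +(other: AggValue): AggValue =
      AggValue(clicks + other.clicks, impressions + other.impressions)
  }

  // Group pairs by key, then merge the values of each key with "+".
  def reduceByKey(pairs: Seq[(AggKey, AggValue)]): Map[AggKey, AggValue] =
    pairs.groupBy(_._1).map { case (k, kvs) => k -> kvs.map(_._2).reduce(_ + _) }
}
```

Because "+" here is associative and commutative, partial results can be merged in any order, which is the property reduceByKey needs to combine values within partitions before shuffling.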
