SQL or NoSQL, that is the question!

- 1. SQL or NoSQL, that is the question! October 2011 Andraž Tori, CTO at Zemanta @andraz [email protected]

- 2. Answering - Why NoSQL? - What is NoSQL? - How does it work?

- 3. SQL is awesome! - Structured Query Language - ACID Atomicity, Consistency, Isolation, Durability - Predictable - Schema - Based on rational algebra - Standardized

- 4. No, really, it's awesome! - Hardened - Free and commercial choices - MySQL, PostgreSQL, Oracle, DB2, MS SQL... - Commercial support - Tooling - Everyone knows it - It's mature!

- 6. So this is the end, right?

- 7. Why the heck would someone not want SQL?

- 8. Why not to use SQL? - Clueless self-thought programmers who use text files - NIH - Not Invented Here syndrome. And I want to design my own CPU! - Because it's hard! - I can't afford it - “This app was first ported from Clipper to DBase”

- 10. Let's say ...

- 11. You are a big tech company, located on west coast of USA

- 13. You are... - big international web company based in San Francisco - 5 data centers around the world - Petabytes of data behind the service - A day of downtown costs you at least millions - And it's not question of when, but if

- 14. You want to - keep the service up no matter what - have it fast - deal with humongous amounts of data - enable your engineers to make great stuff

- 15. You are...

- 16. Some interesting constraints Amazon claim that just an extra one tenth of a second on their response times will cost them 1% in sales.

- 17. So... - Some pretty big and important problems - And brightest engineers in the world - Who loooove to build stuff - Sooner or later even Oracle RAC cluster is not enough

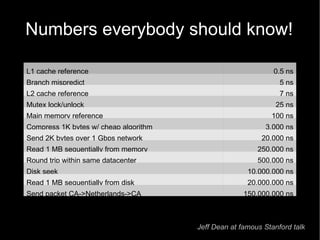

- 18. Numbers everybody should know! Jeff Dean at famous Stanford talk L1 cache reference 0.5 ns Branch mispredict 5 ns L2 cache reference 7 ns Mutex lock/unlock 25 ns Main memory reference 100 ns Compress 1K bytes w/ cheap algorithm 3,000 ns Send 2K bytes over 1 Gbps network 20,000 ns Read 1 MB sequentially from memory 250,000 ns Round trip within same datacenter 500,000 ns Disk seek 10,000,000 ns Read 1 MB sequentially from disk 20,000,000 ns Send packet CA->Netherlands->CA 150,000,000 ns

- 19. Facebook circa 2009 - from 200GB (March 2008) to 4 TB of compressed new data added per day - 135TB of compressed data scanned per day - 7500+ Database jobs on production cluster per day - 80K compute hours per day - And that's just for data warehousing/analysis - plus thousands of MySQL machines acting as Key/Value stores

- 20. Big Data - Internet generates huge amounts of data - First encountered by big guys AltaVista, Google, Amazon … - Need to be handled - Classical storage solutions just don't fit/behave/scale anymore

- 21. So smart guys create solutions to these internal challenges

- 22. And then? - Papers: The Google File System (Google, 2003) MapReduce: Simplified Data Processing on Large Clusters (Google, 2004) Bigtable: A Distributed Storage System for Structured Data (Google, 2006) Amazon Dynamo (Amazon, 2007) - Projects (all open source): Hadoop (coming out of Nutch, Yahoo, 2008) Memcached (LiveJournal, 2003) Voldemort (Linkedin, 2008) Hive (Facebook, 2008) Cassandra (Facebook, 2008) MongoDB (2007) Redis, Tokyo Cabinet , CouchDB, Riak...

- 23. Four papers to rule them all Sanjay Ghemawat, Howard Gobioff, and Shun-Tak Leung, “ The Google File System ”, 19th ACM Symposium on Operating Systems Principles, Lake George, NY, October, 2003. Jeffrey Dean and Sanjay Ghemawat, “ MapReduce: Simplified Data Processing on Large Clusters ”, OSDI'04: Sixth Symposium on Operating System Design and Implementation, San Francisco, CA, December, 2004. Fay Chang, Jeffrey Dean, Sanjay Ghemawat, Wilson C. Hsieh, Deborah A. Wallach, Mike Burrows, Tushar Chandra, Andrew Fikes, and Robert E. Gruber, “ Bigtable: A Distributed Storage System for Structured Data ”, OSDI'06: Seventh Symposium on Operating System Design and Implementation, Seattle, WA, November, 2006. Giuseppe DeCandia, Deniz Hastorun, Madan Jampani, Gunavardhan Kakulapati, Avinash Lakshman, Alex Pilchin, Swami Sivasubramanian, Peter Vosshall and Werner Vogels, “ Dynamo: Amazon's Highly Available Key-Value Store ”, in the Proceedings of the 21st ACM Symposium on Operating Systems Principles, Stevenson, WA, October 2007.

- 24. Solving problems of big guys?

- 25. Total Sites Across All Domains August 1995 - October 2011, NetCraft

- 26. Yesterday's problem of biggest guys Is today's problem of garden variety startup

- 28. And so we end up with Cambrian explosion

- 31. These solutions don't have much in common, Except...

- 32. They definitely aren't SQL

- 33. Not Only SQL

- 34. So what are these beasts?

- 35. That's a hard question... - There is no standard - This is a new technology - new research - survival of the fittest - experimenting - They obviously fulfill some new needs - but we don't yet know which are real and which superficial - Most are extremely use-case specific

- 36. Example use-cases - Shopping cart on Amazon - PageRank calculation at Google - Streams stuff at Twitter - Extreme K/V store at bit.ly - Analytics at Facebook

- 37. At the core, it's a different set of trade-offs and operational constraints

- 38. Trade-offs and operational constraints - Consistent? Eventually consistent? - Highly available? Distributed across continents? - Fault tolerant? Partition tolerant? Tolerant to consumer grade hardware? - Distributed? Across 10, 100, 1000, 10000 machines?

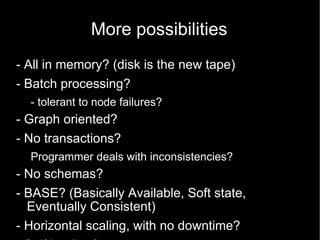

- 39. More possibilities - All in memory? (disk is the new tape) - Batch processing? - tolerant to node failures? - Graph oriented? - No transactions? Programmer deals with inconsistencies? - No schemas? - BASE? (Basically Available, Soft state, Eventually Consistent) - Horizontal scaling, with no downtime? - Self healing?

- 40. A consistent topic: CAP Theorem

- 41. CAP theorem (Eric Brewer, 2000, Symposium on Principles of Distributed Computing) - CAP = Consistency, Availability, Partition tolerance - Pick any two! - Distributed systems have to sacrifice something to be fast - Usually you drop: - consistency – all clients see the same data - availability – the service returns something - Sometimes can even tune the trade-offs!

- 42. "There is no free lunch with distributed data” – HP

- 43. Eventual Consistency - Different clients can read the data and write it, no locking or maybe partitioned nodes - What we know is that given enough time data is synchronized to the same state across all replicas

- 44. But this is horrible!

- 45. … you already are eventually consistent! :) If your database stores how many vases you have in your shop...

- 46. Eventual consistency - Conflict resolution: - Read time - Write time - Asynchronous - Possibilities: - client timestamps - vector clocks, when writing say what your original data version was - Conflict resolution can be server or client based

- 47. There are different kinds of consistencies - Read-your-writes consistency - Monotonic write / monotonic read consistency - Session consistency - Casual consistency

- 48. There's not even a proper taxonomy of features different NoSQL solutions offer

- 49. And this presentation is too short to present whole breadth of possibilities

- 50. Usual taxonomy of NoSQL Usual taxonomy: - Key/Value stores - Column stores - Document stores - Graph stores

- 51. Other attributes - In-memory / on-disk - Latency / throughput (batch processing) - Consistency / Availability

- 52. Key/Value stores - a.k.a. Distributed hashtables! - Amazon Dynamo - Redis, Voldemort, Cassandra, Tokyo Cabinet, Riak

- 53. Document databases - Similar to Key/Value, but value is a document - JSON or something similar, flexible schema - CouchDB, MongoDB, SimpleDB... - May support indexing or not - Usually support more complex queries

- 54. Column stores - one key, multiple attributes - hybrid row/column - BigTable, Hbase, Cassandra, Hypertable

- 55. Graph Databases - Neo4J, Maestro OpenLink, InfiniteGraph, HyperGraphDB, AllegroGraph - Whole semantic web shebang!

- 56. To make the situation even more confusing... - Fast pace of development - In-memory stores gain on-disk support overnight - Indexing capabilities are added

- 57. Two examples - Cassandra - Hadoop - Hive - Mahout

- 59. Cassandra - BigTable + Dynamo - P2P, horizontally scalable - No SPOF - Eventually consistent - Tunable tradeoffs between consistency and availability - number of replicas, writes, reads

- 60. Cassandra – writes - No reads - No seeks - Log oriented writes - Fast, atomic inside ColumnFamily - Always available for writing

- 61. Cassandra - Billions of rows - Mysql: ~ 300ms write ~ 350ms read - Cassandra: ~ 0.12ms write ~ 15ms read

- 62. Not enough time to go into data model...

- 63. Cassandra In production at: Facebook, Digg, Rackspace, Reddit, Cloudkick, Twitter - largest production cluster over 150TB and over 150 machines Other stuff: - pluggable partitioner (Random/OrderPerserving) - rack aware, datacenter aware

- 64. Experiences? - Works pretty good at Zemanta - user preferences store - extending to new use-cases - Digg had some problems - Don't necessary use it as primary store - Not very easy to back-up, situation is improving

- 65. Cassandra - queries - Column by key - Slices (of columns/supercolumns) - Range queries (when using OrderPerservingPartitioner to be efficient)

- 67. Hadoop - GFS + MapReduce - Fault tolerant - (massively) distributed - massive datasets - batch-processing (non real-time responses) - Written in Java - A whole ecosystem

- 68. Hadoop: Why? (Owen O’Malley, Yahoo Inc!, [email protected]) • Need to process 100TB datasets with multi-day jobs • On 1 node: – scanning @ 50MB/s = 23 days – MTBF = 3 years • On 1000 node cluster: – scanning @ 50MB/s = 33 min – MTBF = 1 day • Need framework for distribution – Efficient, reliable, easy to use

- 69. Hadoop @ Facebook - Use Hadoop to store copies of internal log and dimension data sources and use it as a source for reporting/analytics and machine learning. - Currently 2 major clusters: A 1100-machine cluster with 8800 cores and about 12 PB raw storage. A 300-machine cluster with 2400 cores and about 3 PB raw storage. Each (commodity) node has 8 cores and 12 TB of storage. - Heavy users of both streaming as well as the Java apis. They built a higher level data warehousing framework using these features called Hive (see the http://hadoop.apache.org/hive/).

- 70. But also at smaller startups - Zemanta: 2 to 4 node cluster, 7TB - log processing - Hulu 13 nodes - log storage and analysis - GumGum 9 nodes - image and advertising analytics - Universities: Cornell – Generating web graphs (100 nodes) - It's almost everywhere

- 71. Hadoop Architecture - HDFS - HDFS provides a single distributed filesystem - Managed by a NameNode (SPOF) - Append-only filesystem - distributed by blocks (for example 64MB) - It's like one big RAID over all the machines - tunable replication - Rack aware, datacenter aware - It just works, really!

- 74. Hadoop Architecture - MapReduce - Based on an old concept from Lisp - Generally it's not just map-reduce, it's: Map -> shuffle (sort) -> merge-> reduce - Jobs can be partitioned - Jobs can be run and be restarted independently (parallelization, fault tolerance) - Aware of data-locality of HDFS - Speculative execution (toward the end, of tasks machines that stall)

- 75. Infamous word counting example - “One and one is two and one is three” - Two mappers: “One and one is”, “two and one is three” - Pretty “stupid” mappers, just output word and “1” Otuput Mapper1: One 1 And 1 One 1 Is 1 Output Mapper2: Two 1 And 1 One 1 Is 1 Three 1 And 1 And 1 Is 1 Is 1 One 1 One 1 One 1 Two 1 Three 1 And 2 Is 2 One 3 Two 1 Three 1 Sorter Reducer

- 76. Important to know - Mappers can output more than one output per input (or none) - Bucketing for reducers happens immediately after mapping output - Every reducer gets all input records for certain “key” - All parts are highly pluggable – readers, mapping, sorting, reducing … it's java

- 77. Hadoop - You can write your jobs in Java - You get used to thinking inside the constraints - You can use “Hadoop Streaming” to write jobs in any language - It's great not to have to think about the machines, but you can “peep” if you want to see how your job is doing.

- 78. Now, this is a bit wonky, right? - Word counting is a really bad example - However it's like “Hello world”, so get used to it - When you get to real problems it gets much more logical

- 79. Benchmarks, 2009 This doesn't help me much, but... Bytes Nodes Maps Reduces Replication Time 500000000000 1406 8000 2600 1 59 seconds 1000000000000 1460 8000 2700 1 62 seconds 100000000000000 3452 190000 10000 2 173 minutes 1000000000000000 3658 80000 20000 2 975 minutes

- 80. Hive

- 81. Hive - A system built on top of Hive that mimics SQL - Hive Query Language - Built at Facebook, since writing MapReduce jobs in Java is tedious basic tasks - Every operation is one or multiple full index scans - Bunch of heuristics, query optimization

- 82. Hive – Why we love it at Zemanta - Don't need to transform your data on “load time” - Just copy your files to HDFS (preferably compressed and chunked) - Write your own deserializer (50 lines in Java) - And use your file as a table - Plus custom User Defined Functions

- 84. Mahout - Bunch of algorithms implemented Collaborative Filtering User and Item based recommenders K-Means, Fuzzy K-Means clustering Mean Shift clustering Dirichlet process clustering Latent Dirichlet Allocation Singular value decomposition Parallel Frequent Pattern mining Complementary Naive Bayes classifier Random forest decision tree based classifier High performance java collections (previously colt collections) A vibrant community and many more cool stuff to come by this summer thanks to Google summer of code

- 85. General notes

- 86. Some observations - Non-fixed schemas are a blessing when you have to adapt constantly - that doesn't mean you should not have documentation and be thoughtful! - Denormalization is the way to scale - sorry guys - Clients get to manage things more precisely, but also have to manage things more precisely

- 87. Some internals, “fun” tricks - Bloom filter: Is data on this node? Maybe / Definitely not Maybe -> let's go to disk to check out - Vector clocks - Consistent hashing

- 88. Consistent hashing - key -> hash -> “coordinator node” - depending on replication the key is then stored in sequential N nodes - When new node gets added to the ring replication is relatively easy

- 89. And if you don't take anything else from this presentation...

- 93. But there's more to it

- 94. This is the edge today - Tons of interesting research waiting to be made - Ability to leverage these solutions to process terabytes of data cheaply - Ability to seize new opportunities - Innovation is the only thing keeping you/us ahead - Are you preparing yourself for tomorrow's technologies? Tomorrow's research?

- 95. Images http://www.flickr.com/photos/60861613@N00/3526232773/sizes/m/in/photostream/ http://www.zazzle.com/sql_awesome_me_tshirt-235011737217980907 http://geekandpoke.typepad.com/geekandpoke/2011/01/nosql.html http://hadoop.apache.org/common/docs/current/hdfs_design.html http://www.flickr.com/photos/unitednationsdevelopmentprogramme/4273890959/

Editor's Notes

- #2: Kaj sploh je Silicijeva Dolina? Zakaj se to sploh sprašujemo? Mislijo politiki dobesedno? Povedal bom o pozitivnih straneh. V bistvu sem hotel povedati drugo zgodbo