Extending ETSI VNF descriptors and OpenVIM to support Unikernels

- 1. Extending ETSI VNF descriptors and OpenVIM (Rel. TWO) to support Unikernels
  - Paolo Lungaroni (1), Claudio Pisa (2), Stefano Salsano (2,3), Giuseppe Siracusano (3), Francesco Lombardo (2)
  - (1) Consortium GARR, Italy; (2) CNIT, Italy; (3) Univ. of Rome Tor Vergata, Italy
  - Stefano Salsano, project coordinator of the Superfluidity project, Univ. of Rome Tor Vergata, Italy / CNIT, Italy
  - ETSI OSM-Mid-Release#3 meeting, July 13th, Sophia Antipolis, France
  - Superfluidity: a super-fluid, cloud-native, converged edge system

- 2. Outline
  - Superfluidity project goals and approach
  - Unikernels and their orchestration using VIMs (Virtual Infrastructure Managers)
  - Unikernels orchestration over OpenStack, OpenVIM and Nomad
    - Performance evaluation
  - Extending ETSI NFV models and OpenVIM to support Unikernels orchestration
    - Live demo
  - Details of OpenVIM extensions for Unikernels support (proposal for a patch…)

- 3. Superfluidity project
  - Superfluidity goals
    - Instantiate network functions and services on-the-fly
    - Run them anywhere in the network (core, aggregation, edge), across heterogeneous infrastructure environments (computing and networking), taking advantage of specific hardware features, such as high performance accelerators, when available
  - Superfluidity approach
    - Decomposition of network components and services into elementary and reusable primitives (“Reusable Functional Blocks – RFBs”)
    - Platform-independent abstractions, permitting reuse of network functions across heterogeneous hardware platforms

- 4. The Superfluidity vision
  - Figure: from current NFV technology to superfluid NFV technology. Time scale axis: days, hours, minutes, seconds, milliseconds. Granularity axis: big VMs, small components, micro operations.
  - From VNF (Virtual Network Functions) to RFB (Reusable Functional Blocks)
  - Heterogeneous RFB execution environments: hypervisors, modular routers, packet processors, …

- 6. Unikernels: a tool for superfluid virtualization
  - Containers (e.g. Docker)
    - Lightweight (not enough?)
    - Poor isolation
  - Hypervisors (traditional VMs), e.g. XEN, KVM, VMware…
    - Strong isolation
    - Heavyweight
  - Unikernels: specialized VMs (e.g. MiniOS, ClickOS…)
    - Strong isolation
    - Very lightweight
    - Very good security properties
  - They break the “myth” of VMs being heavyweight…

- 7. What is a Unikernel?
  - Specialized VM: single application + minimalistic OS
  - Single address space and co-operative scheduler, hence low overheads
  - Unikernel virtualization platforms extend existing hypervisors (e.g. XEN)
  - Figure: a general purpose OS (e.g., Linux, FreeBSD) runs app1 … appN in user space and driver1 … driverN in kernel space; a Unikernel runs a single app with vdriver1 and vdriver2 on a minimalistic OS (e.g., MiniOS, OSv) in a single address space

- 8. ClickOS Unikernel
  - The ClickOS Unikernel combines:
    - Click modular router: a software architecture to build flexible and configurable routers
    - MiniOS: a minimalistic Unikernel OS available with the Xen sources
  - ClickOS VMs:
    - Are small: ~6 MB
    - Boot quickly: a few ms
    - Add little delay: ~45 µs
    - Support ~10 Gb/s throughput for almost all packet sizes

  13/07/2017 CNIT

- 9. Unikernels (ClickOS) memory footprint and boot time
  - VM configuration: MiniOS, 1 VCPU, 8 MB RAM, 1 VIF
  - Boot time: state of the art results, and recent results from Superfluidity obtained by redesigning the XEN toolstack (figures shown on the slide: 4 ms and 87.77 ms)
  - Memory footprint
    - Hello world guest VM: 296 KB
    - Ponger (ping responder) guest VM: ~700 KB

- 11. VM instantiation and boot time: typical performance (no Unikernels)
  - Stages following the orchestrator request, with typical times:
    - VIM operations: 1-2 s
    - Virtualization platform: 5-10 s
    - Guest OS (VM) boot time: ~1 s

- 12. VM instantiation and boot time with Unikernels
  - Stages following the orchestrator request:
    - VIM operations: 1-2 s
    - Virtualization platform: ~1 ms (XEN hypervisor enhancements)
    - Guest OS (VM) boot time: ~1 ms (Unikernels)
  - Unikernels and hypervisor enhancements can provide low instantiation times for “Micro-VNFs”

- 13. VM instantiation and boot time with Unikernels
  - Stages following the orchestrator request:
    - VIM operations: 1-2 s
    - Virtualization platform: ~1 ms (XEN hypervisor enhancements)
    - Guest OS (VM) boot time: ~1 ms (Unikernels)
  - Unikernels and hypervisor enhancements can provide low instantiation times for “Micro-VNFs”
  - Can we improve VIM performance?
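
The stage times quoted on the previous slides can be compared with a few lines of arithmetic. This is only a back-of-the-envelope sketch: it assumes the three figures map, in order, to VIM operations, virtualization platform and guest boot, and it uses the midpoints of the quoted ranges. The dictionary keys and the `vim_share` helper are illustrative names, not part of any tool.

```python
# Midpoints of the ranges quoted on the slides, in seconds.
# Assumption: the figures map, in order, to VIM operations,
# virtualization platform and guest OS boot.
TYPICAL = {"vim": 1.5, "platform": 7.5, "guest_boot": 1.0}
UNIKERNEL = {"vim": 1.5, "platform": 0.001, "guest_boot": 0.001}


def vim_share(stages):
    """Fraction of the total instantiation time spent in VIM operations."""
    return stages["vim"] / sum(stages.values())


for label, stages in (("typical VM", TYPICAL), ("Unikernel on enhanced Xen", UNIKERNEL)):
    total = sum(stages.values())
    print(f"{label}: total {total:.3f} s, VIM share {vim_share(stages):.1%}")
```

Under these assumptions the VIM accounts for a small part of the total for a typical VM but for almost all of it once the platform and guest stages drop to milliseconds, which is why the VIM becomes the next target for tuning.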

- 15. Performance analysis and tuning of Virtual Infrastructure Managers (VIMs) for Unikernel VNFs
  - We considered 3 VIMs (OpenStack, Nomad, OpenVIM)
    - General model of the VNF instantiation process, and mapping of the operations of the 3 VIMs onto the general model
    - (Quick & dirty) modifications to the VIMs to instantiate Micro-VNFs based on the ClickOS Unikernel
    - Performance evaluation

- 16. Virtual Infrastructure Managers (VIMs)
  - We considered three VIMs:
  - OpenStack Nova
    - OpenStack is composed of subprojects
    - Nova handles orchestration and management of computing resources, so it plays the VIM role
    - 1 Nova node (scheduling) + several compute nodes (which interact with the hypervisor)
    - Not tied to a specific virtualization technology
  - Nomad by HashiCorp
    - Minimalistic cluster manager and job scheduler
    - Nomad server (scheduling) + Nomad clients (which interact with the hypervisor)
    - Not tied to a specific virtualization technology
  - OpenVIM
    - NFV-specific VIM, originally developed by the OpenMANO open source project, now maintained in the context of ETSI OSM

- 17. Results: ClickOS instantiation times (OpenStack, Nomad, OpenVIM)
  - Figure: three plots of instantiation time in seconds, one each for OpenStack Nova, Nomad and OpenVIM

- 18. Results: ClickOS instantiation times, OpenVIM
  - Measurements taken on cloudlab.us
  - We have split the scheduling time into the actual scheduling decision time and the database access time
  - Can database access time be reduced?

- 20. Extending the ETSI NFV models to support Unikernels
  - In the NFV models, a Virtual Network Function (VNF) is decomposed into Virtual Deployment Units (VDUs)
  - We extended the VDU information elements in the model to support Unikernel VDUs (based on the ClickOS Unikernel)
  - “Regular” VDUs based on traditional VMs and Unikernel VDUs can coexist in the same VNF Descriptor

- 21. Working prototype (see the live demo!)
  - Figure: orchestrator prototype (RDCL 3D), VIM (OpenVIM) and XEN, driven by ETSI release 2 descriptors (NSD, VNFD)
  - Our orchestrator prototype (RDCL 3D) uses the enhanced VDU descriptors and interacts with OpenVIM
  - OpenVIM has been enhanced to support XEN and Unikernels
  - We configured XEN to support both regular VMs (HVM) and Click Unikernels

- 22. Working prototype (see the live demo!)
  - Screenshot annotations: “This is a regular VM (XEN HVM)”; “These are 3 Unikernel VMs (ClickOS)”

- 23. Unikernels Chaining Proof of Concept
  - Figure: on top of OpenVSwitch, OpenVIM deploys 1 “regular” VM (Linux Alpine) and 3 Unikernel VMs (ClickOS): a VLAN Encapsulator/Decapsulator, a Firewall and an ICMP responder
  - Descriptors given to OpenVIM: “Regular” Linux Alpine VM Descriptor, VLAN Encapsulator/Decapsulator Descriptor, Firewall Descriptor, ICMP Responder Descriptor

- 24. Unikernel Chaining Proof of Concept
  - Regular VM: pings towards the ICMP responder over a VLAN
  - VLAN Encapsulator/Decapsulator: decapsulates the VLAN header (and re-encapsulates in the return path)
  - Firewall: lets through only ARP packets and IP packets with TOS == 0xcc
  - ICMP Responder: responds to ARP and ICMP echo requests
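
The firewall rule in this chain can be illustrated with a short packet classifier. The sketch below is written in Python for readability; it is not the Click configuration used in the demo. It assumes untagged Ethernet frames (the VLAN header has already been removed by the previous element in the chain), and `firewall_allows` is a hypothetical helper name.

```python
import struct

ETHERTYPE_IPV4 = 0x0800
ETHERTYPE_ARP = 0x0806
ALLOWED_TOS = 0xCC
ETH_HEADER_LEN = 14  # destination MAC (6) + source MAC (6) + EtherType (2)


def firewall_allows(frame: bytes) -> bool:
    """Return True if an untagged Ethernet frame passes the PoC firewall rule."""
    if len(frame) < ETH_HEADER_LEN:
        return False
    (ethertype,) = struct.unpack("!H", frame[12:14])
    if ethertype == ETHERTYPE_ARP:
        return True  # ARP is always let through
    if ethertype == ETHERTYPE_IPV4 and len(frame) >= ETH_HEADER_LEN + 2:
        # The TOS field is the second byte of the IPv4 header.
        return frame[ETH_HEADER_LEN + 1] == ALLOWED_TOS
    return False
```

With this rule, a ping from the regular VM reaches the ICMP responder only if its IP packets carry TOS 0xcc, while ARP resolution works regardless.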

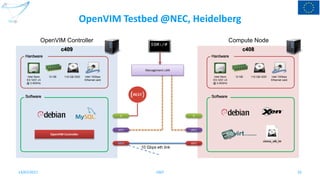

- 25. Some details of the working prototype
  - Figure: RDCL 3D GUI, RDCL 3D Agent, VIM (OpenVIM), libvirt and XEN; the inputs are ETSI release 2 descriptors (NSD, VNFD) and Click configurations, and the Agent produces the ClickOS images
  - ClickOS images are prepared “on the fly” by the RDCL 3D Agent using the Click configuration files
  - The RDCL 3D web application and Agent are running in our lab @uniroma2, Roma
  - OpenVIM and the Host/Compute Node are running in a testbed @NEC, Heidelberg

- 26. OpenVIM Testbed @NEC, Heidelberg
  - c409 (OpenVIM Controller)
    - Hardware: Intel Xeon E3-1231 v3 @ 3.40GHz, 12 GB, 110 GB HDD, Intel 10Gbps Ethernet card
    - Interfaces: lo, eth1, eth3
  - c408 (Compute Node)
    - Hardware: Intel Xeon E3-1231 v3 @ 3.40GHz, 12 GB, 110 GB HDD, Intel 10Gbps Ethernet card
    - Interfaces: lo, eth1, eth3
    - Software: clickos_x86_64
  - The two machines are connected by a 10 Gbps Ethernet link and by a management LAN

- 28. OpenVIM Extensions details
  - Extensions to OpenVIM to support Unikernel VMs
    - Xen hypervisor support
      - Unikernel support (in particular, ClickOS Unikernels)
      - Full HVM machines support
      - Coexistence of Unikernels and HVM VMs on the same compute node
  - To specify that we are using the Xen hypervisor and Unikernels, the openvimd.cfg configuration file is extended with a new value for the “mode” tag:
    - mode: “unikernel”

- 29. OpenVIM Patch
  - A new mode called “unikernel” is added
  - “unikernel” mode is an extension of the “development” mode:
    - it excludes the EPA features
    - it enables support for Xen and same-node VNF chaining
  - Backward compatibility with the original OpenVIM modes (“normal”, “test”, “host only”, “OF only”, “development”) is preserved
  - NB: because “unikernel” mode extends “development” mode, it can run on hardware that does not meet all the requirements of the “normal” OpenVIM mode

- 30. New descriptor tags
  - Server (VMs) descriptor, new tags:
    - hypervisor [kvm|xen-unik|xenhvm]: defines which hypervisor is used. “kvm” reflects the original mode, while “xen-unik” and “xenhvm” start Xen with support for Unikernels and full VMs respectively.
    - osImageType [clickos]: defines the type of Unikernel image to start. It is mandatory if hypervisor = xen-unik. Currently, only ClickOS Unikernels are supported, but this tag allows future support of different types of Unikernels.
  - Host (Compute Nodes) descriptor, new flag:
    - hypervisors (comma-separated list of kvm, xen-unik, xenhvm): defines the hypervisors supported by the compute node. NB: on a compute node kvm and xen* are mutually exclusive, while xenhvm and xen-unik can coexist.
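
The constraints stated for the new tags can be written down as a small validator. This is a minimal sketch and not the OpenVIM code: `validate_server` and `parse_host_hypervisors` are hypothetical helpers, descriptors are assumed to be already loaded into plain dictionaries, and defaulting to `kvm` when the `hypervisor` tag is absent is an assumption based on “kvm” reflecting the original mode.

```python
HYPERVISORS = {"kvm", "xen-unik", "xenhvm"}
UNIKERNEL_IMAGE_TYPES = {"clickos"}  # only ClickOS is supported for now


def validate_server(server: dict) -> None:
    """Raise ValueError if the new server tags are inconsistent."""
    # Assumption: a descriptor without the tag keeps the original kvm behaviour.
    hypervisor = server.get("hypervisor", "kvm")
    if hypervisor not in HYPERVISORS:
        raise ValueError(f"unknown hypervisor: {hypervisor!r}")
    if hypervisor == "xen-unik":
        image_type = server.get("osImageType")
        if image_type is None:
            raise ValueError("osImageType is mandatory when hypervisor is xen-unik")
        if image_type not in UNIKERNEL_IMAGE_TYPES:
            raise ValueError(f"unsupported osImageType: {image_type!r}")


def parse_host_hypervisors(value: str) -> set:
    """Parse the comma-separated host 'hypervisors' flag into a set."""
    hypervisors = {item.strip() for item in value.split(",") if item.strip()}
    unknown = hypervisors - HYPERVISORS
    if unknown:
        raise ValueError(f"unknown hypervisors: {sorted(unknown)}")
    # kvm and xen* are mutually exclusive; xenhvm and xen-unik may coexist.
    if "kvm" in hypervisors and len(hypervisors) > 1:
        raise ValueError("kvm and xen* are mutually exclusive on a compute node")
    return hypervisors
```

For example, `parse_host_hypervisors("xen-unik,xenhvm")` is accepted, while `"kvm,xenhvm"` is rejected.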

- 31. Scheduling enhancements
  - The Compute Node is now selected based on the available resources AND the type of hypervisor.
  - If a specific Compute Node is requested for a Server (using the “hostId” tag), a consistency check is performed between the requested hypervisor type and the hypervisor types supported by the Compute Node. An error is returned if the hypervisor type is not supported.
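
The selection logic described on this slide can be sketched as follows. This is an illustration under stated assumptions, not the scheduler in the patch: `select_host` is a hypothetical function, the host fields `uuid`, `free_ram_mb` and `free_vcpus` are invented for the example, each host's `hypervisors` is assumed to be a set, and taking the first suitable host is a simplification.

```python
def select_host(hosts, server, flavor):
    """Pick a compute node for `server`, or raise if none is suitable."""
    # Assumption: kvm is used when the server descriptor has no hypervisor tag.
    wanted = server.get("hypervisor", "kvm")

    host_id = server.get("hostId")
    if host_id is not None:
        host = next((h for h in hosts if h["uuid"] == host_id), None)
        if host is None:
            raise LookupError(f"unknown host: {host_id}")
        # Consistency check between requested and supported hypervisor types.
        if wanted not in host["hypervisors"]:
            raise ValueError(f"host {host_id} does not support hypervisor {wanted!r}")
        return host

    for host in hosts:
        has_resources = (
            host["free_ram_mb"] >= flavor["ram"]
            and host["free_vcpus"] >= flavor["vcpus"]
        )
        # Both conditions must hold: enough resources AND the right hypervisor.
        if has_resources and wanted in host["hypervisors"]:
            return host
    raise LookupError("no compute node with enough resources and the requested hypervisor")
```

A server descriptor with `hypervisor: "xen-unik"` is then placed only on nodes whose `hypervisors` flag lists `xen-unik`, and pinning it with `hostId` to a kvm-only node fails with an error.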

- 32. OpenVIM Flavor and Image descriptors
  - Flavor

        flavor:
          name: CloudVM_1C_8M
          description: clickos cloud image with 8M, 1core
          ram: 8
          vcpus: 1

        $ openvim flavor-create flavor_1C_8M.yaml
        5a258552-0a51-11e7-a086-0cc47a7794be CloudVM_1C_8M

  - Image

        image:
          name: clickos-ping
          description: click-os ping image
          path: /var/lib/libvirt/images/clickos_ping
          metadata:
            use_incremental: "no"

        $ openvim image-create vmimage-clickos-ping.yaml
        c418a8ec-10c1-11e7-ad8f-0cc47a7794be clickos-ping

- 33. OpenVIM Network descriptor
  - Net

        network:
          name: firewall_ping
          type: bridge_data
          provider: OVS:200
          enable_dhcp: false
          shared: false

        $ openvim net-create net-firewall_ping.yaml
        f136bd32-3fd8-11e7-ad8f-0cc47a7794be firewall_ping ACTIVE

- 34. ClickOS «Server» descriptor (extended)
  - Server (the new tags are hypervisor and osImageType)

        server:
          name: vm-clickos-ping2
          description: ClickOS ping vm with simple requisites.
          imageRef: 'c418a8ec-10c1-11e7-ad8f-0cc47a7794be'
          flavorRef: '5a258552-0a51-11e7-a086-0cc47a7794be'
          # hostId: '195d4fb2-54fe-11e7-ad8f-0cc47a7794be'
          start: "yes"
          hypervisor: "xen-unik"
          osImageType: "clickos"
          networks:
          - name: vif0
            uuid: f136bd32-3fd8-11e7-ad8f-0cc47a7794be
            mac_address: "00:15:17:15:5d:74"

        $ openvim vm-create <server-descriptor>.yaml
        56c0edb0-5b4c-11e7-ad8f-0cc47a7794be vm-clickos-ping2 Created

- 35. Host descriptor (extended)
  - Host (the new tag is hypervisors)

        {
          "host":{
            "name": "nec-test-408-eth3",
            "user": "compute408",
            "password": "*****",
            "ip_name": "10.0.11.2"
          },
          "host-data":
          {
            "name": "nec-test-408-eth3",
            "ranking": 300,
            "description": "compute host for openvim testing",
            "ip_name": "10.0.11.2",
            "features": "lps,dioc,hwsv,ht,64b,tlbps",
            "hypervisors": "xen-unik,xenhvm",
            "user": "compute408",
            "password": "*****",
            ...
        }

- 36. Libvirt XML descriptor for ClickOS generated by OpenVIM

      <domain type='xen'>
        <name>vm-clickos-ping2_56c0edb0-5b4c-11e7-ad8f-0cc47a7794be</name>
        <uuid>56c0edb0-5b4c-11e7-ad8f-0cc47a7794be</uuid>
        <memory unit='KiB'>8192</memory>
        <currentMemory unit='KiB'>8192</currentMemory>
        <vcpu>1</vcpu>
        <os>
          <type arch='x86_64' machine='xenpv'>xen</type>
          <kernel>/var/lib/libvirt/images/clickos_ping</kernel>
        </os>
        <features>
          <acpi/>
          <apic/>
          <pae/>
        </features>
        <cpu mode='host-model'></cpu>
        <clock offset='utc'/>
        <on_poweroff>destroy</on_poweroff>
        <on_reboot>restart</on_reboot>
        <on_crash>destroy</on_crash>
        <devices>
          <console type='pty'>
            <target type='xen' port='0'/>
          </console>
          <interface type='bridge'>
            <source bridge='ovim-firewall_ping'/>
            <script path='vif-openvswitch'/>
            <mac address='00:15:17:15:5d:74'/>
          </interface>
        </devices>
      </domain>
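
To show how the server, flavor, image and network descriptors map onto this XML, here is a minimal sketch that builds a subset of the domain definition with Python's standard library. It is not the generator used inside OpenVIM: `clickos_domain_xml` and its parameters are hypothetical, several elements of the full descriptor (features, clock, console, lifecycle actions) are omitted for brevity, and the `ovim-` bridge prefix is simply inferred from the example above.

```python
import xml.etree.ElementTree as ET


def clickos_domain_xml(name, uuid, ram_mb, vcpus, kernel_path, network, mac):
    """Build a reduced libvirt domain XML string for a ClickOS guest on Xen."""
    domain = ET.Element("domain", type="xen")
    ET.SubElement(domain, "name").text = f"{name}_{uuid}"
    ET.SubElement(domain, "uuid").text = uuid
    # The flavor gives RAM in MB (ram: 8); the example XML uses KiB (8192).
    ET.SubElement(domain, "memory", unit="KiB").text = str(ram_mb * 1024)
    ET.SubElement(domain, "vcpu").text = str(vcpus)

    os_el = ET.SubElement(domain, "os")
    ET.SubElement(os_el, "type", arch="x86_64", machine="xenpv").text = "xen"
    # The Unikernel image is booted directly as the guest kernel.
    ET.SubElement(os_el, "kernel").text = kernel_path

    devices = ET.SubElement(domain, "devices")
    iface = ET.SubElement(devices, "interface", type="bridge")
    # Assumption: the bridge name is "ovim-" followed by the network name.
    ET.SubElement(iface, "source", bridge=f"ovim-{network}")
    ET.SubElement(iface, "script", path="vif-openvswitch")
    ET.SubElement(iface, "mac", address=mac)
    return ET.tostring(domain, encoding="unicode")


xml_text = clickos_domain_xml(
    name="vm-clickos-ping2",
    uuid="56c0edb0-5b4c-11e7-ad8f-0cc47a7794be",
    ram_mb=8,
    vcpus=1,
    kernel_path="/var/lib/libvirt/images/clickos_ping",
    network="firewall_ping",
    mac="00:15:17:15:5d:74",
)
print(xml_text)
```

The point to notice is that a ClickOS guest has no disk: the image path from the Image descriptor ends up in the `<kernel>` element of a paravirtualized Xen domain.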

- 37. Installation of the extended OpenVIM
  - Download the extended version of OpenVIM from our repository:

        $ git clone https://github.com/superfluidity/openvim4unikernels.git

  - Install OpenVIM via the bash script:

        openvim/scripts$ ./install-openvim.sh --noclone

  - Our extensions are in the “unikernel” branch:

        openvim/scripts$ git checkout unikernel
        unikernel/scripts$ ./unikernels_patch_vim_db.sh -u vim -p vimpw install

  - After updating the database, you can start OpenVIM as usual.

- 38. Repository structure
  - The Unikernel folder contains some tools and examples that are useful to start working with our patch.
  - Descriptors contains some preconfigured ClickOS images and example descriptors to start working with the Unikernels.
  - Docs contains the documentation.
  - The Scripts folder contains a bash script that updates the OpenVIM database to support the new fields for unikernel operations, and a script with a quick example to start working with ClickOS.

- 39. Status checks after VM startup
  - After the VM startup has completed, we can check its status with the Libvirt and Xen command line tools on the target compute node
  - On the Libvirt CLI:

        $ virsh -c xen:/// list
         Id    Name                                                     State
        ----------------------------------------------------
         105   vm-clickos-ping2_56c0edb0-5b4c-11e7-ad8f-0cc47a7794be    running

  - On the Xen console:

        $ sudo xl list
        Name                                                     ID   Mem VCPUs  State   Time(s)
        Domain-0                                                  0 10238     8  r-----  96646.2
        vm-clickos-ping2_56c0edb0-5b4c-11e7-ad8f-0cc47a7794be   105     8     1  r-----    227.6
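
If the status check needs to be automated, the `virsh list` output shown above can be parsed with a few lines of Python. This is a hedged sketch: `parse_virsh_list` is a hypothetical helper, it only handles running domains that have a numeric Id, and it works on captured text rather than invoking `virsh` itself.

```python
def parse_virsh_list(output: str) -> list:
    """Extract running domains (those with a numeric Id) from `virsh list` text."""
    domains = []
    for line in output.splitlines():
        fields = line.split(None, 2)  # Id, Name, State (state may contain spaces)
        if len(fields) == 3 and fields[0].isdigit():
            domains.append(
                {"id": int(fields[0]), "name": fields[1], "state": fields[2].strip()}
            )
    return domains


SAMPLE = """\
 Id    Name                                                     State
----------------------------------------------------
 105   vm-clickos-ping2_56c0edb0-5b4c-11e7-ad8f-0cc47a7794be    running
"""

for domain in parse_virsh_list(SAMPLE):
    print(domain["id"], domain["name"], domain["state"])
```

Since OpenVIM names the domain as the server name followed by its UUID, the `name` field can be matched against the UUID returned when the server was created.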

- 40. OpenVIM Networking extensions
  - NB: these extensions are independent of the previous ones; we have used them to support VNF chaining in the proposed example
  - Networking enhancements
    - Different networking model: a different OVS datapath (within the same OVS instance) is associated with each OpenVIM network
      - This makes it easier to support VNF chaining on the same compute node
      - We plan to extend it to work across multiple compute nodes (with VXLAN tunneling)

- 41. Conclusions: feedback?
  - We have designed and implemented a solution for the combined orchestration of regular VMs and Unikernels
  - ETSI NFV descriptors have been extended. Feedback?
  - The OpenVIM implementation has been extended. We would like to propose a patch upstream… Feedback?
  - In the demo, we use our orchestrator prototype RDCL 3D… Is there any interest in extending the OSM orchestrator to support Unikernel-based scenarios?

- 42. Thank you. Questions?
  - Contacts: Stefano Salsano, University of Rome Tor Vergata / CNIT
  - These tools are available on GitHub (Apache 2.0 license):
    - https://github.com/superfluidity/RDCL3D
    - https://github.com/superfluidity/openvim4unikernels
    - https://github.com/netgroup/vim-tuning-and-eval-tools
  - http://superfluidity.eu/
  - The work presented here only covers a subset of the work performed in the project

- 43. References
  - SUPERFLUIDITY project home page, http://superfluidity.eu/
  - G. Bianchi, et al., “Superfluidity: a flexible functional architecture for 5G networks”, Transactions on Emerging Telecommunications Technologies 27, no. 9, Sep 2016
  - P. L. Ventre, C. Pisa, S. Salsano, G. Siracusano, F. Schmidt, P. Lungaroni, N. Blefari-Melazzi, “Performance Evaluation and Tuning of Virtual Infrastructure Managers for (Micro) Virtual Network Functions”, IEEE NFV-SDN Conference, Palo Alto, USA, 7-9 November 2016, http://netgroup.uniroma2.it/Stefano_Salsano/papers/salsano-ieee-nfv-sdn-2016-vim-performance-for-unikernels.pdf
  - S. Salsano, F. Lombardo, C. Pisa, P. Greto, N. Blefari-Melazzi, “RDCL 3D, a Model Agnostic Web Framework for the Design and Composition of NFV Services”, submitted paper, https://arxiv.org/abs/1702.08242

- 44. References: speed-up of virtualization platforms / guests
  - J. Martins, M. Ahmed, C. Raiciu, V. Olteanu, M. Honda, R. Bifulco, F. Huici, “ClickOS and the art of network function virtualization”, NSDI 2014, 11th USENIX Conference on Networked Systems Design and Implementation, 2014
  - F. Manco, J. Martins, K. Yasukata, J. Mendes, S. Kuenzer, F. Huici, “The Case for the Superfluid Cloud”, 7th USENIX Workshop on Hot Topics in Cloud Computing (HotCloud 15), 2015
  - Recent unpublished results are included in this presentation: S. Salsano, F. Huici, “Superfluid NFV: VMs and Virtual Infrastructure Managers speed-up for instantaneous service instantiation”, invited talk at EWSDN 2016 workshop, 10 October 2016, The Hague, Netherlands, http://www.slideshare.net/stefanosalsano/superfluid-nfv-vms-and-virtual-infrastructure-managers-speedup-for-instantaneous-service-instantiation

- 45. The SUPERFLUIDITY project has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No. 671566 (Research and Innovation Action). The information given is the author’s view and does not necessarily represent the view of the European Commission (EC). No liability is accepted for any use that may be made of the information contained.
