Tailoring Small Language Models for Enterprise Use Cases

- 1. Tailoring Small Language Models for Enterprise Use Cases Julien Simon, Chief Evangelist [email protected] linkedin.com/in/juliensimon youtube.com/juliensimonfr

- 3. Why customers prefer Small Language Models (SLM)
  - Accessibility: anyone can use the models, regardless of budget or affiliation
  - Transparency: customers have full visibility on model weights
  - Privacy: customers don't have to send their data to black box APIs
  - IP protection: customers train models on their data, and own them
  - Freedom of choice: customers are not locked in. They can switch models anytime
  - IT flexibility: customers can train and deploy models anywhere they like, using any technology
  - Cost optimization: customers can find the cost/performance sweet spot for each project
  - Model quality: a small tailored model will always outperform a generic large model

- 4. A typical model adaptation workflow
  - Pretrained model → Continuous pre-training (CPT) on an unlabeled domain dataset → Domain-adapted model
  - Domain-adapted model → Instruction fine-tuning (IFT) on a Q&A dataset → Instruction-tuned model
  - Instruction-tuned model → Alignment on a preference dataset → Aligned model
  - Instruction pre-training: uses the unlabeled domain dataset + Q&A dataset
  - References
    - « Language Models are Few-Shot Learners » https://arxiv.org/abs/2005.14165 (05/2020)
    - « Finetuned Language Models Are Zero-Shot Learners » https://arxiv.org/abs/2109.01652 (09/2021)
    - « Efficient Continual Pre-training for Building Domain Specific Large Language Models » https://arxiv.org/abs/2311.08545 (11/2023)
    - « Instruction Pre-Training: Language Models are Supervised Multitask Learners » https://arxiv.org/abs/2406.14491v1 (06/2024)
    - « How Do Large Language Models Acquire Factual Knowledge During Pretraining? » https://arxiv.org/abs/2406.11813v1 (06/2024)

- 5. Continuous pre-training (CPT)
  - (Continuous) pre-training involves training the model on a large corpus, often billions of tokens
  - Option 1 - Full fine-tuning (FFT): train the full model in its original precision (say, BF16)
    - Compute-heavy and expensive
  - Option 2 - Use Parameter-Efficient Fine-Tuning (PEFT), e.g. LoRA or QLoRA
    - https://arxiv.org/abs/2305.14314 (05/2023)
    - Large memory savings, enabling smaller GPUs and larger batch sizes
    - Very effective for Instruction Fine-Tuning (IFT) and alignment
    - Significant accuracy degradation for CPT: https://blog.arcee.ai/why-methods-like-qlora-fall-short-in-domain-knowledge-injection-2/
  - Option 3 - Train only the most contributing layers in original precision
    - Spectrum: https://arxiv.org/abs/2406.06623 (06/2024) + https://blog.arcee.ai/optimizing-llm-training-with-spectrum/
    - https://github.com/cognitivecomputations/spectrum
    - Spectrum-25 outperforms QLoRA on memory usage, training speed, and accuracy
    - Spectrum-50 accuracy is on par with or better (!) than FFT, and within 10% of QLoRA savings
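
Option 3 can be pictured as a ranking problem: score each layer, then unfreeze only the top fraction. The snippet below is a minimal sketch of that selection step, not Spectrum's actual implementation. The layer names, the scores, and the helper `select_trainable_layers` are hypothetical, and it assumes that the suffix in "Spectrum-25" denotes the percentage of layers that are trained.

```python
def select_trainable_layers(score_by_layer, fraction):
    """Return the names of the highest-scoring `fraction` of layers.

    `score_by_layer` maps a layer name to a contribution score
    (hypothetical values here; how the score is computed is out of scope).
    """
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    count = max(1, round(len(score_by_layer) * fraction))
    ranked = sorted(score_by_layer, key=score_by_layer.get, reverse=True)
    return set(ranked[:count])


# Hypothetical per-layer scores for a tiny 8-layer model.
scores = {
    "layers.0.attn": 0.12, "layers.0.mlp": 0.40,
    "layers.1.attn": 0.33, "layers.1.mlp": 0.25,
    "layers.2.attn": 0.71, "layers.2.mlp": 0.18,
    "layers.3.attn": 0.05, "layers.3.mlp": 0.93,
}

# Train the top 25% of layers; every other layer stays frozen.
trainable = select_trainable_layers(scores, 0.25)
frozen = set(scores) - trainable
```

In a real training loop, the layers in `frozen` would have gradient computation disabled, so that optimizer state is only allocated for the selected layers.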

- 6. Fine-tuning
  - Low Rank Adaptation (LoRA): https://arxiv.org/abs/2106.09685
    - Hypothesis: updates can be learned with two much smaller matrices
    - LoRA reduces the number of trainable parameters by 1,000x or more, with minimal loss of accuracy
    - At inference time, learned parameters are simply added to the original parameters: no extra latency
  - QLoRA: LoRA for quantized models: https://arxiv.org/abs/2305.14314
    - Quantize a pre-trained model to 4-bit and fine-tune it with LoRA
    - "QLoRA reduces the average memory requirements of fine-tuning a 65B parameter model from >780GB of GPU memory to <48GB without degrading the runtime or predictive performance compared to a 16-bit fully fine-tuned baseline".
  - The quality (diversity and complexity) of your Q&A dataset is important
    - https://github.com/arcee-ai/EvolKit: a toolkit to enhance Q&A fine-tuning datasets
    - Dataset generated with EvolKit: https://huggingface.co/datasets/arcee-ai/EvolKit-20k
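
To make the two LoRA claims concrete (two much smaller matrices, and no extra latency once they are added to the original weights), here is a small pure-Python sketch. The matrices, their sizes, and the helper names are illustrative assumptions, not taken from the slides.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add(a, b):
    """Element-wise sum of two matrices of the same shape."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def lora_param_counts(d_out, d_in, rank):
    """Trainable parameters for one full matrix vs. its rank-`rank` LoRA factors."""
    return d_out * d_in, rank * (d_out + d_in)


# Frozen pretrained weight W (4x4) and low-rank factors B (4x1), A (1x4): rank 1.
W = [[1.0, 0.0, 2.0, 0.0],
     [0.0, 1.0, 0.0, 2.0],
     [3.0, 0.0, 1.0, 0.0],
     [0.0, 3.0, 0.0, 1.0]]
B = [[1.0], [0.0], [2.0], [0.0]]
A = [[0.5, 0.0, 0.0, 1.0]]
x = [[1.0], [2.0], [3.0], [4.0]]

# During training the adapter runs next to the frozen weight: y = W x + B (A x).
y_adapter = add(matmul(W, x), matmul(B, matmul(A, x)))

# For inference the update is folded in once: W' = W + B A, then y = W' x.
W_merged = add(W, matmul(B, A))
y_merged = matmul(W_merged, x)

# Per-matrix parameter count for a 4096x4096 projection at rank 8.
full_params, lora_params = lora_param_counts(4096, 4096, 8)
```

`y_adapter` and `y_merged` are identical, which is why the merged model has the same inference cost as the original one. The per-matrix saving here is 256x; the overall figure for a model also depends on the chosen rank and on how many of its matrices receive adapters.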

- 7. "LoRA Land: 310 Fine-tuned LLMs that rival GPT-4" https://arxiv.org/abs/2405.00732 (04/2024)
  - 10 base models
  - 31 tasks in 5 categories
    - Classic NLP
    - Coding
    - Knowledge
    - Reasoning
    - Math
  - Consistent prompting
    - Completion
    - Zero or single-shot
  - Fine-tuning
    - 4-bit QLoRA
    - A single A10 GPU (!)
    - No hyperparameter tuning
  - Results
    - 301/310 models surpass their base model counterpart.
    - The best fine-tuned LLM outperforms the best base model by +8.3 to +67.5 points, +25.0 points on average.
    - All fine-tuned models perform better than GPT-3.5.
    - 224/310 fine-tuned LLMs surpass the benchmark set by GPT-4.
    - All 7B fine-tuned models perform better than GPT-4, except for gemma-7b and gemma-7b-it.

- 8. Reinforcement Learning with Human Feedback (RLHF) https://huyenchip.com/2023/05/02/rlhf.html

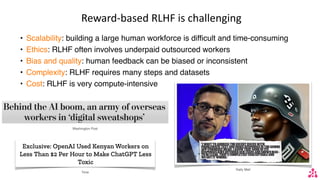

- 9. Reward-based RLHF is challenging
  - Scalability: building a large human workforce is difficult and time-consuming
  - Ethics: RLHF often involves underpaid outsourced workers
  - Bias and quality: human feedback can be biased or inconsistent
  - Complexity: RLHF requires many steps and datasets
  - Cost: RLHF is very compute-intensive
  - Sources: Washington Post, Time, Daily Mail

- 10. Reward-free RLHF: Direct Preference Optimization (DPO) https://arxiv.org/abs/2305.18290 (05/2023)
  - DPO eliminates the need for a reward model
  - The final model is trained on a statistical estimation of preference data
  - https://huggingface.co/datasets/arcee-ai/general-dpo-datasets
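
The DPO paper replaces the reward model with a loss computed directly on (chosen, rejected) pairs, using the log-probabilities from the model being trained and from a frozen reference model. The snippet below is a minimal per-example sketch of that loss. The function and argument names and the value of `beta` are assumptions chosen for illustration, and real implementations work on batched tensors.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one preference pair, from sequence log-probabilities.

    loss = -log(sigmoid(beta * ((pi_w - ref_w) - (pi_l - ref_l))))
    where w is the chosen answer and l is the rejected one.
    """
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(z)) == log(1 + exp(-z))
    return math.log1p(math.exp(-logits))


# Policy identical to the reference: the loss is log(2), i.e. no preference learned yet.
loss_start = dpo_loss(-10.0, -12.0, -10.0, -12.0)

# Policy raises the chosen answer and lowers the rejected one: the loss drops.
loss_better = dpo_loss(-8.0, -14.0, -10.0, -12.0)

# Policy moves the wrong way: the loss grows.
loss_worse = dpo_loss(-12.0, -10.0, -10.0, -12.0)
```

Minimizing this loss pushes the model to widen the gap between chosen and rejected answers relative to the reference model, which is how the preference signal is learned without a separate reward model.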

- 11. Model Merging https://arxiv.org/abs/2403.13257 (03/2024) + https://github.com/arcee-ai/mergekit
  - Building a "great" model is challenging.
  - Multiple training and fine-tuning steps are time-consuming and compute-intensive
  - Instead, can we build a model by merging several models that already have the properties we need?
  - Combine multiple task-specific models into a single multitask model without any additional training
  - Not an ensembling technique: there's only one model at the end
  - Merging only requires lightweight CPU compute
  - Fast process, no extra cost for training and inference, no extra inference latency
  - Example merge configuration:

        models:
          - model: mistralai/Mistral-7B-Instruct-v0.2
            parameters:
              density: 0.5
              weight: 0.5
          - model: BioMistral/BioMistral-7B
            parameters:
              density: 0.5
              weight: 0.5
        merge_method: ties
        base_model: mistralai/Mistral-7B-v0.1
        parameters:
          normalize: false
          int8_mask: true
        dtype: float16
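
The configuration above uses `merge_method: ties` with a `density` and a `weight` per model. As a rough illustration of what those parameters control, here is a simplified TIES-style merge on toy weight vectors. It is a sketch under stated assumptions, not mergekit's implementation: the helper names and the numbers are hypothetical, and it works on flat Python lists rather than model tensors.

```python
def trim(delta, density):
    """Keep the largest-magnitude `density` fraction of entries; zero the rest."""
    keep_count = max(1, round(len(delta) * density))
    order = sorted(range(len(delta)), key=lambda i: abs(delta[i]), reverse=True)
    keep = set(order[:keep_count])
    return [value if i in keep else 0.0 for i, value in enumerate(delta)]


def ties_merge(base, models, weights, density):
    """Merge fine-tuned `models` into `base` (no normalization of the result)."""
    # 1. Task vectors: what each fine-tuned model changed relative to the base.
    deltas = [[m - b for m, b in zip(model, base)] for model in models]
    # 2. Trim small changes, then apply each model's weight.
    deltas = [[w * v for v in trim(d, density)] for d, w in zip(deltas, weights)]
    merged = []
    for i, base_value in enumerate(base):
        column = [d[i] for d in deltas]
        # 3. Elect a sign per parameter from the total weighted change.
        sign = 1.0 if sum(column) >= 0 else -1.0
        # 4. Sum only the changes that agree with the elected sign.
        merged.append(base_value + sum(v for v in column if v * sign > 0))
    return merged


# Toy "models" with four parameters each (hypothetical values).
base = [0.0, 0.0, 0.0, 0.0]
model_a = [1.0, 0.1, -2.0, 0.0]
model_b = [0.5, -3.0, 1.0, 0.2]

merged = ties_merge(base, [model_a, model_b], weights=[0.5, 0.5], density=0.5)
```

With `density: 0.5`, each model contributes only the half of its changes with the largest magnitude, and conflicting changes to the same parameter are resolved by sign instead of being averaged away. Everything is element-wise arithmetic on weights, which is consistent with the point above that merging only needs lightweight CPU compute.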

- 12. A modern model adaptation workflow
  - Pretrained model → Continuous pre-training (CPT) with Spectrum on an unlabeled domain dataset → Domain-adapted model
    - Merging instead of training: merge with a domain-adapted model
  - Domain-adapted model → Instruction fine-tuning (IFT) with LoRA on a Q&A dataset (EvolKit) → Instruction-tuned model
    - Merging instead of fine-tuning: merge with an instruction-tuned model
  - Instruction-tuned model → Alignment with DPO on a preference dataset → Aligned model
    - Merging instead of aligning: merge with an aligned model
  - Merging steps can be combined, e.g., merge with a domain-adapted and aligned model

- 13. Arcee Cloud https://app.arcee.ai + https://docs.arcee.ai

- 14. Arcee SuperNova 70B (September 10th)
  - https://blog.arcee.ai/meet-arcee-supernova-our-flagship-70b-model-alternative-to-openai/
  - https://blog.arcee.ai/arcee-supernova-training-pipeline-and-model-composition/
  - A distilled version of Llama-3.1-405B, merged with two other in-house Llama-3.1-70B models
  - Best 70B model available today
  - Outperforms Llama-3.1-405B, Claude-3.5 and GPT-4o on IFEval (https://arxiv.org/abs/2311.07911)
  - Chat with SuperNova (web)
  - Available on the AWS Marketplace

- 15. Llama-3.1-SuperNova-Lite 8B (September 10th)
  - https://huggingface.co/arcee-ai/Llama-3.1-SuperNova-Lite
  - A distilled version of Llama-3.1-405B
  - Best 8B model available today
  - #1 on the Hugging Face Open LLM leaderboard
  - Chat with Llama SuperNova Lite (ollama, Q5_K_S)
  - SuperNova Lite on Inferentia2
  - SuperNova Lite on Graviton4

- 16. Summing things up
  - No model rules them all: find the most appropriate one for each use case
  - Small, tailored open models are the way to go
  - New training and fine-tuning techniques are changing the model adaptation game
  - Visit arcee.ai to learn how you can build yours with Arcee Cloud (SaaS) or Arcee Enterprise (VPC deployment)
  - https://arcee.ai/blog
  - https://huggingface.co/arcee-ai
  - https://github.com/arcee-ai/aws-samples
  - https://youtube.com/c/juliensimonfr
  - Julien Simon, Chief Evangelist, Arcee AI