[Hanoi-August 13] Tech Talk on Caching Solutions

- 2. What is caching
  - We can win or lose projects and customers on response time. Caching is one of the most important things to consider for improving performance, and it can be applied to projects easily.
  - Caching usually means saving frequently used resources in an area that is quick and easy to access.
  - Caching can greatly improve performance and save CPU, network bandwidth, and disk access.
  - A cache is usually not permanent storage. It should provide very fast access to resources and has limited capacity, like RAM.
  - A cache is volatile storage. Cached data can be evicted at any time if the machine runs short of memory, so always check for null when reading from the cache.
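
The "always check for null" rule is the core of the cache-aside read path. Below is a minimal Python sketch of it, assuming a simple in-process dictionary with TTL expiry stands in for the real cache. `SimpleCache` and `get_or_load` are hypothetical names used only for illustration.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class SimpleCache:
    """Tiny in-memory cache; an entry may vanish at any time (here: on expiry or evict)."""

    def __init__(self) -> None:
        self._items: Dict[str, Tuple[object, float]] = {}

    def get(self, key: str) -> Optional[object]:
        entry = self._items.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if time.monotonic() >= expires_at:
            # Expired: behave exactly as if the entry had been evicted.
            del self._items[key]
            return None
        return value

    def set(self, key: str, value: object, ttl_seconds: float) -> None:
        self._items[key] = (value, time.monotonic() + ttl_seconds)

    def evict(self, key: str) -> None:
        # Simulates the cache dropping an entry under memory pressure.
        self._items.pop(key, None)


def get_or_load(cache: SimpleCache, key: str, loader: Callable[[], object],
                ttl_seconds: float = 60.0) -> object:
    value = cache.get(key)
    if value is None:  # always check: the cache may have dropped the entry
        value = loader()
        cache.set(key, value, ttl_seconds)
    return value
```

A loader that legitimately returns `None` would be re-run on every call; real code often caches a sentinel value for "not found" to avoid that.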

- 3. Caching discussion
  - Server-side caching:
    - Introduction to popular data caching methods
    - A few patterns: cache cloning and dependency tree
    - Caching in ORM frameworks
    - Caching with load balancing and scalability
    - Output caching
    - Caching with high concurrency
  - Downstream caching:
    - CDN caching
    - Browser and proxy caching

- 4. Introduction to popular data caching methods
  - There are different ways of storing data for quick and easy access: sessions, static variables, application state, Http cache, and distributed cache.
  - Static variables and application state live in a global context. They can cause memory leaks if not managed well, and they can increase lock contention, which decreases throughput.
  - Sessions (in-proc mode) can only store per-user data and have a limited lifetime (the session timeout).
  - Http cache supports cache dependencies (SQL, file, and cache key dependencies), absolute expiration, sliding expiration, and a cache invalidation callback. It is a local cache: super fast, but difficult to grow very large. The Microsoft Enterprise Library Caching Application Block is a local cache similar to the Http cache, but it can also be used in Windows Forms applications.
  - Distributed cache can be shared by multiple servers. It is slower than a local cache, but it can be designed to grow very large (e.g. Memcached).
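
Absolute and sliding expiration behave differently. An absolute deadline never moves, while a sliding window is renewed on every hit. The Python sketch below is a hypothetical illustration of the two policies, not the Http cache API. It assumes an injectable clock so the behavior can be shown without real waiting.

```python
from typing import Callable, Dict, Optional


class _Entry:
    def __init__(self, value: object, now: float,
                 absolute: Optional[float], sliding: Optional[float]) -> None:
        self.value = value
        # Absolute: fixed deadline measured from insertion time.
        self.absolute_deadline = now + absolute if absolute is not None else None
        # Sliding: entry expires after `sliding` seconds without any access.
        self.sliding = sliding
        self.last_access = now


class ExpiringCache:
    """Hypothetical local cache supporting absolute and sliding expiration."""

    def __init__(self, clock: Callable[[], float]) -> None:
        self._clock = clock
        self._items: Dict[str, _Entry] = {}

    def set(self, key: str, value: object,
            absolute: Optional[float] = None, sliding: Optional[float] = None) -> None:
        self._items[key] = _Entry(value, self._clock(), absolute, sliding)

    def get(self, key: str) -> Optional[object]:
        entry = self._items.get(key)
        if entry is None:
            return None
        now = self._clock()
        expired_absolute = (entry.absolute_deadline is not None
                            and now >= entry.absolute_deadline)
        expired_sliding = (entry.sliding is not None
                           and now - entry.last_access >= entry.sliding)
        if expired_absolute or expired_sliding:
            del self._items[key]
            return None
        entry.last_access = now  # each hit pushes the sliding deadline forward
        return entry.value
```

With a fake clock, an entry with `sliding=5` survives as long as it is read at least every 5 seconds. An entry with `absolute=10` disappears at the 10-second mark no matter how often it is read.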

- 5. A few patterns: cache cloning and dependency tree
  - Cache cloning pattern:
    - We need to prevent other threads from seeing the changes while we are editing an object in memory.
    - We should clone the object in the cache to create a new object for the editing user to edit.
    - This cloning pattern needs to be built into your business object models.
  - (Diagram: a writing request creates a writable clone of the cached object.)
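
The cloning pattern can be sketched in Python with `copy.deepcopy`. Readers share the cached instance, and a writer edits a private deep copy and swaps it in when done. `CloningCache` and `Order` are hypothetical names, and deep copying is only one way to clone. Real business objects may need a hand-written clone method.

```python
import copy
import threading
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Order:
    order_id: int
    status: str
    lines: List[str] = field(default_factory=list)


class CloningCache:
    def __init__(self) -> None:
        self._items: Dict[str, object] = {}
        self._lock = threading.Lock()

    def get_readonly(self, key: str) -> Optional[object]:
        # Shared instance: callers must treat it as read-only.
        with self._lock:
            return self._items.get(key)

    def get_writable(self, key: str) -> Optional[object]:
        # Deep copy, so edits stay invisible to other threads until committed.
        with self._lock:
            obj = self._items.get(key)
        return copy.deepcopy(obj) if obj is not None else None

    def commit(self, key: str, obj: object) -> None:
        # Swap the edited clone in as the new cached object.
        with self._lock:
            self._items[key] = obj
```

After `commit`, the writer should stop mutating its clone, because that clone is now the shared, read-only instance.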

- 6. A few patterns: cache cloning and dependency tree
  - Cache dependency, and how to build a hierarchical cache key system:
    - Http cache supports caching an object under its own key while making it depend on another cache key. When that cache key is cleared, the object is also removed from the cache.
    - Create a master key "Master" with an empty object, then create "Sub-master1" and "Sub-master2" with empty objects, both depending on the "Master" key. Then cache some objects that depend on "Sub-master1" and some other objects that depend on "Sub-master2". Clearing "Sub-master1" removes only its objects, while clearing "Master" removes everything below it.
  - (Diagram: a hierarchy of the Master cache key, Sub master 1 to 4, and cache objects 1 to n depending on the sub masters.)
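
The hierarchical key idea comes down to cascading removal along dependency edges. The Python sketch below imitates key dependencies with a hypothetical `DependencyCache`. It is not the Http cache API. One assumption to note is that this sketch rejects a dependency on a key that is not cached, which is a design choice made here.

```python
from typing import Dict, Optional, Set


class DependencyCache:
    """Hypothetical local cache where removing a key cascades to its dependents."""

    def __init__(self) -> None:
        self._values: Dict[str, object] = {}
        self._parent: Dict[str, str] = {}           # child key -> key it depends on
        self._dependents: Dict[str, Set[str]] = {}  # parent key -> dependent keys

    def set(self, key: str, value: object, depends_on: Optional[str] = None) -> None:
        if depends_on is not None and depends_on not in self._values:
            raise KeyError(f"dependency {depends_on!r} is not cached")
        self._detach(key)
        self._values[key] = value
        if depends_on is not None:
            self._parent[key] = depends_on
            self._dependents.setdefault(depends_on, set()).add(key)

    def get(self, key: str) -> Optional[object]:
        return self._values.get(key)

    def remove(self, key: str) -> None:
        # Remove the key itself, then everything that (transitively) depends on it.
        self._detach(key)
        self._values.pop(key, None)
        for child in list(self._dependents.pop(key, set())):
            self.remove(child)

    def _detach(self, key: str) -> None:
        # Forget the link from this key's parent, if any.
        parent = self._parent.pop(key, None)
        if parent is not None:
            self._dependents.get(parent, set()).discard(key)
```

Usage mirrors the slide. Build `Master`, then `Sub-master1` and `Sub-master2` depending on it, then the data objects under each sub master. `remove("Sub-master1")` clears one branch, and `remove("Master")` clears the whole tree.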

- 7. Caching with ORM frameworks
  - Most ORM frameworks (Entity Framework, LINQ to SQL, NHibernate, etc.) have a first-level cache, but it is problematic in distributed applications, where the context is often out of scope.
  - Caching objects outside the ORM layer (a level 2 cache) so that they work in a distributed environment makes the objects hard to manipulate, because they are not associated with any context. Level 2 cache problems in ORMs: http://queue.acm.org/detail.cfm?id=1394141
  - There was an open-source project to develop a level 2 cache layer for Entity Framework: http://code.msdn.microsoft.com/EFProviderWrappers
  - That solution works quite close to the DB layer, so we have to analyze SQL queries, commands, and tables to build cache keys. It doesn't cache queries with output parameters, and it offers only limited control over cache policy.
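
To show what "analyze SQL queries, commands and tables to build cache keys" involves, here is a hypothetical Python sketch of a query-result cache that works near the DB layer. It is not the EFProviderWrappers code. It assumes the caller passes the list of tables a query reads, where a real wrapper would have to parse them out of the SQL. Keys hash the whitespace-normalized SQL plus its parameters, and any write to a table evicts every cached result that read it.

```python
import hashlib
import json
from typing import Callable, Dict, List, Set


class QueryResultCache:
    def __init__(self) -> None:
        self._results: Dict[str, list] = {}
        self._by_table: Dict[str, Set[str]] = {}  # table name -> cache keys reading it

    @staticmethod
    def make_key(sql: str, params: dict) -> str:
        # Collapse whitespace only; changing case could alter string literals.
        normalized = " ".join(sql.split())
        payload = json.dumps([normalized, params], sort_keys=True, default=str)
        return hashlib.sha256(payload.encode()).hexdigest()

    def query(self, sql: str, params: dict, tables: List[str],
              run: Callable[[str, dict], list]) -> list:
        key = self.make_key(sql, params)
        if key in self._results:
            return self._results[key]
        rows = run(sql, params)
        self._results[key] = rows
        for table in tables:
            self._by_table.setdefault(table.lower(), set()).add(key)
        return rows

    def on_write(self, table: str) -> None:
        # Any INSERT/UPDATE/DELETE on the table invalidates results that read it.
        for key in self._by_table.pop(table.lower(), set()):
            self._results.pop(key, None)
```

Table-level invalidation is coarse. A single-row update evicts every cached query on that table, which is one reason the slide calls cache policy control limited at this layer.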

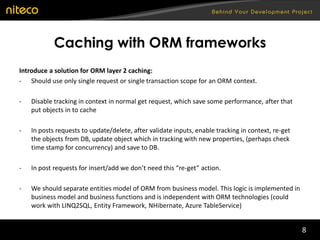

- 8. Caching with ORM frameworks
  - A proposed solution for ORM level 2 caching:
    - Use only a single request or a single transaction scope for an ORM context.
    - Disable tracking in the context for normal GET requests, which saves some performance, then put the objects into the cache.
    - In POST requests that update or delete, after validating the inputs, enable tracking in the context, re-get the objects from the DB, update the tracked objects with the new properties (perhaps checking a timestamp for concurrency), and save to the DB.
    - In POST requests that insert or add, we don't need this "re-get" step.
    - Separate the ORM's entity model from the business model. This logic is implemented in the business model and business functions and is independent of the ORM technology (it could work with LINQ to SQL, Entity Framework, NHibernate, or Azure Table Service).
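
The read and update flow above can be sketched without any real ORM. In the hypothetical Python sketch below, a plain dict stands in for the database, and a `version` field stands in for the row timestamp. GET reads cache detached business objects. An update re-gets the current row, checks the version, saves, and evicts the stale cached copy.

```python
from dataclasses import dataclass, replace
from typing import Dict


@dataclass(frozen=True)
class Product:           # business model, independent of any ORM entity class
    product_id: int
    name: str
    price: float
    version: int         # stands in for a row timestamp / rowversion column


class ConcurrencyError(Exception):
    pass


class ProductService:
    """Hypothetical service; `db` is a dict standing in for the ORM and database."""

    def __init__(self, db: Dict[int, Product]) -> None:
        self._db = db
        self._cache: Dict[int, Product] = {}

    def get(self, product_id: int) -> Product:
        # GET: read without tracking, then cache the detached object.
        cached = self._cache.get(product_id)
        if cached is not None:
            return cached
        product = self._db[product_id]
        self._cache[product_id] = product
        return product

    def update_price(self, product_id: int, new_price: float,
                     expected_version: int) -> Product:
        # POST update: re-get the current row from the DB, never from the cache...
        current = self._db[product_id]
        # ...check the version for optimistic concurrency...
        if current.version != expected_version:
            raise ConcurrencyError(f"product {product_id} was modified")
        # ...apply the new properties, save, and drop the stale cached copy.
        updated = replace(current, price=new_price, version=current.version + 1)
        self._db[product_id] = updated
        self._cache.pop(product_id, None)
        return updated
```

Because `Product` knows nothing about the storage layer, the same service logic could sit on top of any ORM, as the slide suggests.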

- 9. Caching with load balancing and scalability
  - In a load-balanced environment there are many servers, so where do we put the cache? If every server has its own local cache, how do we clear and update it? And what if we need to grow the cache capacity to large or very large?
  - Synchronized local cache:
    - Provide a cache wrapper layer over a good local cache option such as Http cache.
    - In this wrapper, provide the infrastructure to connect all servers together, so that when one server clears a cache key, it sends this message to the others.
    - WCF (using TCP or UDP protocols) or web services can be used as the communication infrastructure.
    - This works quite well in big configurations but can't grow very large.
  - (Diagram: web apps, each with its own local cache, connected to each other by communication channels.)
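
Here is a minimal Python sketch of the synchronized local cache wrapper. The `InvalidationBus` is a hypothetical in-process stand-in for the WCF or web-service channel. It simply calls each peer directly, where a real deployment would send a network message to every server.

```python
from typing import Dict, List, Optional


class InvalidationBus:
    """Stand-in for the network channel (WCF, web services, ...) between servers."""

    def __init__(self) -> None:
        self._nodes: List["SyncedLocalCache"] = []

    def join(self, node: "SyncedLocalCache") -> None:
        self._nodes.append(node)

    def broadcast_clear(self, sender: "SyncedLocalCache", key: str) -> None:
        for node in self._nodes:
            if node is not sender:
                node.clear_local(key)


class SyncedLocalCache:
    """Hypothetical wrapper around one server's local cache."""

    def __init__(self, name: str, bus: InvalidationBus) -> None:
        self.name = name
        self._items: Dict[str, object] = {}
        self._bus = bus
        bus.join(self)

    def get(self, key: str) -> Optional[object]:
        return self._items.get(key)

    def set(self, key: str, value: object) -> None:
        self._items[key] = value

    def clear(self, key: str) -> None:
        # Clear locally, then tell every other server to do the same.
        self.clear_local(key)
        self._bus.broadcast_clear(self, key)

    def clear_local(self, key: str) -> None:
        self._items.pop(key, None)
```

Only invalidations travel between servers, and each server reloads its own copy on the next miss. That keeps traffic low, but every server still holds the full working set, which is why this approach can't grow very large.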

- 10. Caching with load balancing and scalability
  - Distributed cache:
    - First variant: cache data is stored on separate, dedicated cache server(s).
    - Second variant: cache data is distributed among the servers.
    - Both configurations are designed to grow very large. The second variant in particular adds more cache capacity and CPU power whenever a new server is added.
    - A very successful and common implementation of a distributed cache is Memcached: http://memcached.org/
  - (Diagram: web applications, each using a Memcached client to reach the Memcached servers.)
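
Memcached servers don't coordinate with each other. The client decides which server owns each key, and consistent hashing is a common choice because adding a server moves only a fraction of the keys. The Python sketch below shows the technique with a hypothetical `HashRing` class. It is not any specific Memcached client's algorithm, and the server names are made up.

```python
import bisect
import hashlib
from typing import Iterable, List, Tuple


class HashRing:
    """Consistent hashing ring with virtual nodes; needs at least one server."""

    def __init__(self, servers: Iterable[str], replicas: int = 100) -> None:
        self._replicas = replicas
        self._ring: List[Tuple[int, str]] = []  # sorted (point, server) pairs
        for server in servers:
            self.add(server)

    @staticmethod
    def _hash(value: str) -> int:
        return int.from_bytes(hashlib.sha256(value.encode()).digest()[:8], "big")

    def add(self, server: str) -> None:
        # Several virtual points per server spread keys more evenly.
        for i in range(self._replicas):
            bisect.insort(self._ring, (self._hash(f"{server}#{i}"), server))

    def server_for(self, key: str) -> str:
        # Walk clockwise to the first point at or after the key's hash (wrap around).
        h = self._hash(key)
        idx = bisect.bisect_left(self._ring, (h, ""))
        if idx == len(self._ring):
            idx = 0
        return self._ring[idx][1]
```

When a fourth server joins, every key whose owner changes moves to the new server, and all other keys stay where they were. With naive `hash(key) % n` routing, most keys would move instead.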

- 11. Caching with load balancing and scalability
  - Scale up: increase your server hardware configuration (more CPU, RAM, and disk space) to get more power. This is expensive: for example, paying 4x as much may only yield 2x the performance.
  - Scale out: adding more server boxes to the cluster should increase system power linearly.
  - It's usually hard to scale out databases:
    - Sharding: we can split our data across many database servers, but the algorithms to retrieve and save data become much more complex, and transactional integrity between shards is difficult.
    - NoSQL: not an option when we need OLTP, immediate consistency, and real-time analytics.
    - SQL replication: usually for backup only. It can share the read load if we can deliver outdated data to end users.

- 12. Caching with load balancing and scalability
  - Memcached greatly reduces database reads. To further reduce the database load from analytical and search queries, we can add a Solr slave on each server box, with the Solr master on the database server.
  - We can consider a "cache first, save later" strategy for non-critical data.
  - (Diagram: three web servers, each running Memcached and a Solr slave, plus a database server running the Solr master.)
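
"Cache first, save later" is a write-behind strategy. Writes land in the cache immediately and are persisted in batches later. The Python sketch below uses a hypothetical `WriteBehindStore`, with a dict standing in for the database. A timer or background worker is assumed to call `flush()`.

```python
from typing import Dict, List, Optional, Tuple


class WriteBehindStore:
    """Writes go to the cache immediately and to the database later, in batches."""

    def __init__(self, db: Dict[str, object]) -> None:
        self._db = db                                # stand-in for the real database
        self._cache: Dict[str, object] = {}
        self._pending: List[Tuple[str, object]] = []

    def save(self, key: str, value: object) -> None:
        self._cache[key] = value                     # readers see the new value at once
        self._pending.append((key, value))           # persisted later by flush()

    def get(self, key: str) -> Optional[object]:
        if key in self._cache:
            return self._cache[key]
        return self._db.get(key)

    def flush(self) -> int:
        # In practice this would run on a timer or a background worker.
        batch, self._pending = self._pending, []
        for key, value in batch:
            self._db[key] = value
        return len(batch)
```

Anything still pending when the process dies is lost, which is exactly why the slide limits this strategy to non-critical data such as counters or view statistics.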

- 13. Output caching
  - If our data doesn't change and our code logic doesn't change, then the final output (HTML pages) won't change either, so why not cache it?
  - Output caching usually means caching the final output of a request (HTML pages, XML content, JSON content, etc.) after all server processing is done. The cached content is then served very early, before most of the server processing starts.
  - Output caching kicks in at a very early stage and also very late in the life cycle of a request.
  - To implement output caching, we assign a filter stream to the HttpResponse.Filter property to tap into all content written to the response object, and we put the content into the output cache at step 19. Clearing the output cache can be a challenge.
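
The same early/late idea can be sketched outside ASP.NET. The Python WSGI middleware below is a swapped-in analogue of the `HttpResponse.Filter` approach, not the same mechanism. On a hit it answers before the app runs, and on a miss it taps the full response body after the app finishes. `OutputCacheMiddleware` is a hypothetical name, and it assumes the app returns its body as an iterable rather than calling the legacy `write()` callable.

```python
from typing import Dict, List, Tuple


class OutputCacheMiddleware:
    """Caches full 200 responses to GET requests, keyed by path and query string."""

    def __init__(self, app) -> None:
        self._app = app
        self._cache: Dict[str, Tuple[str, List[Tuple[str, str]], bytes]] = {}

    def __call__(self, environ, start_response):
        if environ.get("REQUEST_METHOD") != "GET":
            return self._app(environ, start_response)
        key = environ.get("PATH_INFO", "") + "?" + environ.get("QUERY_STRING", "")
        hit = self._cache.get(key)
        if hit is not None:
            # Early stage: serve stored output without running the application.
            status, headers, body = hit
            start_response(status, headers)
            return [body]

        captured = {}

        def capturing_start_response(status, headers, exc_info=None):
            captured["status"], captured["headers"] = status, list(headers)
            return start_response(status, headers, exc_info)

        # Late stage: collect everything the app produced, like a filter stream.
        result = self._app(environ, capturing_start_response)
        try:
            body = b"".join(result)
        finally:
            if hasattr(result, "close"):
                result.close()
        if captured.get("status", "").startswith("200"):
            self._cache[key] = (captured["status"], captured["headers"], body)
        return [body]

    def invalidate(self, path: str, query: str = "") -> None:
        # Clearing is explicit and per URL, which is why it can be a challenge.
        self._cache.pop(path + "?" + query, None)
```

Invalidation here is per exact URL. A data change that affects many pages means finding and clearing all of them, which is the difficulty the slide mentions.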

- 14. Caching under very high concurrency
  - Suppose it takes a long time, say 500 ms, to get a resource. If your web site has very high concurrency and the cache entry for that resource expires, many threads may start retrieving the resource at once, until the first request succeeds and adds it to the cache.
  - Consider locking with timeouts to avoid indefinite locking. Use cross-thread locks, and don't keep too many threads waiting.
  - Flow:
    1. The cache entry for a time-consuming resource expires, or the resource is updated.
    2. Only the first request is allowed to get the resource; other requests are locked.
    3. That request gets the resource, adds it to the cache, and releases the lock.
    4. Other requests must wait or are served the extended old cache entry.
    5. Subsequent requests get the new resource from the cache.
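
The flow above can be sketched with one lock per key. In the Python sketch below, `StampedeGuardedCache` is a hypothetical name. When a stale value exists, only one thread refreshes it and the others get the old value without waiting. When nothing is cached yet, the others wait, but only up to `lock_timeout`.

```python
import threading
import time
from typing import Dict, Tuple


class StampedeGuardedCache:
    def __init__(self, ttl: float, lock_timeout: float) -> None:
        self._ttl = ttl
        self._lock_timeout = lock_timeout
        self._items: Dict[str, Tuple[object, float]] = {}  # key -> (value, expires_at)
        self._key_locks: Dict[str, threading.Lock] = {}
        self._guard = threading.Lock()

    def _lock_for(self, key: str) -> threading.Lock:
        with self._guard:
            return self._key_locks.setdefault(key, threading.Lock())

    def get(self, key: str, loader):
        entry = self._items.get(key)
        if entry is not None and time.monotonic() < entry[1]:
            return entry[0]                              # fresh hit
        lock = self._lock_for(key)
        if entry is not None:
            # Stale entry: one thread refreshes, the others get the old value.
            if not lock.acquire(blocking=False):
                return entry[0]
        elif not lock.acquire(timeout=self._lock_timeout):
            # Nothing cached yet: wait, but never indefinitely.
            raise TimeoutError(f"timed out waiting for {key!r}")
        try:
            entry = self._items.get(key)
            if entry is not None and time.monotonic() < entry[1]:
                return entry[0]                          # refreshed while we waited
            value = loader()
            self._items[key] = (value, time.monotonic() + self._ttl)
            return value
        finally:
            lock.release()
```

If ten threads ask for the same missing key at once, the loader runs only once. If the loader raises, the lock is still released and any stale entry stays in place for the next attempt.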

- 15. Downstream caching
  - CDN networks: what they are and the benefits we can get
    - Content Delivery Networks are massive numbers of servers distributed across the globe that deliver our content more quickly to users anywhere.
    - Akamai, for example, has more than 100,000 servers and provides a connection one hop away for 70% of internet users.
    - They can deliver static and dynamic content, provide fallback sites, etc.
    - They may be the best defense against DoS attacks.
  - Browser and proxy caching:
    - Browsers can cache static files (JS, CSS, images), but also whole HTML pages and AJAX responses.
    - Browsers determine caching policy from response headers: Cache-Control, ETag, Last-Modified, and Expires.
    - Most proxy caches also work from response headers, like browsers, and some of them don't cache resources whose URI contains a query string.
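
To see how the headers drive browser caching, here is a framework-free Python sketch of the server side of a conditional GET. `respond` is a hypothetical helper, and deriving the ETag from a hash of the body is an assumption here. `Cache-Control: max-age` lets the browser reuse its copy without asking. Once that copy is stale, the browser sends `If-None-Match`, and the server can answer `304 Not Modified` with an empty body.

```python
import hashlib
from typing import Dict, List, Tuple


def respond(body: bytes, request_headers: Dict[str, str],
            max_age: int = 3600) -> Tuple[str, List[Tuple[str, str]], bytes]:
    """Return (status, headers, body) for a GET, honoring If-None-Match."""
    etag = '"' + hashlib.sha256(body).hexdigest()[:16] + '"'
    headers = [
        ("Cache-Control", f"public, max-age={max_age}"),
        ("ETag", etag),
    ]
    # If-None-Match may list several tags (weak ones prefixed with W/) or "*".
    raw = request_headers.get("If-None-Match", "")
    candidates = [t.strip().removeprefix("W/") for t in raw.split(",") if t.strip()]
    if "*" in candidates or etag in candidates:
        return "304 Not Modified", headers, b""
    return "200 OK", headers, body
```

A repeated request with the previous ETag costs only a small 304 response. If the content changes, its hash changes too, so the browser receives the new body with a 200.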

- 16. Conclusion
  - Cache what you can, wherever and whenever you can.
  - Email me for discussion: [email protected]