Tensorflow - Intro (2017)

- 1. TensorFlow 101. Alessio Tonioni. Computer Vision and image processing.

- 2. TensorFlow: open source software library for numerical computation using data flow graphs.
  - Graph: abstract pipeline of mathematical operations on tensors (multi-dimensional arrays). Each graph is composed of:
    - Nodes: operations on data.
    - Edges: multi-dimensional data arrays (tensors) passing between operations.
  - Links: Web Page, TensorFlow Playground, GitHub project page.

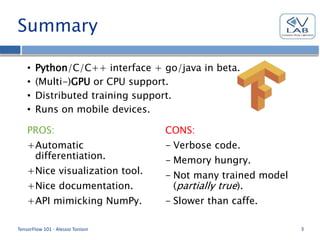

- 3. Summary
  - Pros: automatic differentiation; nice visualization tool; nice documentation; API mimicking NumPy.
  - Cons: verbose code; memory hungry; not many trained models (partially true); slower than Caffe.
  - Python/C/C++ interface, plus Go/Java in beta.
  - (Multi-)GPU or CPU support.
  - Distributed training support.
  - Runs on mobile devices.

- 4. Higher level API. TensorFlow programs can be quite verbose, with lots of low-level API calls. Higher level APIs built on top of TensorFlow can speed up the development of standard machine learning models. They are easy to use, but hard to debug if something goes wrong. Some popular frameworks:
  - TFLearn: specially designed for TensorFlow.
  - Keras: works on top of either Theano or TensorFlow.

- 5. Requirements & Installation. TensorFlow is available for both Python 2.7 and 3.3+ on Linux, Mac and, from version 0.12, Windows. GPU support requires an Nvidia GPU and the CUDA toolkit (>= 7.0) plus cuDNN (>= v3). The standard installation is available as a pip package (it automatically resolves all dependency issues):

      $ sudo apt install python3-pip python3-dev
      $ pip3 install tensorflow        (CPU only)
      $ pip3 install tensorflow-gpu    (CPU + GPU)

  If it is not working, or if you want to install from source, see the support page.

- 6. Program Structure. TensorFlow programs are usually structured in two well-separated phases (Hello_TensorFlow.ipynb):
  1. Construction phase: definition of the graph of operations that will be executed on the input tensors. Only definition, no numerical values are associated with tensors or operations yet. Everything is defined through TensorFlow API calls.
  2. Execution phase: given some inputs, evaluate an operation to get its real numerical value. The framework automatically infers which previous operations must be executed to obtain the required outputs. Execution happens through a TensorFlow 'session' object.
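
The split between the two phases can be illustrated without TensorFlow at all. The following is a minimal sketch in plain Python, not the TensorFlow API: the `Node` class and the `run` helper are hypothetical names introduced only for illustration. It builds a small graph first, then evaluates one output and records which operations were actually executed.

```python
class Node:
    """A graph node: an operation plus the nodes it takes as inputs."""
    def __init__(self, name, fn=None, inputs=()):
        self.name, self.fn, self.inputs = name, fn, tuple(inputs)

def run(node, feed, log):
    """Evaluate `node`, recursively evaluating only the nodes it depends on."""
    if node in feed:                      # value supplied from outside the graph
        return feed[node]
    args = [run(i, feed, log) for i in node.inputs]
    log.append(node.name)                 # record that this op was executed
    return node.fn(*args)

# Construction phase: only structure is defined, no numbers yet.
x = Node("x")
w = Node("w")
prod = Node("mul", lambda a, b: a * b, [x, w])
total = Node("add", lambda a, b: a + b, [prod, x])
unused = Node("neg", lambda a: -a, [total])   # never requested, never executed

# Execution phase: request one output and feed the inputs.
executed = []
result = run(total, {x: 3, w: 4}, executed)
```

Here `unused` is part of the graph but is never evaluated, because the requested output does not depend on it.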

- 7. TensorFlow Graph. Figure: graph computing y = X * W + b, where X has shape [32,128], W has shape [128,10], the product X * W has shape [32,10] and b has shape [10].

- 8. Tensors. Formally: multi-linear mapping from vector spaces to the real numbers, f: V × … × V → ℝ. Practically: multi-dimensional array of numbers. In TensorFlow almost all the API functions take tensors as inputs and return tensors as output. Almost everything can be mapped into a tensor: scalars, vectors, matrices, strings, files… Every tensor inside the computational graph must have a unique name, either user defined or auto-generated.

- 9. Tensors. Three main types of tensors in TensorFlow (tensors_type.ipynb). tf.constant(): tensor with a constant and immutable value. After creation it has a shape, a datatype and a value.

      # graph definition
      a = tf.constant([[1,0],[0,1]], name="const_1")
      …
      # graph evaluation inside a session
      # constants are automatically initialized
      a_val = sess.run(a)
      # a_val is a numpy array with shape=(2,2)

- 10. Tensors. tf.Variable(): tensor with variable (and trainable) values. At creation time it can have a shape, a data type and an initial value. The value can be changed only by other TensorFlow ops. All variables must be initialized inside a session before use.

      # graph definition
      a = tf.Variable([[1,0],[0,1]], trainable=False, name="var_1")
      …
      # graph evaluation inside a session
      # manual initialization
      sess.run(tf.global_variables_initializer())
      a_val = sess.run(a)
      # a_val is a numpy array with shape=(2,2)

- 11. Tensors. tf.placeholder(): dummy tensor node. Similar to variables, it has a shape and a datatype, but it is not trainable and it has no initial value. A placeholder is a bridge between Python and TensorFlow: it only takes a value when it is populated inside a session using the feed_dict argument of the session.run() method.

      # graph definition
      a = tf.placeholder(tf.int64, shape=(2,2), name="placeholder_1")
      …
      # graph evaluation inside a session
      # a takes the value passed by the feed_dict
      a_val = sess.run(a, feed_dict={a: [[1,0],[0,1]]})
      # a_val is a numpy array with shape=(2,2)

- 12. Tensors and operations allocation. The default behavior of TensorFlow is to create everything inside the GPU memory (if compiled with GPU support). However, it is possible to manually specify where each op and tensor should live with tf.device().

      with tf.device("/cpu:0"):
          a = tf.Variable(…)  # a will be allocated in CPU memory
      with tf.device("/gpu:0"):
          b = tf.Variable(…)  # b will be allocated on CUDA device 0 (default)
      with tf.device("/gpu:1"):
          c = tf.Variable(…)  # c will be allocated on CUDA device 1

- 13. Tensors and operations allocation. Some tips:
  - Device placement of the tensors can be logged by creating a session with log_device_placement set to True.

        sess = tf.Session(config=tf.ConfigProto(log_device_placement=True))

  - Some I/O operations cannot be executed on the GPU. Always create a session with allow_soft_placement set to True to let the system automatically move those ops to the CPU.

        sess = tf.Session(config=tf.ConfigProto(allow_soft_placement=True))

  - Try to reduce unnecessary swaps between CPU and GPU inside the graph, otherwise the execution time will be heavily penalized.

- 14. Variable Scopes. tf.variable_scope(): namespacing mechanism to ease the definition of complicated models. All tensors and operations defined inside a scope will have their names prefixed with the scope name to create unique identifiers inside the graph.

      a = tf.Variable(1, name="weights")
      # a variable name: weights
      with tf.variable_scope("conv_1") as scope:
          …
          b = tf.Variable(1, name="weights")
          # b variable name: conv_1/weights

- 15. Variable Scopes. Scopes give a nice visualization inside TensorBoard (we will talk about visualization tools later…).

- 16. Variable Scopes + get_variable. Scopes are important for sharing variables between models:
  - Use the helper function tf.get_variable() to ease the creation of variables with a certain shape, datatype, name, initializer and regularizer.
  - If reuse=False at scope definition, this method will create a new variable.
  - If reuse=True at scope definition, the method will search for an already created variable with the same name inside the scope; if it cannot be found it will raise a ValueError.
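
The reuse rules above can be mimicked with a dictionary. This is only a sketch in plain Python, not the TensorFlow implementation: `_variables` and this simplified `get_variable` signature are hypothetical and exist only to show the create-or-fetch logic and the ValueError on a missing variable.

```python
_variables = {}   # hypothetical global store: full name -> value

def get_variable(scope, name, reuse, initial=0.0):
    """Create `scope/name`, or fetch the existing one when reuse is True."""
    full_name = scope + "/" + name
    if reuse:
        if full_name not in _variables:
            raise ValueError("variable %s does not exist" % full_name)
        return full_name, _variables[full_name]
    if full_name in _variables:
        raise ValueError("variable %s already exists" % full_name)
    _variables[full_name] = initial
    return full_name, initial

# First model creates the variable, second model shares it.
name, _ = get_variable("conv_1", "weights", reuse=False, initial=0.5)
shared_name, shared_value = get_variable("conv_1", "weights", reuse=True)

# Asking to reuse a variable that was never created fails.
try:
    get_variable("conv_2", "weights", reuse=True)
    missing_raises = False
except ValueError:
    missing_raises = True
```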

- 17. Inputs. How can we input external data into our models (e.g. batches of examples)?
  - Placeholders: the examples and batches are manually read and passed to the model using placeholders and feed_dict. Easier to handle, but can become slow (data must be copied from the Python environment to the TensorFlow one all the time).
  - Native I/O operations: all the operations to read and decode batches of samples are implemented inside the TensorFlow graph using queues. Can be counterintuitive, but grants a huge boost in performance (everything lives inside the TensorFlow environment).
  - Dataset API: new and partially simplified interface to handle data inputs to the model; it can sometimes ease the implementation.

- 18. Input Queues. Mechanism used in TensorFlow for asynchronous computation.
  1. Create a FIFO queue to hold the examples.
  2. Refill the queue using multiple threads, where each one adds one or more examples through the enqueue operation.
  3. Read a batch of examples using the dequeue-many operation.
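
The three steps can be sketched with the Python standard library. This is an analogy in plain Python, not TensorFlow's queue ops: `producer` and `dequeue_many` are hypothetical helpers, and the integer examples stand in for decoded samples.

```python
import queue
import threading

examples = queue.Queue(maxsize=64)   # step 1: FIFO queue holding the examples

def producer(start, count):
    """Enqueue `count` fake examples; stands in for reading and decoding."""
    for i in range(start, start + count):
        examples.put(i)

def dequeue_many(n):
    """Block until `n` examples are available and return them as a batch."""
    return [examples.get() for _ in range(n)]

# Step 2: several threads refill the queue concurrently.
threads = [threading.Thread(target=producer, args=(k * 8, 8)) for k in range(4)]
for t in threads:
    t.start()

# Step 3: read batches of examples.
batch = dequeue_many(16)
rest = dequeue_many(16)
for t in threads:
    t.join()
```

The order of the examples inside each batch depends on thread scheduling, which is why only the batch size and the overall content are predictable.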

- 19. Input Queues. Seems too complicated? Use tf.train.batch() or tf.train.shuffle_batch() instead! Both functions take care of creating and handling an input queue for the examples. Moreover, they automatically spawn multiple threads to keep the queue always full.

      image = tf.get_variable(…)  # tensor containing a single image
      label = tf.get_variable(…)  # tensor with the label associated to image
      # create two mini-batches of 16 examples, one with images and one with labels
      image_batch, label_batch = tf.train.shuffle_batch(
          [image, label], batch_size=16, num_threads=16,
          capacity=5000, min_after_dequeue=1000)

- 20. Reading data. How can we actually read input data from disk? (Inputs_example.ipynb)
  - Feeding: use any external library and inject data inside the graph through placeholders.
  - Image files: use ad-hoc ops to read and decode data directly from the filesystem, e.g. tf.WholeFileReader() + tf.image.decode_image().
  - Binary files: data encoded directly in binary format, read using tf.FixedLengthRecordReader().
  - TFRecord files: recommended format for TensorFlow, information encoded in binary files using protocol buffers. Create files using tf.python_io.TFRecordWriter(), read using tf.TFRecordReader() + tf.parse_example().

- 21. Dataset API (new). Simplifies the creation of input queues, removing the need for explicit initialization of the input threads and allowing the creation of reinitializable inputs. Main components: tf.contrib.data.Dataset and tf.contrib.data.Iterator.

      image = tf.get_variable(…)  # tensor containing a single image
      label = tf.get_variable(…)  # tensor with the label associated to image
      # create a dataset object to read batches of images and labels
      dataset = tf.contrib.data.Dataset.from_tensor_slices((image, label))
      dataset = dataset.batch(16)
      iterator = dataset.make_one_shot_iterator()
      image_batch, label_batch = iterator.get_next()

- 22. Dataset API: Iterator. Different types of iterator can be used:
  - One-shot: only iterates once through the dataset, no need for initialization.
  - Initializable: before using the iterator you should run the iterator.initializer op inside a session; with this trick it is possible to reset the dataset when needed.
  - Reinitializable: used to switch between different datasets.
  - Feedable: used together with placeholders to select which iterator to use in each call to session.run().

- 23. Reading Data. Some useful tips about reading inputs:
  - Performance boost if everything is implemented using TensorFlow symbolic ops.
  - Using binary files or protobuf reduces the number of disk accesses, improving efficiency.
  - Massive performance boost if your whole dataset fits into GPU memory (unlikely to happen).
  - If you have at least one queue, always remember to start the input reader threads inside a session before evaluating any op, otherwise the program will become unresponsive.

        coord = tf.train.Coordinator()
        threads = tf.train.start_queue_runners(sess=session, coord=coord)

- 24. Data augmentation. Some ops can be used to easily augment the number of training images; they take a tensor as input and return a randomly distorted version of it:
  - tf.image.random_flip_up_down()
  - tf.image.random_flip_left_right()
  - tf.random_crop()
  - tf.image.random_contrast()
  - tf.image.random_hue()
  - tf.image.random_saturation()
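
What "randomly distorted" means for one of these ops can be shown on a tiny image stored as nested lists. This is a plain Python sketch of the idea behind a left-right flip, not the TensorFlow op; the function below and the fixed seed are assumptions made for illustration.

```python
import random

def random_flip_left_right(image, rng):
    """Mirror every row with probability 0.5; `image` is a list of rows."""
    if rng.random() < 0.5:
        return [row[::-1] for row in image]
    return image

rng = random.Random(0)   # fixed seed only to make the sketch repeatable
image = [[1, 2, 3], [4, 5, 6]]
flipped = [[3, 2, 1], [6, 5, 4]]

# Each call returns either the original image or its mirrored version.
augmented = [random_flip_left_right(image, rng) for _ in range(8)]
```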

- 25. Operations
  - All the basic NN operations are implemented in the TensorFlow API as nodes in the graph; they take one or more tensors as inputs and produce one or more tensors as output.
  - The framework automatically handles everything necessary to implement the forward and backward passes. Don't worry (too much) about derivatives as long as you use standard TensorFlow ops.
  - It is straightforward to re-implement famous CNN models; the hard part is training them to get the same results.

- 26. Operations: Convolution2D. tf.nn.conv2d(): standard convolution with bi-dimensional filters. It takes as input two 4D tensors, one for the mini-batch and one for the filters. tf.nn.bias_add(): adds a bias to the tensor taken as input, a special case of the more general tf.add() op.

      input = …    # 4D tensor [#batch_example, height, width, #channels]
      kernel = …   # 4D tensor [filter_height, filter_width, filter_depth (input channels), #filters]
      strides = …  # list of 4 ints, stride along each of the 4 input dimensions
      padding = …  # one of "SAME"/"VALID", enables or disables zero padding of the inputs
      bias = …     # 1D tensor with shape [#filters]
      conv = tf.nn.conv2d(input, kernel, strides, padding)
      conv = tf.nn.bias_add(conv, bias)
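
The effect of the padding and strides arguments on the spatial size of the output can be worked out by hand. The helper below is a plain Python sketch (the function name is hypothetical) of the usual TensorFlow sizing rule along one spatial dimension.

```python
import math

def conv_output_size(input_size, filter_size, stride, padding):
    """Spatial output size of a convolution along one dimension."""
    if padding == "SAME":    # zero padding added, only the stride shrinks the output
        return math.ceil(input_size / stride)
    if padding == "VALID":   # no padding, the filter must fit entirely inside the input
        return math.ceil((input_size - filter_size + 1) / stride)
    raise ValueError("padding must be 'SAME' or 'VALID'")

same = conv_output_size(28, 5, 1, "SAME")       # 28
valid = conv_output_size(28, 5, 1, "VALID")     # 24
strided = conv_output_size(28, 5, 2, "SAME")    # 14
```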

- 27. Operations: Deconvolution. tf.nn.conv2d_transpose(): deconvolution (more precisely, transposed convolution) with bi-dimensional filters. It takes as input two 4D tensors, one for the mini-batch and one for the filters.

      input = …         # 4D tensor [#batch_example, height, width, #channels]
      kernel = …        # 4D tensor [filter_height, filter_width, output_channels, in_channels]
      output_shape = …  # 1D tensor representing the output shape of the deconvolution op
      strides = …       # list of 4 ints, stride along each of the 4 input dimensions
      padding = …       # one of "SAME"/"VALID", enables or disables zero padding of the inputs
      bias = …          # 1D tensor with shape [#filters]
      deconv = tf.nn.conv2d_transpose(input, kernel, output_shape, strides, padding)

- 28. Operations: BatchNormalization. tf.contrib.layers.batch_norm: batch normalization layer with trainable parameters.

      input = …        # 4D tensor [#batch_example, height, width, #channels]
      is_training = …  # Boolean, True if the network is in training
      # apply batch norm to input
      normed = tf.contrib.layers.batch_norm(input, is_training=is_training)

- 29. Operations: fully connected. tf.matmul(): fully connected layers can be implemented as a matrix multiplication between the input values and the weights matrix, followed by bias addition.

      input = …    # 2D tensor [#batch size, #features]
      weights = …  # 2D tensor [#features, #nodes]
      bias = …     # 1D tensor [#nodes]
      fully_connected = tf.matmul(input, weights) + bias
      # operator overloading to add bias (syntactic sugar)
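
The shapes involved are easy to check on a small example. The following plain Python sketch (no TensorFlow; the `dense` helper and the numbers are made up for illustration) multiplies a [batch, features] input by a [features, nodes] weight matrix and adds a [nodes] bias to every row.

```python
def dense(inputs, weights, bias):
    """Compute inputs x weights + bias using plain lists."""
    return [
        [sum(x * w for x, w in zip(row, col)) + b
         for col, b in zip(zip(*weights), bias)]   # zip(*weights) yields columns
        for row in inputs
    ]

inputs = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]    # [batch=3, features=2]
weights = [[1.0, 0.0, -1.0], [0.5, 1.0, 2.0]]    # [features=2, nodes=3]
bias = [0.0, 1.0, 2.0]                            # [nodes=3]
outputs = dense(inputs, weights, bias)            # [batch=3, nodes=3]
```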

- 30. Operations: activations. tf.nn.relu(), tf.sigmoid(), tf.tanh(): each one implements an activation function; they take a tensor as input and apply the activation function to each element. (More activation functions are available in the framework, check the online API.)

      conv = …                    # result of the application of a convolution
      relu = tf.nn.relu(conv)     # activation after relu non-linearity
      tanh = tf.tanh(conv)        # activation after tanh non-linearity
      sigmoid = tf.sigmoid(conv)  # activation after sigmoid non-linearity

- 31. Operations: pooling. tf.nn.avg_pool(), tf.nn.max_pool(): pooling operations; they take a 4D tensor as input and perform spatial pooling according to the parameters passed as input.

      input = …    # 4D tensor with shape [batch_size, height, width, channels]
      k_size = …   # list of 4 ints with the dimensions of the pooling window
      strides = …  # list of 4 ints with the stride along each of the 4 input dimensions
      padding = …  # one of "SAME"/"VALID" (required argument)
      max_pooled = tf.nn.max_pool(input, k_size, strides, padding)
      avg_pooled = tf.nn.avg_pool(input, k_size, strides, padding)
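
To make the pooling window and stride concrete, here is a plain Python sketch of max pooling on a single-channel image stored as nested lists. It is not the TensorFlow op: the helper name is hypothetical and it only covers the case without padding.

```python
def max_pool_2d(image, k, stride):
    """Max pooling with a k x k window and no padding ('VALID' behaviour)."""
    rows = range(0, len(image) - k + 1, stride)
    cols = range(0, len(image[0]) - k + 1, stride)
    return [
        [max(image[r + i][c + j] for i in range(k) for j in range(k))
         for c in cols]
        for r in rows
    ]

image = [[1, 2, 5, 6],
         [3, 4, 7, 8],
         [9, 8, 1, 0],
         [7, 6, 3, 2]]
pooled = max_pool_2d(image, k=2, stride=2)   # [[4, 8], [9, 3]]
```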

- 32. Operations: predictions. tf.nn.softmax(): takes a tensor as input and applies the softmax operation across one dimension (default: last dimension).

      input = …  # multi-dimensional tensor
      softmaxed = tf.nn.softmax(input, dim=-1)  # softmax on the last dimension
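
For reference, the softmax of a single vector can be written in a few lines of plain Python. This is a sketch of the mathematical definition, not TensorFlow's implementation; subtracting the maximum is a common trick to keep the exponentials from overflowing.

```python
import math

def softmax(values):
    """Softmax over a list; subtracting the max avoids overflow in exp()."""
    shift = max(values)
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([1.0, 2.0, 3.0])        # positive values that sum to 1
stable = softmax([1000.0, 1000.0])      # equal logits give equal probabilities
```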

- 33. Loss functions
  - Inside TensorFlow a loss function is just an operation that produces a single value as output. Every operation can automatically be treated as a loss function. Behold the power of auto-differentiation!
  - The most common loss functions for classification and regression are already implemented in the framework.
  - Custom loss functions can be created and used easily if they combine already implemented TensorFlow operations.

- 34. Loss functions: cross_entropy. tf.nn.sigmoid_cross_entropy_with_logits(), tf.nn.softmax_cross_entropy_with_logits(): standard loss functions for discrete classification; they take as inputs the correct labels and the network output, apply sigmoid or softmax respectively, and compute the cross-entropy. Both functions require named arguments.

      labels = …  # tensor with one-hot encoding for a classification task
      logits = …  # unnormalized output of a neural network
      sigmoid_ce = tf.nn.sigmoid_cross_entropy_with_logits(labels=labels, logits=logits)
      softmax_ce = tf.nn.softmax_cross_entropy_with_logits(labels=labels, logits=logits)
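
The softmax variant can be spelled out for one example in plain Python. This is a sketch of the formula, not the TensorFlow kernel: it uses the identity log softmax(z_i) = z_i - log(sum_j exp(z_j)), and the function name and sample values are assumptions for illustration.

```python
import math

def softmax_cross_entropy(labels, logits):
    """Cross-entropy between one-hot `labels` and softmax(`logits`)."""
    shift = max(logits)   # keeps exp() from overflowing
    log_sum = shift + math.log(sum(math.exp(z - shift) for z in logits))
    # log softmax(z_i) = z_i - log(sum_j exp(z_j))
    return -sum(y * (z - log_sum) for y, z in zip(labels, logits))

confident = softmax_cross_entropy([0, 0, 1], [0.0, 0.0, 10.0])   # small loss
wrong = softmax_cross_entropy([1, 0, 0], [0.0, 0.0, 10.0])       # large loss
uniform = softmax_cross_entropy([0, 1], [0.0, 0.0])              # log(2)
```

The loss is small when the largest logit matches the correct class and grows when the network is confidently wrong.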

- 35. Optimization
  - Given a loss function, use one of the tf.train.Optimizer subclasses to train a network to minimize it.
  - Each subclass implements a minimize() method that accepts a node of the graph as input and takes care of both computing the gradients and applying them to the trainable variables of the graph.
  - If you want more control, combine calls to the compute_gradients() and apply_gradients() methods of the subclasses to obtain the same result.

- 36. Optimization. Different optimizers are available out of the box:
  - tf.train.GradientDescentOptimizer()
  - tf.train.MomentumOptimizer()
  - tf.train.FtrlOptimizer()
  - tf.train.AdagradOptimizer()
  - tf.train.AdadeltaOptimizer()
  - tf.train.RMSPropOptimizer()
  - tf.train.AdamOptimizer()

- 37. Optimization. After creation, all the optimizers can be used through the same interface. The minimize() method returns a meta node that, when evaluated inside a session, computes the gradients and applies them to update the model variables.

      loss = …           # value of the loss function, smaller is better
      learning_rate = …  # value of the learning rate, can be a tensor or a float
      global_step = …    # variable with the number of optimization steps executed;
                         # the optimizer automatically increments it after each evaluation
      train_op = tf.train.AdamOptimizer(learning_rate).minimize(
          loss, global_step=global_step)  # create train op
      …
      sess.run(train_op)  # perform one step of optimization on a single batch
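
What one evaluation of such a train op amounts to can be sketched with plain gradient descent on a one-parameter loss. This is plain Python, not TensorFlow: the gradient is written by hand instead of being derived automatically, and the two helper functions are hypothetical names loosely mirroring the compute/apply split described earlier.

```python
def loss(w):
    return (w - 3.0) ** 2          # toy loss with its minimum at w = 3

def compute_gradient(w):
    return 2.0 * (w - 3.0)         # derivative written by hand for this sketch

def apply_gradient(w, grad, learning_rate):
    return w - learning_rate * grad

w = 0.0
learning_rate = 0.1
global_step = 0
for _ in range(100):               # each iteration plays the role of sess.run(train_op)
    w = apply_gradient(w, compute_gradient(w), learning_rate)
    global_step += 1               # incremented once per optimization step
```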

- 38. Putting it all together. To train any machine learning model in TensorFlow:
  1. Create an input pipeline that loads samples from disk.
  2. Create the machine learning model.
  3. Create a loss function for the model.
  4. Create a minimizer that optimizes the loss function.
  5. Create a TensorFlow session.
  6. Run a loop evaluating the train_op until convergence.
  7. Save the model to disk.

  Steps 1 to 4 belong to the construction phase, steps 5 to 7 to the evaluation phase.

- 39. Save models. Once trained, it can be useful to save a model to disk for later reuse. In TensorFlow this can be implemented effortlessly using the tf.train.Saver() class.

      # create a saver to save and restore variables during graph definition
      saver = tf.train.Saver()
      …
      # inside a session use the .save() method to save the model to disk
      with tf.Session() as sess:
          …
          filename = 'models/final.ckpt'
          save_path = saver.save(sess, filename)  # save the current variable values

- 40. Restore Model. Restore a model saved to disk using the same tf.train.Saver() class.

      # create a saver to save and restore variables during graph definition
      saver = tf.train.Saver()
      …
      # inside a session use the .restore() method to restore the model from disk
      with tf.Session() as sess:
          filename = 'models/final.ckpt'
          saver.restore(sess, filename)
          …

- 41. A case study: MNIST. Let's try to put everything together and train a softmax regressor and a two-layer neural network to classify MNIST digits (mnist.ipynb).

- 42. TensorBoard: Visualizing Learning. TensorBoard is a suite of visualization tools completely integrated in TensorFlow. It allows the visualization of the computational graph and of a lot of useful statistics about the training process.

- 43. TensorBoard. TensorBoard operates on TensorFlow event files: protobuf files saved on disk that contain summary data about the nodes we have decided to monitor. To create such files and launch TensorBoard:
  1. Add some summary operations to the graph: meta nodes that create a suitable summary of their inputs.
  2. Create an op that collects all summary nodes with tf.summary.merge_all().
  3. Inside a session, evaluate the above operation to obtain a representation of the summaries.
  4. Save the representation to disk using tf.summary.FileWriter().

- 44. TensorBoard (continued)
  5. Start the backend from the command line:

        $ tensorboard --logdir="path/to/log/dir"

  6. Connect with a browser (Chrome/Firefox) to "localhost:6006".

- 45. A case study: Japanese faces
  - Let's build a small CNN model to classify a 40-category dataset of Japanese idol models.
  - Implementation of both training and evaluation steps with two graphs with shared variables.
  - Add summary operations for TensorBoard visualization.
  - Save a checkpoint of the model periodically.

- 46. Some additional material
  - TensorFlow tutorials
  - TensorFlow How-Tos
  - Awesome TensorFlow: curated list of TensorFlow experiments, libraries and projects.
  - Tensorflow-101: TensorFlow tutorials as Jupyter notebooks.
  - Tensorflow-models: official repository with example code for a lot of different deep learning models, with weights available.
