Trends in Systems and How to Get Efficient Performance

- 1. Trends in systems and how to get efficient performance. Martin Hilgeman, HPC Consultant. Internal Use - Confidential

- 2. The landscape is changing. “We are no longer in the general purpose era… the argument of tuning software for hardware is moot. Now, to get the best bang for the buck, you have to tune both.” (Kushagra Vaid, general manager of server engineering, Microsoft Cloud Solutions) https://www.nextplatform.com/2017/03/08/arm-amd-x86-server-chips-get-mainstream-lift-microsoft/amp/

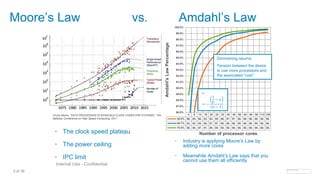

- 3. Moore’s Law vs. Amdahl’s Law
  - The clock speed plateau
  - The power ceiling
  - IPC limit
  - Industry is applying Moore’s Law by adding more cores
  - Meanwhile Amdahl’s Law says that you cannot use them all efficiently
  - Diminishing returns: tension between the desire to use more processors and the associated “cost”

  $$e = -\frac{\frac{1}{p} - n}{n - 1}$$

  [Chart: Amdahl's Law percentage (y-axis, 86% to 100%) versus number of processor cores (x-axis, 4 to 128), with three series labelled 50.0%, 66.7%, and 75.0%]

  Source: Chuck Moore, "DATA PROCESSING IN EXASCALE-CLASS COMPUTER SYSTEMS", The Salishan Conference on High Speed Computing, 2011
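The relation on this slide can be sketched in code. This is a minimal illustration, assuming that $n$ is the number of cores, $p$ the target parallel efficiency (speedup divided by $n$), and $e$ the parallel fraction of the code (the "Amdahl's Law percentage"). Under that reading the formula reproduces the first column of the chart (about 66.7%, 83.3%, and 88.9% at 4 cores). The function names are illustrative and not from the talk.

```cpp
#include <cassert>

// Amdahl's Law: speedup on n cores when a fraction f of the work is parallel.
double amdahl_speedup(double f, int n) {
    assert(n >= 1 && f >= 0.0 && f <= 1.0);
    return 1.0 / ((1.0 - f) + f / n);
}

// Parallel fraction needed to reach a target parallel efficiency
// (speedup / n) on n cores; obtained by solving Amdahl's Law for f.
double required_parallel_fraction(double efficiency, int n) {
    assert(n > 1 && efficiency > 0.0 && efficiency <= 1.0);
    return (n - 1.0 / efficiency) / (n - 1.0);
}
```

For instance, `required_parallel_fraction(0.5, 4)` evaluates to 2/3: even 50% efficiency on only 4 cores requires two thirds of the run time to be parallel, and the requirement climbs quickly as cores are added.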

- 4. Moore’s Law vs. Amdahl's Law: “Too Many Cooks in the Kitchen”. Industry is applying Moore’s Law by adding more cores. Meanwhile Amdahl’s Law says that you cannot use them all efficiently.

- 5. System trend over the years (1). [Timeline diagram: ~1970 - 2000; 2005, multi-core: TOCK]

- 6. System trend over the years (2). [Timeline diagram: 2007, integrated memory controller: TOCK; 2012, integrated PCIe controller: TOCK]

- 7. Future. [Diagram: integrated network fabric adapter: TOCK; SoC designs: TOCK]

- 8. Improving performance - what levels do we have?
  - Challenge: Sustain performance trajectory without massive increases in cost, power, real estate, and unreliability
  - Solutions: No single answer, must intelligently turn “Architectural Knobs”

  $$\underbrace{\text{Freq}}_{1} \times \underbrace{\frac{\text{cores}}{\text{socket}}}_{2} \times \underbrace{\#\text{sockets}}_{3} \times \underbrace{\frac{\text{inst or ops}}{\text{core} \times \text{clock}}}_{4} \times \underbrace{\text{Efficiency}}_{5}$$

  Terms 1 to 4 are hardware performance, term 5 is software performance, and the full product is what you really get.
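The product of knobs on this slide can be written out as a small calculation. The sketch below is illustrative only: the `NodeSpec` struct, its field names, and the example numbers are assumptions made here, not something taken from the talk.

```cpp
#include <cassert>

// Hypothetical description of a node; field names are illustrative only.
struct NodeSpec {
    double freq_ghz;         // knob 1: clock frequency in GHz
    int cores_per_socket;    // knob 2: cores per socket
    int sockets;             // knob 3: number of sockets
    double flops_per_cycle;  // knob 4: floating-point ops per core per clock
};

// Theoretical hardware peak in GFLOP/s: the product of knobs 1 to 4.
double peak_gflops(const NodeSpec& n) {
    assert(n.freq_ghz > 0 && n.cores_per_socket > 0 && n.sockets > 0);
    return n.freq_ghz * n.cores_per_socket * n.sockets * n.flops_per_cycle;
}

// What you really get: hardware peak scaled by software efficiency (knob 5),
// expressed as a value between 0 and 1.
double sustained_gflops(const NodeSpec& n, double efficiency) {
    assert(efficiency >= 0.0 && efficiency <= 1.0);
    return peak_gflops(n) * efficiency;
}
```

With assumed values of 2.5 GHz, 12 cores per socket, 2 sockets, and 16 double-precision operations per cycle, the peak is 960 GFLOP/s; an application running at 50% efficiency would sustain 480 GFLOP/s of that.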

- 9. Turning the knobs 1 - 4
  - Knob 1: Frequency is unlikely to change much. Thermal/power/leakage challenges
  - Knob 2: Moore’s Law still holds: 130 -> 14 nm. LOTS of transistors
  - Knob 3: Number of sockets per system is the easiest knob. Challenging for power/density/cooling/networking
  - Knob 4: IPC still grows. FMA3/4, AVX, FPGA implementations for algorithms. Challenging for the user/developer

- 10. What is Intel telling us?

- 11. New capabilities according to Intel. 2007: SSSE3; 2009: SSE4; 2012: AVX; 2013: AVX; 2014: AVX2; 2015: AVX2; 2017: AVX-512.

- 12. The state of ISV software

  | Segment | Applications | Vectorization support |
  | --- | --- | --- |
  | CFD | Fluent, LS-DYNA, STAR CCM+ | Limited SSE2 support |
  | CSM | CFX, RADIOSS, Abaqus | Limited SSE2 support |
  | Weather | WRF, UM, NEMO, CAM | Yes |
  | Oil and Gas | Seismic processing | Not applicable |
  | Oil and Gas | Reservoir Simulation | Yes |
  | Chemistry | Gaussian, GAMESS, Molpro | Not applicable |
  | Molecular dynamics | NAMD, GROMACS, Amber,… | PME kernels support SSE2 |
  | Biology | BLAST, Smith-Waterman | Not applicable |
  | Molecular mechanics | CPMD, VASP, CP2k, CASTEP | Yes |

  Bottom line: ISV support for new instructions is poor. Less of an issue for in-house developed codes, but programming is hard.

- 13. Meanwhile the bandwidth is suffering. [Chart: Cores, Clock, QPI, and Memory (scale 0 to 3.5) for Intel Xeon X5690, E5-2690, E5-2690v2, E5-2690v3, E5-2690v4, and Skylake]

- 14. Add to this the Memory Bandwidth and System Balance. Obtained from: http://sc16.supercomputing.org/2016/10/07/sc16-invited-talk-spotlight-dr-john-d-mccalpin-presents-memory-bandwidth-system-balance-hpc-systems/

- 15. And data is becoming sparser (think “Big Data”)
  - Sparse matrix-vector product $A x = y$ with sparse matrix “A”
  - Most entries are zero
  - Hard to exploit SIMD
  - Hard to use caches
  - This has very low arithmetic density and is hence memory bound
  - Common in CFD, but also in genetic evaluation of species
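To make the memory-bound argument concrete, here is a minimal sketch of a sparse matrix-vector product in compressed sparse row (CSR) form. CSR is a common storage choice picked here for illustration; the talk does not say which format the applications use, and the type and function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Sparse matrix in compressed sparse row (CSR) form: only non-zeros are stored.
struct CsrMatrix {
    std::size_t rows;
    std::vector<std::size_t> row_ptr;  // size rows + 1; row i spans [row_ptr[i], row_ptr[i + 1])
    std::vector<std::size_t> col_idx;  // column index of each stored value
    std::vector<double> values;        // the non-zero entries
};

// y = A * x. Each non-zero costs one multiply-add but needs a value, a column
// index, and an indirect (gather) load from x: few flops per byte moved.
std::vector<double> spmv(const CsrMatrix& a, const std::vector<double>& x) {
    assert(a.row_ptr.size() == a.rows + 1);
    std::vector<double> y(a.rows, 0.0);
    for (std::size_t i = 0; i < a.rows; ++i) {
        double sum = 0.0;
        for (std::size_t k = a.row_ptr[i]; k < a.row_ptr[i + 1]; ++k) {
            sum += a.values[k] * x[a.col_idx[k]];
        }
        y[i] = sum;
    }
    return y;
}
```

The indirect access `x[a.col_idx[k]]` is what makes SIMD and cache reuse difficult: consecutive iterations may read from unrelated parts of `x`.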

- 16. What does Intel do about these trends?

  | Problem | Westmere | Sandy Bridge | Ivy Bridge | Haswell | Broadwell | Skylake |
  | --- | --- | --- | --- | --- | --- | --- |
  | QPI bandwidth | No problem | Even better | Two snoop modes | Three snoop modes | Four (!) snoop modes | UPI; COD snoop modes |
  | Memory bandwidth | No problem | Extra memory channel | Larger cache | Extra load/store units | Larger cache | Extra load/store units; +50% memory channels |
  | Core frequency | No problem | More cores; AVX; better Turbo | Even more cores; above-TDP Turbo | Still more cores; AVX2; per-core Turbo | Again even more cores; optimized FMA; per-core Turbo based on instruction type | More cores; larger OOO engine; AVX-512; 3 different core frequency modes |

- 17. Optimizing without having the source code

- 18. Tuning knobs for performance. Hardware tuning knobs are limited, but there’s far more possible in the software layer. From easy to hard:
  - Hardware: BIOS, P-states, memory profile
  - Operating system: I/O cache tuning, process affinity, memory allocation
  - Middleware: MPI (parallel) tuning, use of performance libs (Math, I/O, IPP)
  - Application: compiler hints, source changes, adding parallelism

- 19. dell_affinity.exe: automatically adjust process affinity for the architecture

  | Mode | Time (s) |
  | --- | --- |
  | Original | 6342 |
  | Platform MPI | 6877 |
  | dell_affinity | 4844 |

  [Diagram: memory and QPI traffic between sockets. Original: 152 seconds. With dell_affinity: 131 seconds]

- 20. MPI profiling interface: dell_toolbox
  - Works with every application using MPI: plug-and-play!
  - Provides insight into the computation/communication ratio
  - Allows digging deeper into MPI internals
  - Generates CSV files that can be imported into Excel

  [Charts: wall clock time (s) per MPI rank, broken down by MPI function and application time; number of messages per MPI function on 1, 2, 4, 8, and 16 nodes; message size distribution (0-512 bytes, 512-4096 bytes, 4096-16384 bytes) for MPI_Bcast, MPI_Irecv, MPI_Isend, and MPI_Recv]

- 21. STAR CCM+ MPI tuning for F1 team. R022, lemans_trim_105m, 256 cores, PPN=16. AS-IS: 2812 seconds. Tuned MPI: 2458 seconds. 12.6% speedup. [Charts: wall clock time (s) per MPI rank, broken down by MPI function, for the as-is and tuned runs]

- 22. Altair Hyperworks MPI tuning. Neon benchmark, 16x M620, E5-2680v2, Intel MPI. As-is: 1507 seconds. Tuning knob #1: 1417 seconds. Tuning knob #2: 1287 seconds. 14.4% faster. [Charts: wall clock time (s) per MPI rank, broken down by MPI function and application time, for the three runs]

- 24. Case study: 3D anti-leakage FFT

- 25. Seismic reflection and processing. Oil companies use reflection seismology to estimate the properties of the Earth’s subsurface by analyzing the reflection of seismic waves. Image obtained from: https://en.wikipedia.org/wiki/Reflection_seismology#/media/File:Seismic_Reflection_Principal.png

- 26. Seismic data processing. Data processing is used to reconstruct the reflected seismic waves into a picture (deconvolution). These events can be relocated in space or time to the exact location by discrete Fourier transformations. Image obtained from: http://groups.csail.mit.edu/netmit/wordpress/wp-content/themes/netmit/images/sFFT.png

  $$F(\omega) = \int_{-\infty}^{\infty} f(t)\, e^{-i\omega t}\, dt$$
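For sampled data the integral above becomes a finite sum. The following is a naive O(N²) sketch of the discrete Fourier transform, included only to show what is being computed. It is not the anti-leakage FFT from the case study, and production code would use an FFT library instead.

```cpp
#include <cassert>
#include <cmath>
#include <complex>
#include <cstddef>
#include <vector>

// Naive discrete Fourier transform:
// X[k] = sum over n of x[n] * exp(-2*pi*i*k*n/N), for k = 0 .. N-1.
std::vector<std::complex<double>> dft(const std::vector<std::complex<double>>& x) {
    const std::size_t n_samples = x.size();
    const double pi = std::acos(-1.0);
    std::vector<std::complex<double>> out(n_samples);
    for (std::size_t k = 0; k < n_samples; ++k) {
        std::complex<double> sum(0.0, 0.0);
        for (std::size_t n = 0; n < n_samples; ++n) {
            const double angle = -2.0 * pi * static_cast<double>(k * n) /
                                 static_cast<double>(n_samples);
            sum += x[n] * std::polar(1.0, angle);  // unit-magnitude complex exponential
        }
        out[k] = sum;
    }
    return out;
}
```

A constant input signal has all of its energy in the zero-frequency bin, which makes a convenient sanity check.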

- 27. Benchmarking and profiling
  - A single record was used to limit the job turnaround time
  - All cores (including logical processors) are used
  - Profiling was done using Intel® Advisor XE
    - Shows application hotspots with the most time-consuming loops
    - Shows data dependencies
    - Shows potential performance gains (only with 2016 compilers)
    - Makes compiler output easier to read (only with 2016 compilers)

  Image obtained from: https://software.intel.com/en-us/intel-advisor-xe

- 28. Profile from a single record
  - 76.5% scalar code
  - 23.5% vectorized
  - Two routines account for the majority of the wall clock time

- 29. Data alignment and loop simplification

  AS-IS:

  ```cpp
  float *amp_tmp = mSub.amp_panel;
  int m = 0;
  int m1 = (np - ifq) * ny * nx;
  for (int n1 = 0; n1 < ny; n1++) {
      for (int n2 = 0; n2 < nx; n2++) {
          float a = four_data[m1].r + four_out[m1].r;
          float b = four_data[m1].i + four_out[m1].i;
          amp_tmp[m] = (a * a + b * b);
          m1 ++;
          m ++;
      }
  }
  ```

  OPT:

  ```cpp
  mSub.amp_panel = (float *) _mm_malloc(mySize * sizeof(float), 32);
  float *amp_tmp = mSub.amp_panel;
  int m1 = (np - ifq) * ny * nx;
  for (int n1 = 0; n1 < ny * nx; n1++) {
      __assume_aligned(amp_tmp, 32);
      __assume_aligned(four_data, 32);
      __assume_aligned(four_out, 32);
      amp_tmp[n1] = ((four_data[m1 + n1].r + four_out[m1 + n1].r) *
                     (four_data[m1 + n1].r + four_out[m1 + n1].r)) +
                    ((four_data[m1 + n1].i + four_out[m1 + n1].i) *
                     (four_data[m1 + n1].i + four_out[m1 + n1].i));
  }
  ```

- 30. Compiler diagnostic output

  AS-IS:

  ```text
  LOOP BEGIN at test3d_subs.cpp(701,4)
  remark #15389: vectorization support: reference four_data has unaligned access [ test3d_subs.cpp(702,40) ]
  remark #15389: vectorization support: reference four_out has unaligned access [ test3d_subs.cpp(702,40) ]
  remark #15389: vectorization support: reference amp_panel has unaligned access [ test3d_subs.cpp(704,7) ]
  remark #15381: vectorization support: unaligned access used inside loop body
  remark #15305: vectorization support: vector length 16
  remark #15309: vectorization support: normalized vectorization overhead 0.393
  remark #15300: LOOP WAS VECTORIZED
  remark #15442: entire loop may be executed in remainder
  remark #15450: unmasked unaligned unit stride loads: 2
  remark #15451: unmasked unaligned unit stride stores: 1
  remark #15460: masked strided loads: 4
  remark #15475: --- begin vector loop cost summary ---
  remark #15476: scalar loop cost: 29
  remark #15477: vector loop cost: 3.500
  remark #15478: estimated potential speedup: 6.310
  remark #15488: --- end vector loop cost summary ---
  LOOP END
  ```

  OPT:

  ```text
  LOOP BEGIN at test3d_subs.cpp(692,8)
  remark #15388: vectorization support: reference amp_panel has aligned access [ test3d_subs.cpp(696,4) ]
  remark #15389: vectorization support: reference four_data has unaligned access [ test3d_subs.cpp(696,4) ]
  remark #15389: vectorization support: reference four_out has unaligned access [ test3d_subs.cpp(696,4) ]
  remark #15381: vectorization support: unaligned access used inside loop body
  remark #15305: vectorization support: vector length 16
  remark #15309: vectorization support: normalized vectorization overhead 0.120
  remark #15300: LOOP WAS VECTORIZED
  remark #15449: unmasked aligned unit stride stores: 1
  remark #15450: unmasked unaligned unit stride loads: 2
  remark #15460: masked strided loads: 8
  remark #15475: --- begin vector loop cost summary ---
  remark #15476: scalar loop cost: 43
  remark #15477: vector loop cost: 3.120
  remark #15478: estimated potential speedup: 9.070
  remark #15488: --- end vector loop cost summary ---
  LOOP END
  ```

- 31. Expensive type conversions

  AS-IS:

  ```cpp
  for (int i = 0; i < nx; i++){
      int ii = i - nxh;
      float fii = (float) ii*scale + (float) (nxh) ;
      if (fii >= 0.0) {
          ii = (int) fii;
          ip = ii+1;
          if(ip < nx && ii >= 0) {
              t = (fjj - (float) jj);
              u = (fii - (float) ii);
              int n1 = jj*nx + ii;
              int n2 = jp*nx + ii;
              int n3 = jp*nx + ip;
              int n4 = jj*nx + ip;
              amp[m] += ((1.0-t)*(1.0-u)*amp_tmp[n1]+t*(1.0-u)*amp_tmp[n2]+t*u*amp_tmp[n3]+(1.0-t)*u*amp_tmp[n4]);
          }
      }
      m ++;
  }
  ```

  ```text
  remark #15417: vectorization support: number of FP up converts: single precision to double precision 8 [ test3d_subs.cpp(784,26) ]
  remark #15418: vectorization support: number of FP down converts: double precision to single precision 1 [ test3d_subs.cpp(784,26) ]
  remark #15300: LOOP WAS VECTORIZED
  remark #15475: --- begin vector loop cost summary ---
  remark #15476: scalar loop cost: 48
  remark #15477: vector loop cost: 25.870
  remark #15478: estimated potential speedup: 1.780
  remark #15487: type converts: 12
  ```

- 32. Expensive type conversions - solution

  OPT:

  ```cpp
  union convert_var_type { int i; float f; };
  union convert_var_type convert_fii_1, convert_fii_2;
  for (ii = -nxh; ii < nx - nxh; ii++) {
      convert_fii_1.f = ii * scale + nxh;
      fii = convert_fii_1.f;
      if (fii >= 0.0f) {
          convert_fii_2.i = fii;
          iii = convert_fii_2.i;
          if(iii < nx - 1 && iii >= 0) {
              convert_iii.f = iii;
              t = fjj - jjf;
              u = fii - convert_iii.f;
              n1 = jjj * nx + iii;
              n2 = (jjj + 1) * nx + iii;
              amp[m] += ((1.0f-t)*(1.0f-u)*amp_tmp[n1]+t*(1.0f-u)*amp_tmp[n2]
                        +t*u*amp_tmp[n2+1]+(1.0f-t)*u*amp_tmp[n1+1]);
          }
      }
      m ++;
  }
  ```

  ```text
  remark #15300: LOOP WAS VECTORIZED
  remark #15475: --- begin vector loop cost summary ---
  remark #15476: scalar loop cost: 42
  remark #15477: vector loop cost: 20.370
  remark #15478: estimated potential speedup: 1.960
  remark #15487: type converts: 3
  ```
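One source of the up and down converts reported by the compiler is the use of `1.0` (a `double` literal) in an otherwise single-precision expression. The sketch below isolates that effect with two hypothetical helper functions, which are not part of the original code; the `static_assert`s confirm the type of each expression at compile time.

```cpp
#include <cassert>
#include <type_traits>

// Interpolation weight written with double literals: the float operands are
// promoted to double, and the result is converted back to float on return.
inline float weight_mixed(float t, float u) {
    static_assert(std::is_same<decltype((1.0 - t) * (1.0 - u)), double>::value,
                  "1.0 is a double literal, so the expression is double");
    return (1.0 - t) * (1.0 - u);
}

// Same expression with float literals: everything stays in single precision.
inline float weight_float(float t, float u) {
    static_assert(std::is_same<decltype((1.0f - t) * (1.0f - u)), float>::value,
                  "1.0f keeps the expression in float");
    return (1.0f - t) * (1.0f - u);
}
```

Both functions return the same value for inputs that are exactly representable, but only the second avoids the float-to-double and double-to-float conversions inside a vectorized loop.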

- 33. What about parallelization?

  Window processing is embarrassingly parallel:

  ```cpp
  #pragma omp parallel for schedule(dynamic, 1)
  for (int iwin = 0; iwin < NumberOfWindows; iwin++) {
      /* thread number handling the window */
      int tn = omp_get_thread_num();
      // << Do computation >>
  }
  ```

  Memory allocation is not:

  ```cpp
  for (int i = 0; i < numThreads; i++) {
      mySize = maxntr_win;
      if(!(mem_sub[i].trcid_win = new int[mySize])) ierr = 1;
      memset(mem_sub[i].trcid_win, 0, sizeof(int) * mySize);
      mb += sizeof(int) * mySize;
  }
  ```

- 34. What happens? Memory allocation is done by a single thread. All references for other threads end up on the NUMA domain of that thread. [Diagram: CPU 0 and CPU 1]

- 35. Correct way of “first touch” memory allocation. Do the memory allocation and population in parallel so that each thread initializes its own memory. [Diagram: CPU 0 and CPU 1]

  ```cpp
  for (int i = 0; i < numThreads; i++) {
      mySize = maxntr_win;
      if(!(mem_sub[i].trcid_win = new int[mySize])) ierr = 1;
  }
  #pragma omp parallel
  {
      /* assumes the parallel region runs with numThreads threads */
      int i = omp_get_thread_num();
      /* first touch: each thread zeroes its own buffer */
      memset(mem_sub[i].trcid_win, 0, sizeof(int) * mySize);
      /* mb is shared between threads, so update it atomically */
      #pragma omp atomic
      mb += sizeof(int) * mySize;
  }
  ```
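The same first-touch idea applies to a single large array shared by all threads. The sketch below is a minimal illustration with a hypothetical helper name, assuming an operating system with a first-touch page placement policy (the Linux default). It uses only an OpenMP pragma and no runtime calls, so it also compiles and runs serially when OpenMP is disabled.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>

// Allocate without initializing, then let a statically scheduled parallel loop
// write the first value to each element. Under a first-touch policy each page
// is placed on the NUMA node of the thread that first writes to it.
std::unique_ptr<int[]> alloc_first_touch(std::size_t n, std::size_t& bytes_touched) {
    std::unique_ptr<int[]> data(new int[n]);  // no zero-fill here
    long long bytes = 0;
    // The reduction gives each thread a private counter and sums them at the
    // end, which avoids a data race on the shared total.
    #pragma omp parallel for schedule(static) reduction(+ : bytes)
    for (long long i = 0; i < static_cast<long long>(n); ++i) {
        data[i] = 0;  // first touch happens in the thread that owns this chunk
        bytes += static_cast<long long>(sizeof(int));
    }
    bytes_touched = static_cast<std::size_t>(bytes);
    return data;
}
```

The placement only pays off if later compute loops use the same static schedule, so that each thread works on the chunk it initialized.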

- 36. Final result of optimized code
  - 76.5% vectorized
  - 23.5% scalar code

- 37. Final results

  | Node | Original version | Optimized version |
  | --- | --- | --- |
  | E5-2670 @ 2.6 GHz, 32 threads | 192 seconds | 145 seconds (+24.4%) |
  | E5-2680 v3 @ 2.5 GHz, 48 threads | 156 seconds | 112 seconds (+28.2%) |

  - 24.4% speedup obtained on SNB, 28.2% on HSW
  - Even better with 16.1 compilers, optimized version:

  | Node | With AVX | With FMA + AVX2 |
  | --- | --- | --- |
  | E5-2680 v3 @ 2.5 GHz, 48 threads | 86 seconds | 68 seconds (+20.9%) |
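The percentages on this slide are consistent with a relative reduction in wall clock time. As a worked check, assuming that definition (the slide does not state it explicitly):

```latex
\[
\text{improvement} = \frac{t_{\text{orig}} - t_{\text{opt}}}{t_{\text{orig}}}:
\qquad
\frac{192 - 145}{192} \approx 0.245, \quad
\frac{156 - 112}{156} \approx 0.282, \quad
\frac{86 - 68}{86} \approx 0.209
\]
```

The first value is 24.48% before rounding, so the 24.4% on the slide appears to be truncated rather than rounded.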
