Troubleshooting TCP/IP

- 1. TCP/IP and Network Performance Tuning Oyya-info www.oyya.synthasite.com http://oyya.synthasite.com/contact.php

- 2. Unique HPC Environment
  - The Internet is being optimized for:
    - millions of users behind low-speed soda straws
    - thousands of high-bandwidth servers serving millions of soda straw streams
  - Single high-speed to high-speed flows get little commercial attention

- 3. What’s on the Internet?
  - Well over 90% of it is TCP
  - Most flows are less than 30 packets long
  - InternetMCI, 1998, k. claffy

- 4. TCP Throughput Limit #1
  - bps <= available bandwidth (unused bandwidth on slowest speed link)
  - SSC-SD Border Router, 26 April 2000

- 5. Things You Can Do
  - Throw out your low speed interfaces and networks!
  - Make sure Routes and DNS report high-speed interfaces
  - Don’t over-utilize your links (<50%?)
  - Use routers sparingly, host routers not at all (`routed -q`)

- 6. High “Speed” Networks
  - Capacity: OC3 155 Mbps, DS3 45 Mbps

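- 7. Packet Lengths in Fiber

  | Link | Mbps | Bits/Mile | Miles/1500B Pkt |
  |------|------|-----------|-----------------|
  | T1   | 1.5  | 11.7      | 1026            |
  | Eth  | 10   | 78.1      | 154             |
  | T3   | 45   | 351       | 34              |
  | FEth | 100  | 781       | 15              |
  | OC3  | 155  | 1210      | 10              |
  | OC12 | 622  | 4860      | 2.5             |
  | OC48 | 2488 | 19440     | 3260 feet       |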
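The table's columns can be reproduced with a few lines of arithmetic. This is a minimal sketch: the propagation delay of 7.81 microseconds per mile is not stated on the slide, it is inferred here from the table's own Ethernet row (78.1 bits/mile at 10 Mbps), and the function names are illustrative.

```python
# Assumption: propagation delay inferred from the table (78.1 bits/mile at 10 Mbps).
US_PER_MILE = 7.81  # microseconds of propagation delay per mile of fiber


def bits_per_mile(mbps: float) -> float:
    """Bits 'stored' in one mile of fiber: rate (Mbps) * delay (microseconds)."""
    return mbps * US_PER_MILE


def miles_per_packet(mbps: float, packet_bytes: int = 1500) -> float:
    """Length of one packet laid out along the fiber, in miles."""
    return packet_bytes * 8 / bits_per_mile(mbps)


for name, mbps in [("T1", 1.5), ("Eth", 10), ("OC3", 155), ("OC48", 2488)]:
    print(f"{name:5s} {bits_per_mile(mbps):9.1f} bits/mile "
          f"{miles_per_packet(mbps):8.2f} miles/pkt")
```

The results land within rounding of the slide's figures; for OC48 a 1500-byte packet is well under a mile long.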

- 8. Bandwidth*Delay Product
  - Bandwidth * Delay = number of bytes in flight to fill path
  - TCP needs a receive window (rwin) equal to or greater than the BW * Delay product to achieve maximum throughput
  - TCP often needs sender side socket buffers of 2*BW*Delay to recover from errors
  - You need to send about 3*BW*Delay bytes for TCP to achieve maximum speed
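As a rough illustration of these rules of thumb, the sketch below computes the three quantities for one path. The path itself (a 155 Mbps OC3 with a 40 msec round trip time) is a hypothetical example, not a measurement from the slides, and "Delay" is taken to mean the round trip time.

```python
def bdp_bytes(bandwidth_bps: float, rtt_s: float) -> float:
    """Bytes in flight needed to fill the path: bandwidth (bits/s) * delay (s) / 8."""
    return bandwidth_bps * rtt_s / 8


# Hypothetical path: OC3 (155 Mbps) with a 40 msec round trip time.
bdp = bdp_bytes(155e6, 0.040)
rwin_needed = bdp        # receive window >= BW * Delay
send_buffer = 2 * bdp    # sender socket buffer, to recover from errors
warmup_bytes = 3 * bdp   # bytes sent before TCP reaches maximum speed

print(f"BW*Delay    = {bdp / 1e3:.0f} kB")
print(f"send buffer = {send_buffer / 1e3:.0f} kB")
print(f"warm-up     = {warmup_bytes / 1e3:.0f} kB")
```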

- 9. TCP Throughput Limit #2
  - bps <= recv_window_size / round_trip_time
  - E.g. 8kB window, 87 msec ping time = 750 kbps
  - E.g. 64kB window, 14 msec rtt = 37 Mbps
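The two examples can be checked directly. This sketch assumes "kB" means 1024 bytes; the function name is illustrative.

```python
def window_limited_bps(window_bytes: int, rtt_s: float) -> float:
    """Upper bound on TCP throughput (bits/s) with one window in flight per RTT."""
    return window_bytes * 8 / rtt_s


small = window_limited_bps(8 * 1024, 0.087)   # 8 kB window, 87 msec RTT
large = window_limited_bps(64 * 1024, 0.014)  # 64 kB window, 14 msec RTT

print(f"{small / 1e3:.0f} kbps")
print(f"{large / 1e6:.1f} Mbps")
```

Both results round to the figures on the slide (about 750 kbps and 37 Mbps).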

- 10. Maximum TCP/IP Data Rate 64 KB Window Size

- 11. Things You Can Do
  - Make sure your HPC apps offer sufficient receive windows and use sufficient send buffers
  - But don’t run your system out of memory
  - Find out the RTT with ping
  - Check your path via traceroute

- 12. FreeBSD Tuning
  - FreeBSD 3.4 defaults are 524288 max, 16384 default
  - `/sbin/sysctl -w kern.ipc.maxsockbuf=1048576`
  - `/sbin/sysctl -w net.inet.tcp.sendspace=32768`
  - `/sbin/sysctl -w net.inet.tcp.recvspace=32768`
  - Enabling High Performance Data Transfers on Hosts: http://www.psc.edu/networking/perf_tune.html

- 13. TCPTune
  - A TCP Stack Tuner for Windows
  - http://moat.nlanr.net/Software/TCPtune/
  - Makes sure high performance parameters are set
  - Many such utilities exist for modems, e.g. DunTweak, but they reduce performance on high speed networks

- 14. Traceroute
  - Matt's traceroute [v0.41], damp-ssc.spawar.navy.mil, Sun Apr 23 23:29:51 2000
  - Keys: D - Display mode, R - Restart statistics, Q - Quit

  | Hop | Hostname | %Loss | Rcv | Snt | Last | Best | Avg | Worst |
  |-----|----------|-------|-----|-----|------|------|-----|-------|
  | 1 | taco2-fe0.nci.net | 0% | 24 | 24 | 0 | 0 | 0 | 1 |
  | 2 | nccosc-bgp.att-disc.net | 0% | 24 | 24 | 1 | 1 | 1 | 6 |
  | 3 | pennsbr-aip.att-disc.net | 0% | 24 | 24 | 84 | 84 | 84 | 86 |
  | 4 | sprint-nap.vbns.net | 0% | 24 | 24 | 84 | 84 | 84 | 86 |
  | 5 | cs-hssi1-0.pym.vbns.net | 0% | 23 | 24 | 89 | 88 | 152 | 407 |
  | 6 | jn1-at1-0-0-0.pym.vbns.net | 0% | 23 | 23 | 88 | 88 | 88 | 90 |
  | 7 | jn1-at1-0-0-13.nor.vbns.net | 0% | 23 | 23 | 88 | 88 | 88 | 90 |
  | 8 | jn1-so5-0-0-0.dng.vbns.net | 0% | 23 | 23 | 89 | 88 | 91 | 116 |
  | 9 | jn1-so5-0-0-0.dnj.vbns.net | 0% | 23 | 23 | 112 | 111 | 112 | 113 |
  | 10 | jn1-so4-0-0-0.hay.vbns.net | 0% | 23 | 23 | 135 | 134 | 135 | 135 |
  | 11 | jn1-so0-0-0-0.rto.vbns.net | 0% | 23 | 23 | 147 | 147 | 147 | 147 |
  | 12 | 192.12.207.22 | 5% | 22 | 23 | 98 | 98 | 113 | 291 |
  | 13 | pinot.sdsc.edu | 0% | 23 | 23 | 152 | 152 | 152 | 156 |
  | 14 | ipn.caida.org | 0% | 23 | 23 | 152 | 152 | 152 | 160 |

- 15. Path Performance: Latency vs. Bandwidth
  - The highest bandwidth path is not always the highest throughput path!
  - Diagram labels: vBNS, DREN, SprintNAP (NJ), SDSC (CA), OC3 Path, DS3 Path
  - Host A (Perryman, MD) and Host B (Aberdeen, MD) are 15 miles apart
  - DS3 path is ~250 miles
  - OC3 path is ~6000 miles
  - The network chose the OC3 path with 24x the rtt

- 16. MPing - A Windowed Ping
  - Sends windows full of ICMP Echo or UDP Port Unreachable packets
  - Shows packet throughput and loss under varying load (window sizes)
  - Example: window size = 5
    - transmit 1, 2, ... 5
    - recv 1 (can send ack 1 + win 5 = 6)
    - recv 2 (can send ack 2 + win 5 = 7)
    - ...
    - recv 5 (can send ack 5 + win 5 = 10)
    - recv 6 (can send ack 6 + win 5 = 11)
  - Diagram annotation: bad things happen

- 17. MPing on a “Normal” Path
  - Rtt window not yet full
  - Slope = 1 / rtt
  - Stable queueing region
  - Tail-drop behavior

- 18. MPing on a “Normal” Path
  - Stable data rate: 3600*1000*8 = 29 Mbps
  - Queue size: (280-120)*1000 = 160 KB
  - Effective BW*Delay Product: 120*1000 = 120 KB
  - Packet Loss: 1 - 3600/4800 = 25%
  - Rtt = 120/3600 = 33 msec
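The arithmetic on this slide can be spelled out as below. The slide gives only the numbers, so the meaning attached to each one (1000-byte packets, 3600 packets/s delivered, 4800 packets/s offered, a window of 120 packets where the path fills and 280 where drops begin) is an interpretation of the graph, and the variable names are illustrative.

```python
PACKET_BYTES = 1000   # assumed probe packet size
stable_pps = 3600     # packets/s delivered in the stable region
offered_pps = 4800    # packets/s offered once the queue overflows
knee_window = 120     # window (packets) at which the path is just full
drop_window = 280     # window (packets) at which tail drops begin

data_rate_bps = stable_pps * PACKET_BYTES * 8              # stable data rate
queue_bytes = (drop_window - knee_window) * PACKET_BYTES   # queue size
bdp_bytes = knee_window * PACKET_BYTES                     # effective BW*Delay
loss_fraction = 1 - stable_pps / offered_pps               # packet loss
rtt_s = knee_window / stable_pps                           # round trip time

print(f"rate  {data_rate_bps / 1e6:.1f} Mbps")
print(f"queue {queue_bytes / 1e3:.0f} KB, BW*Delay {bdp_bytes / 1e3:.0f} KB")
print(f"loss  {loss_fraction:.0%}, rtt {rtt_s * 1e3:.0f} msec")
```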

- 19. Some MPing Results #1
  - RTT is increasing as load increases
  - Slow packet processing?
  - Fairly normal behavior
  - Discarded packets are costing some performance loss

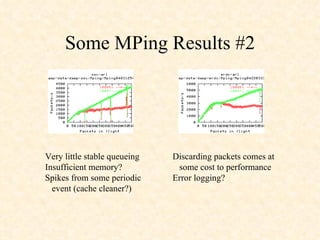

- 20. Some MPing Results #2
  - Very little stable queueing
  - Insufficient memory?
  - Spikes from some periodic event (cache cleaner?)
  - Discarding packets comes at some cost to performance
  - Error logging?

- 21. Some MPing Results #3
  - Fairly constant packet loss, even under light load
  - Major packet loss, ~7/8 or 88%
  - Hump at 50 may be duplex problem
  - Both turned out to be an auto-negotiation duplex problem
  - Setting to static full-duplex fixed these!

- 22. Some MPing Results #4
  - Oscillations with little loss
  - Rate shaping?
  - Decreasing performance with increasing queue length
  - Typical of Unix boxes with poor queue insertion

- 23. TCP - Not Your Father’s Protocol
  - TCP, RFC793, Sep 1981
  - Reno, BSD, 1990
  - Path MTU Discovery, RFC1191, Nov 1990
  - Window Scale, PAWS, RFC1323, May 1992
  - SACK, RFC2018, Oct 1996
  - NewReno, April 1999
  - More on the way!

- 24. TCP Reno
  - Most modern TCP’s are “Reno” based
  - Reno defined (refined) four key mechanisms:
    - Slow Start
    - Congestion Avoidance
    - Fast Retransmit
    - Fast Recovery
  - NewReno refined fast retransmit/recovery when partial acknowledgements are available

- 25. Important Points About TCP
  - TCP is adaptive
  - It is constantly trying to go faster
  - It always slows down when it detects a loss
  - How much it sends is controlled by windows
  - When it sends is controlled by received ACK’s (or timeouts)

- 26. TCP Throughput Limit #3
  - Once window size and available bandwidth aren’t the limit:

    $$\text{Bandwidth} < \frac{0.7 \times \text{Max Segment Size (MTU)}}{\text{Round Trip Time (latency)} \times \sqrt{\text{loss}}}$$

    M. Mathis, et al.
  - Double the MTU, double the throughput
  - Halve the latency, double the throughput (shortest path matters)
  - Halve the loss rate, 40% higher throughput

- 27. Maximum Transmission Unit (MTU) Issues
  - http://sd.wareonearth.com/woe/jumbo.html
  - New York to Los Angeles. Round Trip Time (rtt) is about 32 msec, and let's say packet loss is 0.1% (0.001). With an MSS of 1500 bytes, TCP throughput will have an upper bound of about 8.3 Mbps! And no, that is not a window size limitation, but rather one based on TCP's ability to detect and recover from congestion (loss). With 9000 byte frames, TCP throughput could reach about 50 Mbps.
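The New York to Los Angeles figures follow from the loss-based throughput limit (0.7 * MSS / (RTT * sqrt(loss))). The sketch below plugs in the slide's numbers; the function and parameter names are illustrative, and the result is converted from bytes to bits per second.

```python
import math


def loss_limited_bps(mss_bytes: float, rtt_s: float, loss: float,
                     c: float = 0.7) -> float:
    """Upper bound on TCP throughput (bits/s): c * MSS / (RTT * sqrt(loss))."""
    return c * mss_bytes * 8 / (rtt_s * math.sqrt(loss))


standard = loss_limited_bps(1500, 0.032, 0.001)  # 1500-byte MSS
jumbo = loss_limited_bps(9000, 0.032, 0.001)     # 9000-byte frames

print(f"1500 B: {standard / 1e6:.1f} Mbps")
print(f"9000 B: {jumbo / 1e6:.1f} Mbps")

# Halving the loss rate raises the bound by sqrt(2), i.e. about 40%.
gain = loss_limited_bps(1500, 0.032, 0.0005) / standard
print(f"gain from halving loss: {gain:.2f}x")
```

Because the bound is linear in MSS, the 9000-byte case is exactly six times the 1500-byte case.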

- 28. Things You Can Do
  - Use only large MTU interfaces/routers/links (no Gigabit Ethernet?)
  - Never reduce the MTU (or bandwidth) on the path between each/every host and the WAN
  - Make sure your TCP uses Path MTU Discovery
  - Compare your throughput to Treno

- 29. Treno
  - http://www.psc.edu/networking/treno_info.html
  - Treno tells you what a good TCP should be able to achieve (Bulk Transfer Capacity)
  - Easy 10 second test, no server required
  - Example run:
    - `damp-mhpcc% treno damp-pmrf`
    - `MTU=8166 MTU=4352 MTU=2002 MTU=1492 ..........`
    - `Replies were from damp-pmrf [198.97.151.50]`
    - `Average rate: 63470.5 kbp/s (55241 pkts in + 87 lost = 0.16%) in 10.03 s`
    - `Equilibrium rate: 63851.9 kbp/s (54475 pkts in + 86 lost = 0.16%) in 9.828 s`
    - `Path properties: min RTT was 8.77 ms, path MTU was 1440 bytes`
    - `XXX Calibration checks are still under construction, use -v`

- 30. TCP Connection Establishment
  - Three-way handshake: SYN, SYN + ACK, ACK
  - tcpdump: look for window sizes, window scale, timestamps, MTU, SackOK, Don’t-Fragment
    - `15:52:08.756479 dexter.20 > tesla.1391: S 137432:137432(0) win 65535 <mss 1460,nop,wscale 1,nop,nop,timestamp 12966275 0>`
    - `15:52:08.756524 tesla.1391 > dexter.20: S 294199:294199(0) ack 137433 win 16384 <mss 1460,nop,wscale 0,nop,nop,timestamp 12966020 12966275>`

- 31. Things You Can Do
  - Check your TCP for high performance features
  - “Do the math”, i.e. know what kind of throughput and loss to expect for your situation
  - Look for sources of loss
  - Watch out for duplex problems (late collisions?)

- 32. “Do The Math”
  - Calculate needed peak window in bytes. Note that this is twice the window needed to just barely fill the link: Wb = 2*RTT*Rb (Rb is the desired data rate in Bytes per second)
  - Calculate needed window in packets: Wp = Wb/MSS
  - Calculate needed window scale: Ws = log2(Wb/64k), rounded up. E.g. if Wb = 100kB, Ws = 1
  - Calculate needed packet rate: Rp = Rb/MSS
  - Calculate needed loss interval. This is the approximate number of bytes that must be sent between successive losses to meet the target data rate: Li = 3/8 Wb * Wp
  - Source: testrig pages at NCNE/PSC
  - Example: Rb = 11.25 MBps (90 Mbps), Rtt = 0.040 sec (40 msec), MSS = 1440 Bytes
    - Wb = 900 KBytes
    - Wp = 625 packets
    - Ws = 4
    - Rp = 7813 pps
    - Li = 211 MBytes!
    - ploss = 5x10^-6! (0.0005%)
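The worked example on this slide can be reproduced with a short script. This is a sketch under two assumptions that the slide does not spell out: "64k" is taken as 65536 bytes, and the window scale is rounded up to the next whole number. The function name and dictionary keys are illustrative; the loss probability is left out because the slide does not give a formula for it.

```python
import math


def do_the_math(rb_bytes_per_s: float, rtt_s: float, mss_bytes: int) -> dict:
    """Window, packet rate and loss interval needed for a target data rate."""
    wb = 2 * rtt_s * rb_bytes_per_s                # peak window, bytes
    wp = wb / mss_bytes                            # peak window, packets
    ws = max(0, math.ceil(math.log2(wb / 65536)))  # window scale (assumed rounding)
    rp = rb_bytes_per_s / mss_bytes                # packets per second
    li = 3 / 8 * wb * wp                           # bytes between losses
    return {"Wb": wb, "Wp": wp, "Ws": ws, "Rp": rp, "Li": li}


result = do_the_math(rb_bytes_per_s=11.25e6, rtt_s=0.040, mss_bytes=1440)
for name, value in result.items():
    print(f"{name} = {value:,.1f}")
```

With the slide's inputs this gives a 900 KByte window, 625 packets, a window scale of 4, about 7813 packets per second, and a loss interval of roughly 211 MBytes.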

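- 33. TCP’s Sliding Window
  - Segments 1 2 3 4 5 6 7 8 9 10 11 ... fall into four regions: sent and ACKed; sent, not ACKed; can send ASAP; can’t send until window moves
  - The offered receiver window spans the "sent, not ACKed" and "can send ASAP" segments
  - The usable window is the "can send ASAP" part
  - W. R. Stevens, 20.3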

- 34. TCP Congestion Window
  - Congestion window (cwnd) controls startup and limits throughput in the face of loss
  - cwnd gets larger after every new ACK
  - cwnd gets smaller when loss is detected
  - Usable window = min(rwin, cwnd)

- 35. Cwnd During Slowstart
  - cwnd is increased by one segment for every new ACK
  - cwnd doubles every round trip time
  - cwnd is reset to one segment after a loss detected by timeout
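A toy model makes the doubling visible. It is a simplified sketch, not a TCP implementation: it counts in whole segments, assumes one ACK per segment (no delayed ACKs) and no loss, and caps what is sent at min(rwin, cwnd). The function name and the 64-segment receive window are illustrative.

```python
def slow_start(round_trips: int, rwin: int = 64, initial_cwnd: int = 1) -> list:
    """Usable window (in segments) at the start of each round trip."""
    cwnd = initial_cwnd
    usable = [min(rwin, cwnd)]
    for _ in range(round_trips):
        sent = min(rwin, cwnd)  # segments in flight this round trip
        cwnd += sent            # +1 segment per ACK received
        usable.append(min(rwin, cwnd))
    return usable


print(slow_start(8))
```

The usable window doubles each round trip until it reaches the receive window, after which rwin rather than cwnd is the limit.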

- 36. Slowstart and Congestion Avoidance Together

- 37. Delayed ACKs
  - TCP receivers send ACK’s:
    - after every second segment
    - after a delayed ACK timeout
    - on every segment after a loss (missing segment)
  - A new segment sets the ACK timer (0-200 msec)
  - A second segment (or timeout) triggers an ACK and zeros the delayed ACK timer

- 38. ACK Clocking
  - A queue forms in front of a slower speed link
  - The slower link causes packets to spread
  - The spread packets result in spread ACK’s
  - The spread ACK’s end up clocking the source packets at the slower link rate

- 39. Detecting Loss
  - Packets get discarded when queues are full (or nearly full)
  - Duplicate ACK’s get sent after missing or out of order packets
  - Most TCP’s retransmit after the third duplicate ACK (“triple duplicate ACK”)

- 40. Random Early Detection (RED)
  - Discards arriving packets as a function of queue length
  - Gives TCP better congestion indications (drops)
  - Avoids “Global Synchronization”
  - Increases total number of drops
  - Increases link utilization
  - Many variations (weighted, classed, etc.)
  - Diagram: a queue in front of a 155 Mbps to 10 Mbps transition
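"Discards arriving packets as a function of queue length" can be sketched with the textbook RED drop curve: no drops below a minimum threshold, a linear ramp up to a maximum probability, and forced drops above a maximum threshold. The slide gives no parameters, so the thresholds and `max_p` below are illustrative assumptions, and real implementations apply this to a moving average of the queue length with further refinements.

```python
def red_drop_probability(avg_queue: float, min_th: float, max_th: float,
                         max_p: float) -> float:
    """Textbook RED curve: drop probability as a function of average queue length."""
    if avg_queue < min_th:
        return 0.0   # short queue: never drop
    if avg_queue >= max_th:
        return 1.0   # long queue: drop every arrival
    # In between: ramp linearly from 0 up to max_p.
    return max_p * (avg_queue - min_th) / (max_th - min_th)


# Hypothetical settings: thresholds of 10 and 30 packets, max_p of 10%.
for q in (5, 10, 20, 29, 30):
    print(q, red_drop_probability(q, min_th=10, max_th=30, max_p=0.1))
```

Dropping a few packets early, at random, spreads the congestion signal across flows instead of hitting all of them at once when the queue overflows.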

- 41. SACK TCP
  - Selective Acknowledgement
  - Specifies exactly which bytes were missed
  - Better measures the “right edge” of the congestion window
  - Does a very good job keeping your queues full
  - Will cause latencies to go way up
  - Without RED, will cause global sync faster
  - Win98, Win2k, Linux have SACK

- 42. Things You Can Do
  - Consider using RED on your routers before wide scale deployment of SACK TCP
  - SACK won’t care very much but your old TCP’s will thank you
  - Consider a priority class of service for interactive traffic?

- 43. A preconfigured TCP test rig http://www.ncne.nlanr.net/TCP/testrig/

- 44. Tcptrace -l
  - TCP connection 1:
    - host a: sd.wareonearth.com:1095
    - host b: amp2.sd.wareonearth.com:56117
    - complete conn: yes
    - first packet: Sun Apr 23 23:35:29.645263 2000
    - last packet: Sun Apr 23 23:35:41.108465 2000
    - elapsed time: 0:00:11.463202
    - total packets: 107825
    - filename: trace.0.20000423233526

  | Field | a->b | b->a |
  |-------|------|------|
  | total packets | 72032 | 35793 |
  | ack pkts sent | 72031 | 35793 |
  | pure acks sent | 2 | 35791 |
  | unique bytes sent | 104282744 | 0 |
  | actual data pkts | 72029 | 0 |
  | actual data bytes | 104282744 | 0 |
  | rexmt data pkts | 0 | 0 |
  | rexmt data bytes | 0 | 0 |
  | outoforder pkts | 0 | 0 |
  | pushed data pkts | 72029 | 0 |
  | SYN/FIN pkts sent | 1/1 | 1/1 |
  | req 1323 ws/ts | Y/Y | Y/Y |
  | adv wind scale | 0 | 4 |
  | req sack | Y | N |
  | sacks sent | 0 | 0 |
  | mss requested | 1460 bytes | 1460 bytes |
  | max segm size | 1448 bytes | 0 bytes |
  | min segm size | 448 bytes | 0 bytes |
  | avg segm size | 1447 bytes | 0 bytes |
  | max win adv | 32120 bytes | 750064 bytes |
  | min win adv | 32120 bytes | 65535 bytes |
  | zero win adv | 0 times | 0 times |
  | avg win adv | 32120 bytes | 30076 bytes |
  | initial window | 2896 bytes | 0 bytes |
  | initial window | 2 pkts | 0 pkts |
  | ttl stream length | 104857600 bytes | 0 bytes |
  | missed data | 574856 bytes | 0 bytes |
  | truncated data | 101833758 bytes | 0 bytes |
  | truncated packets | 72029 pkts | 0 pkts |
  | data xmit time | 11.461 secs | 0.000 secs |
  | idletime max | 372.0 ms | 246.8 ms |
  | throughput | 9097174 Bps | 0 Bps |
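As a sanity check on this output, the reported throughput appears to be the unique bytes sent divided by the elapsed time of the connection. The sketch below redoes that division with the values from the trace and converts the result to Mbps; the variable names are illustrative.

```python
unique_bytes_sent = 104282744   # a->b "unique bytes sent"
elapsed_s = 11.463202           # "elapsed time" 0:00:11.463202

throughput_Bps = unique_bytes_sent / elapsed_s   # bytes per second
throughput_mbps = throughput_Bps * 8 / 1e6       # megabits per second

print(f"{throughput_Bps:,.0f} Bps = {throughput_mbps:.1f} Mbps")
```

The result agrees with the 9097174 Bps reported by tcptrace to within a byte per second, or just under 73 Mbps.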

- 51. A Little More Loss

- 54. Receiving host/application is too slow

- 55. Too Straight - non-TCP limit

- 56. TCP Futures/Ideas
  - Different retransmit/recovery schemes
  - TCP Tahoe, Vegas, ...
  - Pacing - removing burstiness by spreading the packets over a round trip time (BLUE)
  - Rate-halving to recover ACK clocking more quickly
  - Receiver mods to prevent sender “cheating”
  - Autotuning buffer space usage
  - Kick-starting TCP after timeouts

- 57. Resources
  - Enabling High Performance Data Transfers on Hosts: http://www.psc.edu/networking/perf_tune.html
  - Internet Measurement Tool Taxonomy: http://www.caida.org/tools/taxonomy/measurement/
  - http://www.oyya.synthasite.com/
  - http://oyya.synthasite.com/contact.php
