Tutorial: Image Generation and Image-to-Image Translation using GAN

- 1. Implementation Tutorial Day 2: Image Generation and Image-to-Image Translation using GAN. Wu Hyun Shin, MLAI, KAIST, 19 Feb. 2019.

- 2. Overview. This tutorial has two parts:
  1. Image Generation using GAN (DCGAN), 90 min.
  2. Image Translation using GAN (CycleGAN), 90 min.

  - *The code is taken from some of the most-starred public GitHub repositories.
  - *Both the code and the dataset for this tutorial will be provided by the instructor.
  - *The provided version may have been *slightly* modified from the original code.

- 3. Environments
  - Prerequisites
    - Linux or macOS
    - Python 3
    - NVIDIA GPU + CUDA and cuDNN
    - PyTorch 1.0
  - GitHub repositories
    - DCGAN: https://github.com/pytorch/examples/tree/master/dcgan
    - CycleGAN: https://github.com/aitorzip/PyTorch-CycleGAN

- 4. Part I: Image Generation using GAN (DCGAN)

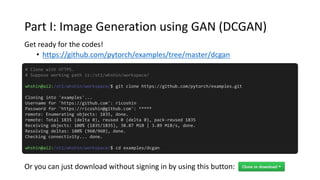

- 5. Part I: Image Generation using GAN (DCGAN). Get ready for the code!
  - https://github.com/pytorch/examples/tree/master/dcgan

  ```
  # Clone with HTTPS.
  # Suppose the working path is /st1/whshin/workspace/
  whshin@ai2:/st1/whshin/workspace/$ git clone https://github.com/pytorch/examples.git
  Cloning into 'examples'...
  Username for 'https://github.com': ricoshin
  Password for 'https://ricoshin@github.com': *****
  remote: Enumerating objects: 1835, done.
  remote: Total 1835 (delta 0), reused 0 (delta 0), pack-reused 1835
  Receiving objects: 100% (1835/1835), 38.87 MiB | 3.89 MiB/s, done.
  Resolving deltas: 100% (960/960), done.
  Checking connectivity... done.
  whshin@ai2:/st1/whshin/workspace/$ cd examples/dcgan
  ```

  Or you can simply download the repository without signing in, using the download button on the GitHub page.

- 6. Part I: Image Generation using GAN (DCGAN). Get ready for the dataset!
  - CelebA dataset (aligned version): this is the one we will use today!
  - LSUN bedroom (optional)

  ```
  whshin@ai2:/st1/whshin/workspace/dcgan$ python datasets/download.py
  ```

  *Downloading can take more than 7 hours.

  NOTE: For Python 3 compatibility, you should modify lsun/download.py, which uses Python 2's urllib2:

  ```python
  # line 10
  import urllib2
  ...
  # line 19
  urllib2.urlopen(url)
  ```

  Replace it with an import that works on both versions:

  ```python
  try:
      # For Python 3.0 and later
      from urllib.request import urlopen
  except ImportError:
      # Fall back to Python 2's urllib2
      from urllib2 import urlopen

  urlopen(url)
  ```

- 7. Part I: Image Generation using GAN (DCGAN). Code explorer: this is what you should see when you are ready.

  ```
  tutorial_gan/
  ├── dcgan/
  │   ├── datasets/
  │   │   ├── celebA/                  # CelebA dataset
  │   │   │   └── Img/
  │   │   │       └── img_align_celeba/
  │   │   │           ├── 000001.jpg
  │   │   │           ├── 000002.jpg
  │   │   │           └── ...
  │   │   └── download.py
  │   ├── output/
  │   │   ├── real_samples.png
  │   │   ├── fake_samples_epoch_000.png
  │   │   ├── ...
  │   │   ├── netG_epoch_0.pth
  │   │   ├── netD_epoch_0.pth
  │   │   └── ...
  │   └── main.py
  └── cyclegan/
  ```

- 8. Part I: Image Generation using GAN (DCGAN). How to run. You can run the program with this command:

  ```
  whshin@ai2:/st1/whshin/workspace/dcgan$ python main.py --dataset folder --dataroot datasets/celebA/Img --outf my_output --cuda
  ```

  You can also inspect the results that were generated beforehand:

  ```
  tutorial_gan/
  └── dcgan/
      └── output/
          ├── real_samples.png
          ├── fake_samples_epoch_000.png
          ├── ...
          ├── netG_epoch_0.pth
          ├── netD_epoch_0.pth
          └── ...
  ```

- 9. Part I: Image Generation using GAN (DCGAN). We only need to look at one file: main.py. Let's briefly scan it!

  ```python
  # import modules
  # parser.add_argument()
  # dataset, dataloader
  def weights_init(m): ...
  class Generator(nn.Module): ...
  netG = Generator(ngpu).to(device)
  netG.apply(weights_init)
  class Discriminator(nn.Module): ...
  netD = Discriminator(ngpu).to(device)
  netD.apply(weights_init)
  # optimizerD, optimizerG
  for epoch in range(opt.niter):
      for i, data in enumerate(dataloader, 0):
          ...  # train!
  ```

- 10. Part I: Image Generation using GAN (DCGAN). Module import

  ```python
  from __future__ import print_function
  import argparse
  import os
  import random
  import torch
  import torch.nn as nn
  import torch.nn.parallel
  import torch.backends.cudnn as cudnn
  import torch.optim as optim
  import torch.utils.data
  import torchvision.datasets as dset
  import torchvision.transforms as transforms
  import torchvision.utils as vutils
  ```

  Note that you also have to install torchvision separately from the core torch package.

- 11. Part I: Image Generation using GAN (DCGAN). Parsers: 17 arguments (15 optional, 2 required: dataset, dataroot)

  ```python
  parser = argparse.ArgumentParser()
  parser.add_argument('--dataset', required=True, help='cifar10 | lsun | mnist | imagenet | folder | lfw | fake')
  parser.add_argument('--dataroot', required=True, help='path to dataset')
  parser.add_argument('--workers', type=int, help='number of data loading workers', default=2)
  parser.add_argument('--batchSize', type=int, default=64, help='input batch size')
  parser.add_argument('--imageSize', type=int, default=64,  # architecture must be changed to vary this
                      help='the height / width of the input image to network')
  parser.add_argument('--nz', type=int, default=100, help='size of the latent z vector')
  parser.add_argument('--ngf', type=int, default=64)  # depth of feature maps carried through G
  parser.add_argument('--ndf', type=int, default=64)  # depth of feature maps propagated through D
  parser.add_argument('--niter', type=int, default=25, help='number of epochs to train for')
  ...
  ```

- 12. Part I: Image Generation using GAN (DCGAN). Parsers: 17 arguments (continued)

  ```python
  ...
  parser.add_argument('--lr', type=float, default=0.0002, help='learning rate, default=0.0002')
  parser.add_argument('--beta1', type=float, default=0.5, help='beta1 for adam. default=0.5')
  parser.add_argument('--cuda', action='store_true', help='enables cuda')
  parser.add_argument('--ngpu', type=int, default=1, help='number of GPUs to use')  # >1 enables data_parallel; the device is chosen by --cuda
  parser.add_argument('--netG', default='', help="path to netG (to continue training)")
  parser.add_argument('--netD', default='', help="path to netD (to continue training)")
  parser.add_argument('--outf', default='output', help='folder to output images and model checkpoints')
  parser.add_argument('--manualSeed', type=int, help='manual seed')
  ```

  Now we can understand what this command means:

  ```
  whshin@ai2:/st1/whshin/workspace/dcgan$ python main.py --dataset folder --dataroot datasets/celebA/Img --outf my_output --cuda
  ```
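To see how the command above is turned into `opt`, here is a minimal sketch that rebuilds only a subset of the DCGAN parser (the arguments the command touches, plus `--batchSize` and `--niter` to show defaults) and parses the command as a list of tokens. The helper name `build_parser` is hypothetical; the argument names and defaults are copied from the slides.

```python
import argparse


def build_parser():
    """Hypothetical helper: a subset of the DCGAN main.py parser."""
    parser = argparse.ArgumentParser()
    parser.add_argument('--dataset', required=True, help='cifar10 | lsun | mnist | imagenet | folder | lfw | fake')
    parser.add_argument('--dataroot', required=True, help='path to dataset')
    parser.add_argument('--batchSize', type=int, default=64, help='input batch size')
    parser.add_argument('--niter', type=int, default=25, help='number of epochs to train for')
    parser.add_argument('--cuda', action='store_true', help='enables cuda')
    parser.add_argument('--outf', default='output', help='folder to output images and model checkpoints')
    return parser


# The tutorial command split into argv tokens (everything after "python main.py").
argv = ['--dataset', 'folder', '--dataroot', 'datasets/celebA/Img',
        '--outf', 'my_output', '--cuda']
opt = build_parser().parse_args(argv)
print(opt.dataset, opt.dataroot, opt.outf, opt.cuda, opt.batchSize, opt.niter)
# folder datasets/celebA/Img my_output True 64 25
```

Arguments that are not given keep their defaults, and a `store_true` flag such as `--cuda` is `False` unless it appears on the command line.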

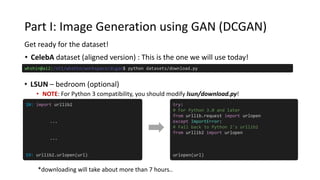

- 13. Part I: Image Generation using GAN (DCGAN). Reproducibility

  Reproducibility can be especially important for GANs because their convergence is unstable. By manually setting the random seed, we can make results reproducible, but only on the same platform and PyTorch release; for example, results are not guaranteed to match between CPU and GPU execution.

  ```python
  # parser.add_argument('--manualSeed', type=int, help='manual seed')
  if opt.manualSeed is None:
      opt.manualSeed = random.randint(1, 10000)
  print("Random Seed: ", opt.manualSeed)
  random.seed(opt.manualSeed)
  torch.manual_seed(opt.manualSeed)
  ```

- 14. Part I: Image Generation using GAN (DCGAN). Reproducibility (supplementary)

  According to the PyTorch docs, when running on the cuDNN backend you also have to make cuDNN deterministic:

  ```python
  # When running on the cuDNN backend, two further options must be set.
  torch.backends.cudnn.deterministic = True
  torch.backends.cudnn.benchmark = False  # main.py sets this to True, which contradicts deterministic results
  ```

  *https://discuss.pytorch.org/t/what-does-torch-backends-cudnn-benchmark-do/5936
  - NOTE: Deterministic mode can have a performance impact, depending on your model.

- 15. Part I: Image Generation using GAN (DCGAN). Reproducibility (supplementary)

  If you (or any libraries you are using) rely on NumPy, seed it as well. There is also a set of functions that set the seed for different device scopes:

  ```python
  numpy.random.seed(opt.manualSeed)
  torch.manual_seed(opt.manualSeed)           # for all devices (both CPU and CUDA); this is the one used in main.py
  torch.cuda.manual_seed(opt.manualSeed)      # for the current GPU (silently ignored when not using a GPU)
  torch.cuda.manual_seed_all(opt.manualSeed)  # for all GPUs (silently ignored when not using a GPU)
  ```
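The seeding steps from the last three slides can be gathered into one function. This is a sketch under assumptions: the name `seed_everything` is hypothetical, and NumPy and PyTorch are seeded only if they are installed, so the example also runs in a plain Python environment. The check at the end uses Python's own `random` module, which is seeded in every case.

```python
import random


def seed_everything(seed):
    """Hypothetical helper: seed every RNG the training script may use."""
    random.seed(seed)
    try:
        import numpy
        numpy.random.seed(seed)
    except ImportError:  # NumPy not installed: nothing to seed
        pass
    try:
        import torch
        torch.manual_seed(seed)           # for all devices (both CPU and CUDA), as on the slide
        torch.cuda.manual_seed_all(seed)  # all GPUs; silently ignored without CUDA
        torch.backends.cudnn.deterministic = True
        torch.backends.cudnn.benchmark = False
    except ImportError:  # PyTorch not installed: nothing to seed
        pass


seed_everything(1234)
first = [random.random() for _ in range(3)]
seed_everything(1234)
second = [random.random() for _ in range(3)]
print(first == second)  # True
```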

- 16. Part I: Image Generation using GAN (DCGAN). Data loading and processing

  Next, we get the data ready. main.py treats each dataset differently according to its name (nc is the number of image channels):

  ```python
  if opt.dataset in ['imagenet', 'folder', 'lfw']:
      dataset = ...
      nc = 3
  elif opt.dataset == 'lsun':
      dataset = ...
      nc = 3
  elif opt.dataset == 'cifar10':
      dataset = ...
      nc = 3
  elif opt.dataset == 'mnist':
      dataset = ...
      nc = 1
  elif opt.dataset == 'fake':
      dataset = ...
      nc = 3
  ```

  So the next question is: how can we handle these datasets more efficiently?

- 17. Part I: Image Generation using GAN (DCGAN). Data loading and processing

  Let's see how to load and preprocess data using the tools the PyTorch package provides:
  - torch.utils.data.Dataset: an abstract class representing a dataset.
    - torchvision.datasets: provided common datasets, e.g. MNIST, CIFAR10.
    - MyDataset: a customized dataset (subclass of Dataset).
  - Transform class: a callable class for preprocessing, passed to a dataset as an optional argument.
    - torchvision.transforms.Compose: a composed callable transform class that chains several transforms.
  - torch.utils.data.DataLoader: single- or multi-process iterators over the dataset.
    - torch.utils.data.Sampler: base class for samplers (optional argument).
    - Collate function: function for mini-batching (optional argument).

- 18. Part I: Image Generation using GAN (DCGAN). Data loading and processing: Dataset

  torch.utils.data.Dataset is an abstract class representing a dataset, and using it makes our data easier to handle. Your custom dataset should inherit from Dataset and override the following methods:
  - `__len__`, so that `len(dataset)` returns the size of the dataset.
  - `__getitem__`, to support indexing so that `dataset[i]` returns the i-th sample.

  We can make a custom dataset by subclassing this abstract class:

  ```python
  from torch.utils.data import Dataset

  class MyDataset(Dataset):
      ...

  dataset = MyDataset(...)
  ```

  Or you can just use the torchvision package, which provides some common datasets:

  ```python
  import torchvision.datasets as datasets

  # All datasets in this package are subclasses of torch.utils.data.Dataset
  dataset = datasets.MNIST(root=opt.dataroot)
  ```
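As a concrete instance of the two required methods, here is a toy dataset. `SquaresDataset` is a hypothetical example, not part of the tutorial code. It subclasses the real `torch.utils.data.Dataset` when PyTorch is installed and falls back to `object` otherwise, so the sketch runs either way.

```python
try:
    from torch.utils.data import Dataset  # the real abstract base class
except ImportError:  # fallback so the sketch also runs without PyTorch
    Dataset = object


class SquaresDataset(Dataset):
    """Hypothetical toy dataset: sample i is the pair (i, i * i)."""

    def __init__(self, n):
        self.n = n

    def __len__(self):
        # len(dataset) returns the size of the dataset
        return self.n

    def __getitem__(self, i):
        # dataset[i] returns the i-th sample; out-of-range indices raise IndexError
        if not 0 <= i < self.n:
            raise IndexError(i)
        return i, i * i


dataset = SquaresDataset(5)
print(len(dataset), dataset[3])  # 5 (3, 9)
```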

- 19. Part I: Image Generation using GAN (DCGAN). Data loading and processing: Transforms

  It is recommended to use a callable transform class to preprocess the data:

  ```python
  # Make a custom transform class (a plain class; it does not need to subclass Dataset)
  class Transform(object):
      def __init__(self, *args, **kwargs):
          ...

      def __call__(self, sample):
          ...

  transform = Transform(*init_args_list)
  transformed_sample = transform(sample)
  ```

  or load the transforms that torchvision provides for PIL images:

  ```python
  import torchvision.transforms as transforms

  transform = transforms.CenterCrop(*args)
  # transform = transforms.ColorJitter(*args)
  # transform = transforms.Grayscale(*args)
  transformed_sample = transform(sample)
  ```

  These callable transforms can be merged into a single transform:

  ```python
  composed_transform = transforms.Compose([
      Transform_1(*init_arg_1),
      Transform_2(*init_arg_2),
      ...,
      Transform_n(*init_arg_n),
  ])
  transformed_sample = composed_transform(sample)
  ```

  Finally, a (composed) transform can be passed as an argument to a dataset:

  ```python
  dataset = datasets.MNIST(root=opt.dataroot, transform=composed_transform)
  ```
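The callable-class pattern can be tried without any images. The sketch below is a simplified stand-in for `transforms.Compose`, written in plain Python and operating on lists of numbers; `AddOffset`, `Scale` and `TinyCompose` are hypothetical names, not torchvision classes.

```python
class AddOffset(object):
    """Hypothetical transform: adds a constant to every value."""

    def __init__(self, offset):
        self.offset = offset  # configuration is stored once, at construction time

    def __call__(self, sample):
        return [v + self.offset for v in sample]


class Scale(object):
    """Hypothetical transform: multiplies every value by a constant."""

    def __init__(self, factor):
        self.factor = factor

    def __call__(self, sample):
        return [v * self.factor for v in sample]


class TinyCompose(object):
    """Simplified stand-in for transforms.Compose: apply transforms in order."""

    def __init__(self, transforms):
        self.transforms = list(transforms)

    def __call__(self, sample):
        for t in self.transforms:
            sample = t(sample)
        return sample


composed = TinyCompose([AddOffset(-0.5), Scale(2.0)])
print(composed([0.0, 0.5, 1.0]))  # [-1.0, 0.0, 1.0]
```

The order matters: `TinyCompose([Scale(2.0), AddOffset(-0.5)])` maps `1.0` to `1.5` instead of `1.0`.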

- 20. Part I: Image Generation using GAN (DCGAN). Data loading and processing: DataLoader

  Now you can iterate through the processed dataset with a simple for loop:

  ```python
  dataset = datasets.MNIST(root=opt.dataroot, transform=composed_transform)
  for i in range(len(dataset)):
      sample = dataset[i]
      do_something(sample)
      ...
  ```

  A more sophisticated way is to use torch.utils.data.DataLoader, an iterator that helps with batching, shuffling, and multiprocess loading:

  ```python
  from torch.utils.data import DataLoader

  dataset = datasets.MNIST(root=opt.dataroot, transform=composed_transform)
  dataloader = DataLoader(dataset, batch_size=opt.batchSize,
                          shuffle=True, num_workers=int(opt.workers))
  for i, batch in enumerate(dataloader):
      do_something(batch)
      ...
  ```
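To make "batching and shuffling" concrete, here is a single-process sketch of what the loop over a `DataLoader` yields in terms of sample order. `iterate_minibatches` is a hypothetical helper; unlike the real `DataLoader`, it neither collates samples into tensors nor uses worker processes.

```python
import random


def iterate_minibatches(dataset, batch_size, shuffle=True, seed=None):
    """Hypothetical sketch of DataLoader's shuffle + batch behaviour (single process)."""
    indices = list(range(len(dataset)))
    if shuffle:
        random.Random(seed).shuffle(indices)  # a new order on every call unless seeded
    for start in range(0, len(indices), batch_size):
        # as with DataLoader's default drop_last=False, the last batch may be smaller
        yield [dataset[i] for i in indices[start:start + batch_size]]


data = list(range(10))
batches = list(iterate_minibatches(data, batch_size=4, seed=0))
print([len(b) for b in batches])  # [4, 4, 2]
```

Every sample appears exactly once per pass; only the grouping into mini-batches changes with the shuffle.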

- 21. Part I: Image Generation using GAN (DCGAN). Data loading and processing: ImageFolder

  We will use torchvision.datasets.ImageFolder, a generic data loader for images arranged in subdirectories:

  ```python
  import torchvision.datasets as datasets

  dataset = datasets.ImageFolder(root=dataroot, transform=composed_transforms)
  ```

  CLASS torchvision.datasets.ImageFolder(root, transform=None, target_transform=None, loader=<function default_loader>) returns (sample, target) pairs. It is a generic data loader where the images are arranged this way:

  ```
  root/class_x/001.ext
  root/class_x/002.ext
  ...
  root/class_y/aaa.ext
  root/class_y/bbb.ext
  ...
  ```

  Let's see what we are going to do with our CelebA dataset.
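The mapping from folders to targets can be sketched in plain Python. This is a simplified, hypothetical re-implementation (`scan_image_folder`, with a reduced extension list and no recursion into sub-subfolders), not torchvision's code; it follows the same idea of numbering the sorted class-folder names. Because CelebA sits in a single folder, `img_align_celeba`, every target is `0`, which is harmless for DCGAN since the training loop only uses the images.

```python
import os
import tempfile

IMG_EXTENSIONS = ('.jpg', '.jpeg', '.png')  # assumption: a reduced extension list


def scan_image_folder(root):
    """Simplified sketch of how ImageFolder builds (path, class_index) pairs."""
    classes = sorted(d for d in os.listdir(root)
                     if os.path.isdir(os.path.join(root, d)))
    class_to_idx = {name: i for i, name in enumerate(classes)}
    samples = []
    for name in classes:
        class_dir = os.path.join(root, name)
        for fname in sorted(os.listdir(class_dir)):
            if fname.lower().endswith(IMG_EXTENSIONS):
                samples.append((os.path.join(class_dir, fname), class_to_idx[name]))
    return classes, samples


# Mimic datasets/celebA/Img with two empty placeholder files.
with tempfile.TemporaryDirectory() as root:
    os.makedirs(os.path.join(root, 'img_align_celeba'))
    for name in ('000001.jpg', '000002.jpg'):
        open(os.path.join(root, 'img_align_celeba', name), 'w').close()
    classes, samples = scan_image_folder(root)
    print(classes, [target for _, target in samples])  # ['img_align_celeba'] [0, 0]
```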

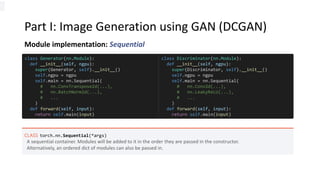

- 22. Part I: Image Generation using GAN (DCGAN). Module implementation: Sequential

  CLASS torch.nn.Sequential(*args): a sequential container. Modules will be added to it in the order they are passed in the constructor. Alternatively, an ordered dict of modules can also be passed in.

  ```python
  import torch.nn as nn


  class Generator(nn.Module):
      def __init__(self, ngpu):
          super(Generator, self).__init__()
          self.ngpu = ngpu
          self.main = nn.Sequential(
              # nn.ConvTranspose2d(...),
              # nn.BatchNorm2d(...),
              # ...
          )

      def forward(self, input):
          return self.main(input)


  class Discriminator(nn.Module):
      def __init__(self, ngpu):
          super(Discriminator, self).__init__()
          self.ngpu = ngpu
          self.main = nn.Sequential(
              # nn.Conv2d(...),
              # nn.LeakyReLU(...),
              # ...
          )

      def forward(self, input):
          return self.main(input)
  ```

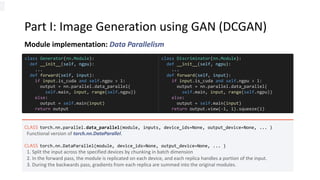

- 23. Part I: Image Generation using GAN (DCGAN). Module implementation: Data parallelism

  FUNCTION torch.nn.parallel.data_parallel(module, inputs, device_ids=None, output_device=None, ...): functional version of torch.nn.DataParallel.

  CLASS torch.nn.DataParallel(module, device_ids=None, output_device=None, ...):
  1. Splits the input across the specified devices by chunking in the batch dimension.
  2. In the forward pass, the module is replicated on each device, and each replica handles a portion of the input.
  3. During the backward pass, gradients from each replica are summed into the original module.

  ```python
  class Generator(nn.Module):
      def __init__(self, ngpu):
          ...

      def forward(self, input):
          if input.is_cuda and self.ngpu > 1:
              output = nn.parallel.data_parallel(self.main, input, range(self.ngpu))
          else:
              output = self.main(input)
          return output


  class Discriminator(nn.Module):
      def __init__(self, ngpu):
          ...

      def forward(self, input):
          if input.is_cuda and self.ngpu > 1:
              output = nn.parallel.data_parallel(self.main, input, range(self.ngpu))
          else:
              output = self.main(input)
          # flatten D's N x 1 x 1 x 1 output into a vector of N probabilities
          return output.view(-1, 1).squeeze(1)
  ```

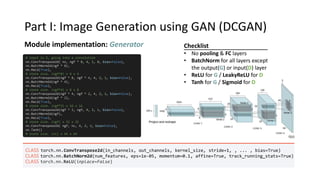

- 24. Part I: Image Generation using GAN (DCGAN). Module implementation: Generator

  ```python
  # input is Z, going into a convolution
  nn.ConvTranspose2d(nz, ngf * 8, 4, 1, 0, bias=False),
  nn.BatchNorm2d(ngf * 8),
  nn.ReLU(True),
  # state size. (ngf*8) x 4 x 4
  nn.ConvTranspose2d(ngf * 8, ngf * 4, 4, 2, 1, bias=False),
  nn.BatchNorm2d(ngf * 4),
  nn.ReLU(True),
  # state size. (ngf*4) x 8 x 8
  nn.ConvTranspose2d(ngf * 4, ngf * 2, 4, 2, 1, bias=False),
  nn.BatchNorm2d(ngf * 2),
  nn.ReLU(True),
  # state size. (ngf*2) x 16 x 16
  nn.ConvTranspose2d(ngf * 2, ngf, 4, 2, 1, bias=False),
  nn.BatchNorm2d(ngf),
  nn.ReLU(True),
  # state size. (ngf) x 32 x 32
  nn.ConvTranspose2d(ngf, nc, 4, 2, 1, bias=False),
  nn.Tanh()
  # state size. (nc) x 64 x 64
  ```

  - CLASS torch.nn.ConvTranspose2d(in_channels, out_channels, kernel_size, stride=1, ..., bias=True)
  - CLASS torch.nn.BatchNorm2d(num_features, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  - CLASS torch.nn.ReLU(inplace=False)

  Checklist
  - No pooling or fully connected layers
  - BatchNorm in all layers except the output layer (G) and the input layer (D)
  - ReLU for G / LeakyReLU for D
  - Tanh output for G / Sigmoid output for D
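The "state size" comments can be checked with the output-size formula from the PyTorch `ConvTranspose2d` documentation, H_out = (H_in - 1) * stride - 2 * padding + kernel_size + output_padding (with dilation 1). The short sketch below, with a hypothetical helper name, traces the five generator layers from the 1 x 1 latent input; it also shows why `--imageSize` cannot change without changing the architecture.

```python
def conv_transpose2d_out(size, kernel_size, stride=1, padding=0, output_padding=0):
    """Spatial output size of nn.ConvTranspose2d with dilation=1 (PyTorch docs formula)."""
    return (size - 1) * stride - 2 * padding + kernel_size + output_padding


# (kernel_size, stride, padding) of the five DCGAN generator layers above
layers = [(4, 1, 0), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1)]
size = 1  # z enters as an nz x 1 x 1 tensor
sizes = [size]
for k, s, p in layers:
    size = conv_transpose2d_out(size, k, s, p)
    sizes.append(size)
print(sizes)  # [1, 4, 8, 16, 32, 64]
```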

- 25. Part I: Image Generation using GAN (DCGAN). Module implementation: Discriminator

  ```python
  # input is (nc) x 64 x 64
  nn.Conv2d(nc, ndf, 4, 2, 1, bias=False),
  nn.LeakyReLU(0.2, inplace=True),
  # state size. (ndf) x 32 x 32
  nn.Conv2d(ndf, ndf * 2, 4, 2, 1, bias=False),
  nn.BatchNorm2d(ndf * 2),
  nn.LeakyReLU(0.2, inplace=True),
  # state size. (ndf*2) x 16 x 16
  nn.Conv2d(ndf * 2, ndf * 4, 4, 2, 1, bias=False),
  nn.BatchNorm2d(ndf * 4),
  nn.LeakyReLU(0.2, inplace=True),
  # state size. (ndf*4) x 8 x 8
  nn.Conv2d(ndf * 4, ndf * 8, 4, 2, 1, bias=False),
  nn.BatchNorm2d(ndf * 8),
  nn.LeakyReLU(0.2, inplace=True),
  # state size. (ndf*8) x 4 x 4
  nn.Conv2d(ndf * 8, 1, 4, 1, 0, bias=False),
  nn.Sigmoid()
  # state size. 1 x 1 x 1, i.e. the probability D(x)
  ```

  - CLASS torch.nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, ..., bias=True)
  - CLASS torch.nn.BatchNorm2d(num_features, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  - CLASS torch.nn.LeakyReLU(negative_slope=0.01, inplace=False)

  Checklist
  - No pooling or fully connected layers
  - BatchNorm in all layers except the output layer (G) and the input layer (D)
  - ReLU for G / LeakyReLU for D
  - Tanh output for G / Sigmoid output for D
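The discriminator's "state size" comments follow from the `Conv2d` output-size formula in the PyTorch docs, H_out = floor((H_in + 2 * padding - kernel_size) / stride) + 1 (with dilation 1). This sketch, using a hypothetical helper, traces a 64 x 64 image down to the single output value D(x).

```python
def conv2d_out(size, kernel_size, stride=1, padding=0):
    """Spatial output size of nn.Conv2d with dilation=1 (PyTorch docs formula)."""
    return (size + 2 * padding - kernel_size) // stride + 1


# (kernel_size, stride, padding) of the five DCGAN discriminator layers above
layers = [(4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 1, 0)]
size = 64
sizes = [size]
for k, s, p in layers:
    size = conv2d_out(size, k, s, p)
    sizes.append(size)
print(sizes)  # [64, 32, 16, 8, 4, 1]
```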

- 26. Part I: Image Generation using GAN (DCGAN). Weight initialization

  METHOD torch.nn.Module.apply(fn): applies fn recursively to every submodule (as returned by .children()) as well as self. Typical use includes initializing the parameters of a model (see also torch.nn.init).

  ```python
  # custom weights initialization called on netG and netD
  def weights_init(m):
      classname = m.__class__.__name__
      if classname.find('Conv') != -1:
          m.weight.data.normal_(0.0, 0.02)
      elif classname.find('BatchNorm') != -1:
          m.weight.data.normal_(1.0, 0.02)  # BatchNorm weight (scale) ~ N(1, 0.02)
          m.bias.data.fill_(0)              # BatchNorm bias (shift) = 0

  netG = Generator(ngpu).to(device)
  netG.apply(weights_init)
  netD = Discriminator(ngpu).to(device)
  netD.apply(weights_init)
  ```

  From the DCGAN paper: "All weights were initialized from a zero-centered Normal distribution with standard deviation 0.02." For Batch Normalization layers, the weight is drawn around 1 instead, and the bias is set to 0.

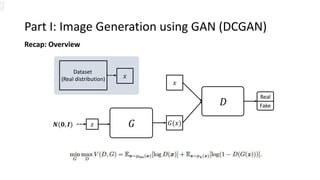

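- 27. Part I: Image Generation using GAN (DCGAN). Recap: Overview

  [Figure: noise z ~ N(0, I) is fed to the generator G, producing fake samples G(z); real samples x come from the dataset (real distribution); the discriminator D classifies each input as Real or Fake.]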

- 28. Part I: Image Generation using GAN (DCGAN). Recap: Training of Discriminator (Real → Real)

  [Figure: D is trained to classify real samples x from the dataset as Real.]

- 29. Part I: Image Generation using GAN (DCGAN). Recap: Training of Discriminator (Fake → Fake)

  [Figure: D is trained to classify generated samples G(z), with z ~ N(0, I), as Fake.]

- 30. Part I: Image Generation using GAN (DCGAN). Recap: Training of Discriminator (Fake → Fake)

  We will follow some of the best practices listed in https://github.com/soumith/ganhacks. Constructing separate mini-batches for real and fake samples (each mini-batch contains only real or only generated images) is known to be the better practice.

- 31. Part I: Image Generation using GAN (DCGAN). Recap: Training of Generator (Fake → Real)

  [Figure: G is trained so that D classifies its samples G(z), with z ~ N(0, I), as Real.]

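- 32. Part I: Image Generation using GAN (DCGAN). Loss function and optimization

  Discriminator: maximize the probability of D predicting that real data is genuine, plus the probability of D predicting that fake data is NOT genuine:

  $$\max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim \mathcal{N}(0, I)}\big[\log\big(1 - D(G(z))\big)\big]$$

  Generator: in theory, minimize the probability of D predicting that fake data is NOT genuine. Early in training, however, D rejects G(z) with high confidence, and because D's output is a sigmoid, the gradient of log(1 - D(G(z))) with respect to D's logit vanishes (see the plots of log x and log sigmoid(x)). In practice, G therefore maximizes the probability of D predicting that fake data is genuine:

  $$\min_G \; \mathbb{E}_{z \sim \mathcal{N}(0, I)}\big[\log\big(1 - D(G(z))\big)\big] \quad\longrightarrow\quad \max_G \; \mathbb{E}_{z \sim \mathcal{N}(0, I)}\big[\log D(G(z))\big]$$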

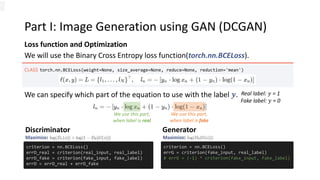

- 33. Part I: Image Generation using GAN (DCGAN). Loss function and optimization

  CLASS torch.nn.BCELoss(weight=None, size_average=None, reduce=None, reduction='mean')

  We will use the binary cross-entropy loss (torch.nn.BCELoss), where x is D's output probability and y is the label (real label: y = 1, fake label: y = 0):

  $$\ell(x, y) = -\big[\, y \log x + (1 - y) \log(1 - x) \,\big]$$

  The label y selects which part of the equation is used: when the label is real, only -log x remains; when the label is fake, only -log(1 - x) remains.

  Discriminator (maximize log D(x) + log(1 - D(G(z)))):

  ```python
  criterion = nn.BCELoss()
  errD_real = criterion(output_real, real_label)  # -log D(x)
  errD_fake = criterion(output_fake, fake_label)  # -log(1 - D(G(z)))
  errD = errD_real + errD_fake
  ```

  Generator (maximize log D(G(z))):

  ```python
  criterion = nn.BCELoss()
  errG = criterion(output_fake, real_label)  # -log D(G(z)), the non-saturating loss
  # errG = (-1) * criterion(output_fake, fake_label)  # saturating form: minimizes log(1 - D(G(z)))
  ```
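The label trick and the gradient-vanishing argument can both be checked numerically. In this plain-Python sketch, `bce` and `sig` are hypothetical helpers, and the D outputs 0.9 and 0.2 and the logit -6.0 are made-up values. The last two lines compare the derivatives of log(1 - sigmoid(a)) and log(sigmoid(a)) with respect to D's logit a, which are -sigmoid(a) and 1 - sigmoid(a).

```python
import math


def bce(p, y):
    """Binary cross-entropy for one prediction p in (0, 1) and label y in {0, 1}."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def sig(a):
    """Logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-a))


d_real, d_fake = 0.9, 0.2               # hypothetical D outputs for one real and one fake image
errD = bce(d_real, 1) + bce(d_fake, 0)  # -log D(x) - log(1 - D(G(z)))
errG = bce(d_fake, 1)                   # -log D(G(z)), the non-saturating generator loss
print(round(errD, 4), round(errG, 4))   # 0.3285 1.6094

a = -6.0                                # hypothetical logit: D confidently rejects the fake
grad_saturating = -sig(a)               # d/da log(1 - sigmoid(a)): close to 0
grad_non_saturating = 1.0 - sig(a)      # d/da log(sigmoid(a)): close to 1
```

When D confidently rejects a fake, the saturating objective gives G almost no gradient, while the non-saturating one still does.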

- 34. Part I: Image Generation using GAN (DCGAN). Loss function

  ```python
  netD = Discriminator(ngpu).to(device)
  netG = Generator(ngpu).to(device)
  netD.apply(weights_init)
  netG.apply(weights_init)
  optimD = optim.Adam(netD.parameters(), ...)
  optimG = optim.Adam(netG.parameters(), ...)
  criterion = nn.BCELoss()

  for epoch in range(opt.niter):
      for i, data in enumerate(dataloader, 0):
          real = data[0].to(device)  # ImageFolder yields (images, targets); targets are unused
          batch_size = real.size(0)
          real_label = torch.full((batch_size,), 1.0, device=device)  # float labels for BCELoss
          fake_label = torch.full((batch_size,), 0.0, device=device)

          #############################################
          # train D: max log(D(x)) + log(1 - D(G(z)))
          #############################################
          netD.zero_grad()  # clear gradients from the previous iteration
          # train with real
          output = netD(real)
          errD_real = criterion(output, real_label)
          errD_real.backward()
          # train with fake
          fake = netG(torch.randn(batch_size, nz, 1, 1, device=device))
          output = netD(fake.detach())  # detach: no gradient flows into G here
          errD_fake = criterion(output, fake_label)
          errD_fake.backward()
          # update network
          optimD.step()

          #############################################
          # train G: maximize log(D(G(z)))
          #############################################
          netG.zero_grad()
          fake = netG(torch.randn(batch_size, nz, 1, 1, device=device))
          output = netD(fake)
          errG = criterion(output, real_label)
          errG.backward()
          # update network
          optimG.step()
  ```

- 35. Part I: Image Generation using GAN (DCGAN). Result. We can check the qualitative results under ./output (default): real samples vs. fake samples (after 25 epochs).

- 36. Part II: Image Translation using GAN (CycleGAN)

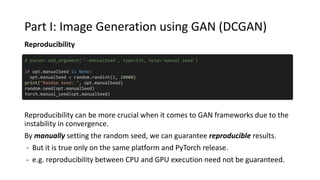

- 37. Part II: Image Translation using GAN (CycleGAN). Get ready for the code:
  - https://github.com/aitorzip/PyTorch-CycleGAN

  ```
  # Clone with HTTPS.
  # Suppose the working path is /st1/whshin/workspace/
  whshin@ai2:/st1/whshin/workspace/$ git clone https://github.com/aitorzip/PyTorch-CycleGAN.git
  Cloning into 'PyTorch-CycleGAN'...
  **omitted**
  Checking connectivity... done.
  # Rename just for brevity.
  whshin@ai2:/st1/whshin/workspace/$ mv PyTorch-CycleGAN/ cyclegan
  whshin@ai2:/st1/whshin/workspace/$ cd cyclegan
  ```

  And the dataset:
  - horse2zebra: this is the one we will use today!

  ```
  whshin@ai2:/st1/whshin/workspace/cyclegan$ ./download_dataset horse2zebra
  ```

- 38. Part II: Image Translation using GAN (CycleGAN). Code explorer: this is what you should see when you are ready.

  ```
  tutorial_gan/
  ├── dcgan/
  └── cyclegan/
      ├── datasets/
      │   └── horse2zebra/
      │       ├── test/
      │       │   ├── A/    n02381460_1000.jpg, ...
      │       │   └── B/    n02391049_1000.jpg, ...
      │       └── train/
      │           ├── A/    n02381460_1001.jpg, ...
      │           └── B/    n02391049_10007.jpg, ...
      ├── output/           fake_A.png, fake_B.png, loss_D.png, ...
      │   ├── ABA/          A_0001.png, AB_0001.png, ABA_0001.png, ...
      │   └── BAB/          B_0001.png, BA_0001.png, BAB_0001.png, ...
      ├── datasets.py
      ├── models.py         # Residual block, Generator, Discriminator
      ├── test.py
      ├── train.py
      └── utils.py          # Logger, ReplayBuffer, LambdaLR, weight initializer
  ```

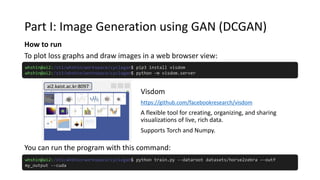

- 39. Part II: Image Translation using GAN (CycleGAN). How to run. You can run the program with this command:

  ```
  whshin@ai2:/st1/whshin/workspace/cyclegan$ python train.py --dataroot datasets/horse2zebra --outf my_output --cuda
  ```

  To plot loss graphs and draw images in a web browser, install Visdom and start its server before training, then open ai2.kaist.ac.kr:8097:

  ```
  whshin@ai2:/st1/whshin/workspace/cyclegan$ pip3 install visdom
  whshin@ai2:/st1/whshin/workspace/cyclegan$ python -m visdom.server
  ```

  Visdom (https://github.com/facebookresearch/visdom): a flexible tool for creating, organizing, and sharing visualizations of live, rich data. Supports Torch and NumPy.

- 40. Part II: Image Translation using GAN (CycleGAN). Let's focus primarily on train.py, and check the other modules whenever they are actually called from this code.

  ```python
  # import modules
  # parser.add_argument()

  # Networks (from models.py)
  netG_A2B, netG_B2A, netD_A, netD_B
  # Losses
  criterion_GAN, criterion_cycle, criterion_identity
  # Optimizers
  optimizer_G, optimizer_D_A, optimizer_D_B
  # LR schedulers & replay buffers (from utils.py)
  lr_scheduler_G, lr_scheduler_D_A, lr_scheduler_D_B
  fake_A_buffer, fake_B_buffer
  # Dataset loader
  dataloader
  # Logger (from utils.py)
  logger

  for epoch in range(opt.epoch, opt.n_epochs):
      for i, batch in enumerate(dataloader):
          ###### Generators A2B and B2A ######
          # 1. Identity loss
          # 2. GAN loss
          # 3. Cycle loss
          # 4. Total loss
          ###### Discriminator A ######
          # 1. Real loss
          # 2. Fake loss
          # 3. Total loss
          ###### Discriminator B ######
          # 1. Real loss
          # 2. Fake loss
          # 3. Total loss
      # Update learning rate
      # Save model checkpoints
  ```

- 41. Part II: Image Translation using GAN (CycleGAN). Module import (train.py)

  ```python
  import argparse
  import itertools

  import torchvision.transforms as transforms
  from torch.utils.data import DataLoader
  from torch.autograd import Variable
  from PIL import Image
  import torch

  from models import Generator
  from models import Discriminator
  from utils import ReplayBuffer
  from utils import LambdaLR
  from utils import Logger
  from utils import weights_init_normal
  from datasets import ImageDataset
  ```

- 42. Part II: Image Translation using GAN (CycleGAN). Parsers (train.py): 12 arguments

  ```python
  parser = argparse.ArgumentParser()
  parser.add_argument('--epoch', type=int, default=0, help='starting epoch')
  parser.add_argument('--n_epochs', type=int, default=200, help='number of epochs of training')
  parser.add_argument('--batchSize', type=int, default=1, help='size of the batches')
  parser.add_argument('--dataroot', type=str, default='horse2zebra/', help='root directory of the dataset')
  parser.add_argument('--lr', type=float, default=0.0002, help='initial learning rate')
  parser.add_argument('--decay_epoch', type=int, default=100, help='epoch to start linearly decaying the learning rate to 0')
  parser.add_argument('--size', type=int, default=256, help='size of the data crop (squared assumed)')
  parser.add_argument('--input_nc', type=int, default=3, help='number of channels of input data')
  parser.add_argument('--output_nc', type=int, default=3, help='number of channels of output data')
  parser.add_argument('--cuda', action='store_true', help='use GPU computation')
  parser.add_argument('--n_cpu', type=int, default=8, help='number of cpu threads to use during batch generation')
  parser.add_argument('--outf', default='output', help='folder to output images and model checkpoints')
  ```

- 43. Part II: Image Translation using GAN (CycleGAN). Networks (train.py)

  ```python
  # Networks
  netG_A2B = Generator(opt.input_nc, opt.output_nc)
  netG_B2A = Generator(opt.output_nc, opt.input_nc)
  netD_A = Discriminator(opt.input_nc)
  netD_B = Discriminator(opt.output_nc)

  if opt.cuda:
      netG_A2B.cuda()
      netG_B2A.cuda()
      netD_A.cuda()
      netD_B.cuda()

  netG_A2B.apply(weights_init_normal)
  netG_B2A.apply(weights_init_normal)
  netD_A.apply(weights_init_normal)
  netD_B.apply(weights_init_normal)
  ```

  weights_init_normal() is essentially the same function as the weights_init() we used for DCGAN.

- 44. Part II: Image Translation using GAN (CycleGAN) Networks Overview

- 45. Part II: Image Translation using GAN (CycleGAN). Networks overview: training the generators (A2B & B2A)

  [Figure: real images pass through the generators and are trained with a cycle loss (L1), an LSGAN loss (MSE), and an optional identity loss (L1).]

- 46. Part II: Image Translation using GAN (CycleGAN). Networks overview: training discriminator B

  [Figure: D_B scores real images and fake images (already generated during G training) with the LSGAN loss (MSE).]

- 47. Part II: Image Translation using GAN (CycleGAN). Networks: Generator
  1. Initial layer
  2. Downsampling
  3. Residual blocks (x9)
  4. Upsampling
  5. Output layer

- 48. Part II: Image Translation using GAN (CycleGAN). Networks (models.py): Generator

  ```python
  import torch.nn as nn


  class Generator(nn.Module):
      def __init__(self, input_nc, output_nc, n_residual_blocks=9):
          super(Generator, self).__init__()

          # Initial convolution block
          model = [nn.ReflectionPad2d(3),
                   nn.Conv2d(input_nc, 64, 7),
                   nn.InstanceNorm2d(64),
                   nn.ReLU(inplace=True)]

          # Downsampling
          in_features = 64
          out_features = in_features * 2
          for _ in range(2):
              model += [nn.Conv2d(in_features, out_features, kernel_size=3, stride=2, padding=1),
                        nn.InstanceNorm2d(out_features),
                        nn.ReLU(inplace=True)]
              in_features = out_features
              out_features = in_features * 2

          # Residual blocks
          for _ in range(n_residual_blocks):
              model += [ResidualBlock(in_features)]

          # Upsampling
          out_features = in_features // 2
          for _ in range(2):
              model += [nn.ConvTranspose2d(in_features, out_features, kernel_size=3, stride=2,
                                           padding=1, output_padding=1),
                        nn.InstanceNorm2d(out_features),
                        nn.ReLU(inplace=True)]
              in_features = out_features
              out_features = in_features // 2

          # Output layer
          model += [nn.ReflectionPad2d(3),
                    nn.Conv2d(64, output_nc, 7),
                    nn.Tanh()]

          self.model = nn.Sequential(*model)

      def forward(self, x):
          return self.model(x)
  ```
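To see what each stage of this generator does to a 256 x 256 input, the sketch below traces (channels, height) through the layers using the `Conv2d` and `ConvTranspose2d` output-size formulas from the PyTorch docs. The helper names are hypothetical, and the reflection padding is modelled simply as adding its width on both sides.

```python
def conv2d_out(size, k, s=1, p=0):
    """nn.Conv2d output size (dilation=1)."""
    return (size + 2 * p - k) // s + 1


def conv_transpose2d_out(size, k, s=1, p=0, op=0):
    """nn.ConvTranspose2d output size (dilation=1)."""
    return (size - 1) * s - 2 * p + k + op


def generator_shapes(size=256, input_nc=3, output_nc=3, n_residual_blocks=9):
    """Trace (stage, channels, height) through the CycleGAN Generator above."""
    trace = [('input', input_nc, size)]
    size, ch = conv2d_out(size + 2 * 3, 7), 64      # ReflectionPad2d(3) + Conv2d(k=7)
    trace.append(('initial', ch, size))
    for _ in range(2):                               # stride-2 convs halve the size
        size, ch = conv2d_out(size, 3, 2, 1), ch * 2
        trace.append(('down', ch, size))
    for _ in range(2 * n_residual_blocks):           # ReflectionPad2d(1) + 3x3 conv keeps the size
        size = conv2d_out(size + 2, 3)
    trace.append(('residual x%d' % n_residual_blocks, ch, size))
    for _ in range(2):                               # output_padding=1 makes the size exactly double
        size, ch = conv_transpose2d_out(size, 3, 2, 1, 1), ch // 2
        trace.append(('up', ch, size))
    size = conv2d_out(size + 2 * 3, 7)               # ReflectionPad2d(3) + Conv2d(k=7)
    trace.append(('output', output_nc, size))
    return trace


for stage in generator_shapes():
    print(stage)
```

The output has the same spatial size as the input, which is what an image-to-image translator needs.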

- 49. Part II: Image Translation using GAN (CycleGAN). Networks (models.py): Generator (residual block)

  ```python
  class ResidualBlock(nn.Module):
      def __init__(self, in_features):
          super(ResidualBlock, self).__init__()

          conv_block = [nn.ReflectionPad2d(1),
                        nn.Conv2d(in_features, in_features, kernel_size=3),
                        nn.InstanceNorm2d(in_features),
                        nn.ReLU(inplace=True),
                        nn.ReflectionPad2d(1),
                        nn.Conv2d(in_features, in_features, kernel_size=3),
                        nn.InstanceNorm2d(in_features)]

          self.conv_block = nn.Sequential(*conv_block)

      def forward(self, x):
          return x + self.conv_block(x)
  ```

- 50. Part II: Image Translation using GAN (CycleGAN). Networks: Discriminator (from PatchGAN)

  The correlation between pixels decreases as the distance between them grows. PatchGAN therefore discriminates real from fake on overlapping patches of a fixed size rather than on the whole image.

- 51. Part II: Image Translation using GAN (CycleGAN). Networks: Discriminator (from PatchGAN)

  A patch-level discriminator has:
  1. Fewer parameters.
  2. A smaller computational cost.
  3. A flexible input size.

- 52. Part II: Image Translation using GAN (CycleGAN). Networks (models.py): Discriminator (from PatchGAN)

  ```python
  import torch.nn as nn
  import torch.nn.functional as F


  class Discriminator(nn.Module):
      def __init__(self, input_nc):
          super(Discriminator, self).__init__()

          # A bunch of convolutions one after another
          model = [nn.Conv2d(input_nc, 64, 4, stride=2, padding=1),
                   nn.LeakyReLU(0.2, inplace=True)]

          model += [nn.Conv2d(64, 128, 4, stride=2, padding=1),
                    nn.InstanceNorm2d(128),
                    nn.LeakyReLU(0.2, inplace=True)]

          model += [nn.Conv2d(128, 256, 4, stride=2, padding=1),
                    nn.InstanceNorm2d(256),
                    nn.LeakyReLU(0.2, inplace=True)]

          model += [nn.Conv2d(256, 512, 4, padding=1),
                    nn.InstanceNorm2d(512),
                    nn.LeakyReLU(0.2, inplace=True)]

          # FCN classification layer
          model += [nn.Conv2d(512, 1, 4, padding=1)]

          self.model = nn.Sequential(*model)

      def forward(self, x):
          x = self.model(x)
          # Average pooling and flatten
          return F.avg_pool2d(x, x.size()[2:]).view(x.size()[0], -1)
  ```

  1. LSGAN does NOT need a sigmoid function at the last layer.
  2. The author applies average pooling over the patch outputs, which gives a single score per image.
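How large is a "patch"? The sketch below computes the receptive field of one output unit of the discriminator above by walking its five `Conv2d` layers backwards (r = r * stride + (kernel_size - stride)), and the size of the score map that `avg_pool2d` averages. The helper names are hypothetical; the layer parameters are read from the code above.

```python
# (kernel_size, stride, padding) of the five Conv2d layers in the Discriminator above
LAYERS = [(4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 1, 1), (4, 1, 1)]


def receptive_field(layers):
    """Side length of the input patch seen by one output unit (walk the layers backwards)."""
    rf = 1
    for k, s, _ in reversed(layers):
        rf = rf * s + (k - s)
    return rf


def output_size(size, layers):
    """Spatial size of the patch score map before average pooling."""
    for k, s, p in layers:
        size = (size + 2 * p - k) // s + 1
    return size


print(receptive_field(LAYERS), output_size(256, LAYERS))  # 70 30
```

So for a 256 x 256 image, each of the 30 x 30 scores judges an overlapping 70 x 70 patch, and the average of these scores is the single output per image. A smaller input simply yields a smaller score map, which is the "flexible input size" advantage.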

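- 53. Part II: Image Translation using GAN (CycleGAN). Losses (train.py)

  ```python
  # Losses
  criterion_GAN = torch.nn.MSELoss()  # we will use the LSGAN loss
  criterion_cycle = torch.nn.L1Loss()
  criterion_identity = torch.nn.L1Loss()
  ```

  Writing G = netG_A2B (A → B), F = netG_B2A (B → A), x for an image from A and y for an image from B, the generator losses for the A → B direction are as follows (the B → A terms are built symmetrically):
  - GAN loss (LSGAN, MSE): $\mathcal{L}_{GAN} = \mathbb{E}_x\big[(D_B(G(x)) - 1)^2\big]$
  - Cycle loss (L1): $\mathcal{L}_{cyc} = \mathbb{E}_x\big[\lVert F(G(x)) - x \rVert_1\big] + \mathbb{E}_y\big[\lVert G(F(y)) - y \rVert_1\big]$
  - Identity loss (optional, L1): $\mathcal{L}_{identity} = \mathbb{E}_y\big[\lVert G(y) - y \rVert_1\big]$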

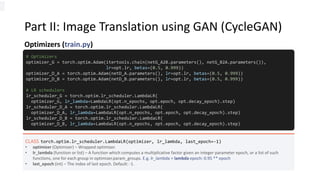

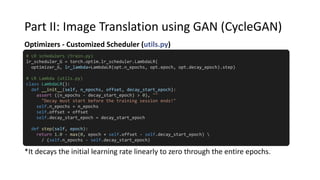

- 54. Part II: Image Translation using GAN (CycleGAN). Optimizers (train.py)

  ```python
  # Optimizers: itertools.chain lets one optimizer update both generators
  optimizer_G = torch.optim.Adam(itertools.chain(netG_A2B.parameters(), netG_B2A.parameters()),
                                 lr=opt.lr, betas=(0.5, 0.999))
  optimizer_D_A = torch.optim.Adam(netD_A.parameters(), lr=opt.lr, betas=(0.5, 0.999))
  optimizer_D_B = torch.optim.Adam(netD_B.parameters(), lr=opt.lr, betas=(0.5, 0.999))

  # LR schedulers
  lr_scheduler_G = torch.optim.lr_scheduler.LambdaLR(
      optimizer_G, lr_lambda=LambdaLR(opt.n_epochs, opt.epoch, opt.decay_epoch).step)
  lr_scheduler_D_A = torch.optim.lr_scheduler.LambdaLR(
      optimizer_D_A, lr_lambda=LambdaLR(opt.n_epochs, opt.epoch, opt.decay_epoch).step)
  lr_scheduler_D_B = torch.optim.lr_scheduler.LambdaLR(
      optimizer_D_B, lr_lambda=LambdaLR(opt.n_epochs, opt.epoch, opt.decay_epoch).step)
  ```

  CLASS torch.optim.lr_scheduler.LambdaLR(optimizer, lr_lambda, last_epoch=-1)
  - optimizer (Optimizer): wrapped optimizer.
  - lr_lambda (function or list): a function which computes a multiplicative factor given an integer parameter epoch, or a list of such functions, one for each group in optimizer.param_groups. E.g. lr_lambda = lambda epoch: 0.95 ** epoch
  - last_epoch (int): the index of the last epoch. Default: -1.

- 55. Part II: Image Translation using GAN (CycleGAN). Optimizers: customized scheduler (utils.py)

  ```python
  # LR schedulers (train.py)
  lr_scheduler_G = torch.optim.lr_scheduler.LambdaLR(
      optimizer_G, lr_lambda=LambdaLR(opt.n_epochs, opt.epoch, opt.decay_epoch).step)

  # LR lambda (utils.py)
  class LambdaLR():
      def __init__(self, n_epochs, offset, decay_start_epoch):
          assert (n_epochs - decay_start_epoch) > 0, "Decay must start before the training session ends!"
          self.n_epochs = n_epochs
          self.offset = offset
          self.decay_start_epoch = decay_start_epoch

      def step(self, epoch):
          return 1.0 - max(0, epoch + self.offset - self.decay_start_epoch) / (self.n_epochs - self.decay_start_epoch)
  ```

  *It keeps the initial learning rate until decay_start_epoch, then decays it linearly to zero by the last epoch (n_epochs).
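To see the resulting schedule, the sketch below evaluates the multiplicative factor returned by the `LambdaLR.step` method shown above (copied verbatim so the block is self-contained) with the train.py defaults: 200 epochs, decay starting at epoch 100, starting epoch 0.

```python
class LambdaLR():  # copied from utils.py (slide above) so the sketch runs on its own
    def __init__(self, n_epochs, offset, decay_start_epoch):
        assert (n_epochs - decay_start_epoch) > 0, "Decay must start before the training session ends!"
        self.n_epochs = n_epochs
        self.offset = offset
        self.decay_start_epoch = decay_start_epoch

    def step(self, epoch):
        return 1.0 - max(0, epoch + self.offset - self.decay_start_epoch) / (self.n_epochs - self.decay_start_epoch)


schedule = LambdaLR(n_epochs=200, offset=0, decay_start_epoch=100)  # train.py defaults
for epoch in (0, 50, 100, 150, 199):
    # torch's LambdaLR multiplies the initial lr (0.0002) by this factor
    print(epoch, schedule.step(epoch))
```

The factor stays at 1.0 up to epoch 100 and then falls linearly (0.5 at epoch 150, about 0.01 at epoch 199). Passing `opt.epoch` as `offset` presumably keeps the schedule aligned when training is resumed: with `--epoch 150`, the scheduler's first call, `step(0)`, already returns 0.5.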

- 56. Part II: Image Translation using GAN (CycleGAN) Replay Buffer (train.py and utils.py): D is updated using a history of generated images, not only the latest ones. While the buffer is NOT full, each current image is inserted into the buffer and also returned. Once the buffer is full: (1) with 50% probability, the buffer returns a randomly chosen previously stored image and stores the current image in its place; (2) otherwise (the other 50%), the buffer returns the current image unchanged. # utils.py class ReplayBuffer(): def __init__(self, max_size=50): assert (max_size > 0), 'Empty buffer or trying to create a black hole. Be careful.' self.max_size = max_size self.data = [] def push_and_pop(self, data): to_return = [] for element in data.data: element = torch.unsqueeze(element, 0) if len(self.data) < self.max_size: self.data.append(element) to_return.append(element) else: if random.uniform(0,1) > 0.5: i = random.randint(0, self.max_size-1) to_return.append(self.data[i].clone()) self.data[i] = element else: to_return.append(element) return Variable(torch.cat(to_return)) # train.py fake_A_buffer = ReplayBuffer() fake_B_buffer = ReplayBuffer()
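Below is a self-contained sketch of the same buffer logic that you can run on dummy tensors. It is an adaptation, not the repository code: `images.detach()` stands in for `data.data`, and `torch.autograd.Variable` is dropped because plain tensors carry autograd state since PyTorch 0.4. The small `max_size=4` and the all-zeros/all-ones batches are illustrative assumptions.

```python
import random

import torch


class ReplayBuffer:
    """Sketch of the 50/50 history buffer used when updating the discriminators."""

    def __init__(self, max_size=50):
        assert max_size > 0, "max_size must be positive"
        self.max_size = max_size
        self.data = []

    def push_and_pop(self, images):
        to_return = []
        for element in images.detach():      # iterate over the batch dimension
            element = element.unsqueeze(0)     # keep a batch dim of 1
            if len(self.data) < self.max_size:
                # Not full yet: store the image and return it unchanged.
                self.data.append(element)
                to_return.append(element)
            elif random.uniform(0, 1) > 0.5:
                # Return a random old image and replace it with the new one.
                i = random.randint(0, self.max_size - 1)
                to_return.append(self.data[i].clone())
                self.data[i] = element
            else:
                # Return the current image; the buffer is left untouched.
                to_return.append(element)
        return torch.cat(to_return)


random.seed(0)
buffer = ReplayBuffer(max_size=4)
first = buffer.push_and_pop(torch.zeros(4, 3, 8, 8))   # fills the buffer with zeros
second = buffer.push_and_pop(torch.ones(4, 3, 8, 8))   # a mix of old zeros and new ones
```

The returned batch always has the same size as the input batch, so it can be fed straight into `netD_A` / `netD_B`.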

- 57. Part II: Image Translation using GAN (CycleGAN) Dataloader (train.py) # Dataset loader (train.py) transforms_ = [ transforms.Resize(int(opt.size*1.12), Image.BICUBIC), transforms.RandomCrop(opt.size), transforms.RandomHorizontalFlip(), transforms.ToTensor(), transforms.Normalize((0.5,0.5,0.5), (0.5,0.5,0.5)) ] dataset = ImageDataset(opt.dataroot, transforms_=transforms_, unaligned=True) dataloader = DataLoader(dataset, batch_size=opt.batchSize, shuffle=True, num_workers=opt.n_cpu)

- 58. Part II: Image Translation using GAN (CycleGAN) Dataloader (datasets.py) - Customized Dataset # Dataset loader (datasets.py) import glob import random import os from torch.utils.data import Dataset from PIL import Image import torchvision.transforms as transforms class ImageDataset(Dataset): def __init__(self, root, transforms_=None, unaligned=False, mode='train'): self.transform = transforms.Compose(transforms_) self.unaligned = unaligned self.files_A = sorted(glob.glob(os.path.join( root, '%s/A' % mode) + '/*.*')) self.files_B = sorted(glob.glob(os.path.join( root, '%s/B' % mode) + '/*.*')) def __getitem__(self, index): item_A = self.transform(Image.open( self.files_A[index % len(self.files_A)])) if self.unaligned: item_B = self.transform(Image.open( self.files_B[random.randint( 0, len(self.files_B) - 1)])) else: item_B = self.transform(Image.open( self.files_B[index % len(self.files_B)])) return {'A': item_A, 'B': item_B} def __len__(self): return max(len(self.files_A), len(self.files_B)) *With unaligned=True, each A image is paired with a randomly chosen B image, so the two domains need no one-to-one correspondence.
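The indexing rules above (`index % len(...)` for wrap-around, `max(...)` for the epoch length, random B for unaligned mode) are easier to see without image I/O. The sketch below uses a hypothetical `PairedIndexDemo` class that returns file names instead of opening images. The file names are made up for illustration.

```python
import random

from torch.utils.data import Dataset


class PairedIndexDemo(Dataset):
    """Hypothetical stand-in for ImageDataset: returns file names instead of images."""

    def __init__(self, files_A, files_B, unaligned=False):
        self.files_A = sorted(files_A)
        self.files_B = sorted(files_B)
        self.unaligned = unaligned

    def __getitem__(self, index):
        item_A = self.files_A[index % len(self.files_A)]
        if self.unaligned:
            # Any B image may be paired with this A image.
            item_B = self.files_B[random.randint(0, len(self.files_B) - 1)]
        else:
            item_B = self.files_B[index % len(self.files_B)]
        return {'A': item_A, 'B': item_B}

    def __len__(self):
        # One epoch covers the larger domain; the smaller one wraps around via %.
        return max(len(self.files_A), len(self.files_B))


horses = ['h0.jpg', 'h1.jpg', 'h2.jpg']
zebras = ['z0.jpg', 'z1.jpg']
aligned = PairedIndexDemo(horses, zebras, unaligned=False)
print(len(aligned), [aligned[i]['B'] for i in range(len(aligned))])
# prints: 3 ['z0.jpg', 'z1.jpg', 'z0.jpg']
```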

- 59. Part II: Image Translation using GAN (CycleGAN) Training (train.py) for epoch in range(opt.epoch, opt.n_epochs): for i, batch in enumerate(dataloader): # Set model input ###### Generators A2B and B2A ###### # Identity loss # GAN loss # Cycle loss ###### Discriminator A ###### # Real loss # Fake loss ###### Discriminator B ###### # Real loss # Fake loss # logger.log # Update learning rate # Save models checkpoints. Figure: the generator objective is GAN loss + cycle loss, plus an identity loss (G_B2A(A) = A).

- 60. Part II: Image Translation using GAN (CycleGAN) Training (train.py) – Generators A2B & B2A ###### Generators A2B and B2A ###### optimizer_G.zero_grad() loss_G = 0.0 ###### 1. Identity loss (L1 Loss) ###### # G_A2B(B) should equal B same_B = netG_A2B(real_B) loss_G += criterion_identity(same_B, real_B) * 5.0 # G_B2A(A) should equal A same_A = netG_B2A(real_A) loss_G += criterion_identity(same_A, real_A) * 5.0 ###### 2. LSGAN loss (MSE Loss) ###### # G_A2B(A) should fool D_B fake_B = netG_A2B(real_A) pred_fake = netD_B(fake_B) loss_G += criterion_GAN(pred_fake, target_real) # G_B2A(B) should fool D_A fake_A = netG_B2A(real_B) pred_fake = netD_A(fake_A) loss_G += criterion_GAN(pred_fake, target_real) ###### 3. Cycle loss (L1 Loss) ###### # G_B2A(G_A2B(A)) should equal A recon_A = netG_B2A(fake_B) loss_G += criterion_cycle(recon_A, real_A) * 10.0 # G_A2B(G_B2A(B)) should equal B recon_B = netG_A2B(fake_A) loss_G += criterion_cycle(recon_B, real_B) * 10.0 ###### Update both Gs ###### loss_G.backward() optimizer_G.step()
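Written out as a single formula, the generator loss accumulated above is the following, with real images $a$ and $b$ from domains A and B, $\operatorname{MSE}$ the mean squared error of `criterion_GAN`, and $\lVert\cdot\rVert_1$ the per-pixel mean absolute error of `criterion_cycle` / `criterion_identity`. The weights 10.0 and 5.0 are the ones in the code.

```latex
\mathcal{L}_G =
  \underbrace{\operatorname{MSE}\big(D_B(G_{A2B}(a)),\,1\big) + \operatorname{MSE}\big(D_A(G_{B2A}(b)),\,1\big)}_{\text{LSGAN}}
  + 10\,\underbrace{\big(\lVert G_{B2A}(G_{A2B}(a)) - a\rVert_1 + \lVert G_{A2B}(G_{B2A}(b)) - b\rVert_1\big)}_{\text{cycle}}
  + 5\,\underbrace{\big(\lVert G_{A2B}(b) - b\rVert_1 + \lVert G_{B2A}(a) - a\rVert_1\big)}_{\text{identity}}
```

All six terms must be summed. If the last cycle term were assigned with `=` instead of `+=`, the identity, GAN, and first cycle terms would be silently discarded before `loss_G.backward()`.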

- 61. Part II: Image Translation using GAN (CycleGAN) Training (train.py) – Discriminator A & B ###### Discriminator A ###### optimizer_D_A.zero_grad() # Real loss (MSE Loss) pred_real = netD_A(real_A) loss_D_real = criterion_GAN(pred_real, target_real) # Fake loss (MSE Loss) fake_A = fake_A_buffer.push_and_pop(fake_A) pred_fake = netD_A(fake_A.detach()) loss_D_fake = criterion_GAN(pred_fake, target_fake) # Total loss loss_D_A = (loss_D_real + loss_D_fake) * 0.5 loss_D_A.backward() optimizer_D_A.step() ###### Discriminator B ###### optimizer_D_B.zero_grad() # Real loss (MSE Loss) pred_real = netD_B(real_B) loss_D_real = criterion_GAN(pred_real, target_real) # Fake loss (MSE Loss) fake_B = fake_B_buffer.push_and_pop(fake_B) pred_fake = netD_B(fake_B.detach()) loss_D_fake = criterion_GAN(pred_fake, target_fake) # Total loss loss_D_B = (loss_D_real + loss_D_fake) * 0.5 loss_D_B.backward() optimizer_D_B.step()
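Both discriminator updates follow the same least-squares pattern: real predictions are pushed toward 1, fake predictions toward 0, and the two MSE terms are averaged. The helper `lsgan_d_loss` below is a hypothetical name for that pattern. It builds targets with `ones_like` / `zeros_like` so they always match the prediction's shape. The CycleGAN paper motivates the 0.5 factor as slowing D down relative to G.

```python
import torch
import torch.nn as nn

criterion_GAN = nn.MSELoss()


def lsgan_d_loss(pred_real, pred_fake):
    """Least-squares discriminator loss: real -> 1, fake -> 0, averaged (the 0.5 factor)."""
    loss_real = criterion_GAN(pred_real, torch.ones_like(pred_real))
    loss_fake = criterion_GAN(pred_fake, torch.zeros_like(pred_fake))
    return (loss_real + loss_fake) * 0.5


# A perfect discriminator scores 0; one that swaps real and fake scores 1.
perfect = lsgan_d_loss(torch.ones(4, 1), torch.zeros(4, 1))
worst = lsgan_d_loss(torch.zeros(4, 1), torch.ones(4, 1))
```

In train.py the fake predictions come from `fake_A.detach()` / `fake_B.detach()`, so this loss updates only the discriminator and never back-propagates into the generators.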

- 62. Part II: Image Translation using GAN (CycleGAN) Update learning rates & save model checkpoints (at the end of each epoch) # Update learning rates lr_scheduler_G.step() lr_scheduler_D_A.step() lr_scheduler_D_B.step() # Save models checkpoints torch.save(netG_A2B.state_dict(), '%s/netG_A2B.pth' % opt.outf) torch.save(netG_B2A.state_dict(), '%s/netG_B2A.pth' % opt.outf) torch.save(netD_A.state_dict(), '%s/netD_A.pth' % opt.outf) torch.save(netD_B.state_dict(), '%s/netD_B.pth' % opt.outf)
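Saving a `state_dict` stores only the parameters, not the architecture. The loading side must therefore rebuild the same network before calling `load_state_dict`. The round trip below is a minimal sketch under stated assumptions: a temporary directory stands in for `opt.outf`, and a single `nn.Conv2d` stands in for the real generator.

```python
import os
import tempfile

import torch
import torch.nn as nn

outf = tempfile.mkdtemp()                  # stands in for opt.outf (assumption)
netG_A2B = nn.Conv2d(3, 3, 3, padding=1)   # tiny stand-in for the real Generator

# Save only the parameters, as train.py does.
path = os.path.join(outf, 'netG_A2B.pth')
torch.save(netG_A2B.state_dict(), path)

# Later (e.g. at test time): rebuild the same architecture, then load the weights.
restored = nn.Conv2d(3, 3, 3, padding=1)
restored.load_state_dict(torch.load(path, map_location='cpu'))
restored.eval()   # switch to inference mode before generating images
```

`map_location='cpu'` lets a checkpoint written on a GPU machine be loaded without CUDA.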

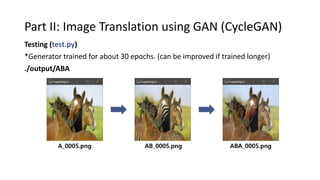

- 63. Part II: Image Translation using GAN (CycleGAN) Testing (test.py) You can run the test code with this command: whshin@ai2:/st1/whshin/workspace/cyclegan$ python test.py --dataroot datasets/horse2zebra --outf my_output --cuda Directory layout: tutorial_gan/ └ cyclegan/ └ output/ netG_A2B.pth, netG_B2A.pth, ... # Pretrained models # Test results are saved under <outf>/ABA and <outf>/BAB └ ABA/ A_0001.png, AB_0001.png, ABA_0001.png, ... └ BAB/ B_0001.png, BA_0001.png, BAB_0001.png, ... To use the provided model parameters (trained for about 30 epochs), pass --outf output; the results are then saved under the same path (output/ABA and output/BAB).

- 64. Part II: Image Translation using GAN (CycleGAN) Testing (test.py) Sample results in ./output/ABA: A_0005.png (input A), AB_0005.png (translated to B), ABA_0005.png (reconstructed A). *The generator was trained for only about 30 epochs; results can be improved by training longer.

- 65. Part II: Image Translation using GAN (CycleGAN) Results (Images) A → G_AB(A) B → G_BA(B)

- 66. Part II: Image Translation using GAN (CycleGAN) Results (Plots): loss curves for the generator (G_Identity, G_GAN, G_Cycle) and the discriminator.

- 67. Part II: Image Translation using GAN (CycleGAN) Results (Visdom)

- 68. Any questions? Next lecture – Meta learning

![Part I: Image Generation using GAN (DCGAN)

Data Loading and Processing

Next, we prepare the data.

if opt.dataset in ['imagenet', 'folder', 'lfw']:

dataset = ...

nc = 3

elif opt.dataset == 'lsun':

dataset = ...

nc = 3

elif opt.dataset == 'cifar10':

dataset = ...

nc = 3

elif opt.dataset == 'mnist':

dataset = ...

nc = 1

elif opt.dataset == 'fake':

dataset = ...

nc = 3

Each dataset is handled differently according to its name (nc is the number of image channels).

So the next question is, “how can we handle those data more efficiently?”](https://image.slidesharecdn.com/tutorialimagegan-190725103615/85/Tutorial-Image-Generation-and-Image-to-Image-Translation-using-GAN-16-320.jpg)

![Part I: Image Generation using GAN (DCGAN)

Data Loading and Processing: Dataset

torch.utils.data.Dataset is an abstract class representing a dataset.

Your custom dataset should inherit Dataset and override the following methods:

• __len__ so that len(dataset) returns the size of the dataset.

• __getitem__ to support indexing, so that dataset[i] returns the i-th sample

• We can handle our data more easily by using torch.utils.data.Dataset.

• We can make our custom dataset by subclassing this abstract class:

from torch.utils.data import Dataset

class MyDataset(Dataset):

...

dataset = MyDataset(...)

• Or you can simply use the torchvision package, which provides many common datasets:

import torchvision.datasets as datasets

# All datasets in this package are subclasses of torch.utils.data.Dataset

dataset = datasets.MNIST(root=opt.dataroot)](https://image.slidesharecdn.com/tutorialimagegan-190725103615/85/Tutorial-Image-Generation-and-Image-to-Image-Translation-using-GAN-18-320.jpg)
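A custom dataset only has to implement the two methods listed above. The toy `SquaresDataset` below is hypothetical, since its samples are just `(i, i**2)` pairs, but it shows the full contract, including raising `IndexError` for out-of-range indices.

```python
import torch
from torch.utils.data import Dataset


class SquaresDataset(Dataset):
    """Toy dataset (hypothetical): sample i is the pair (i, i**2) as float tensors."""

    def __init__(self, n):
        self.n = n

    def __len__(self):
        # len(dataset) -> number of samples
        return self.n

    def __getitem__(self, i):
        # dataset[i] -> i-th sample
        if not 0 <= i < self.n:
            raise IndexError(i)
        x = torch.tensor([float(i)])
        return x, x ** 2


dataset = SquaresDataset(5)
x, y = dataset[3]
```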

![Part I: Image Generation using GAN (DCGAN)

Data Loading and Processing: Transforms

• It is recommended to use a callable transform class to preprocess the data.

# Make a custom class (it only needs to be callable)

class Transform(object):

def __init__(self, *args, **kwargs):

...

def __call__(self, sample):

...

transform = Transform(*init_args_list)

transformed_sample = transform(sample)

or

# Use a built-in transform that operates on PIL images

import torchvision.transforms as transforms

transform = transforms.CenterCrop(*args)

# transform = transforms.ColorJitter(*args)

# transform = transforms.Grayscale(*args)

transformed_sample = transform(sample)

• These callable transforms can be chained into a single transform as follows:

import torchvision.transforms as transforms

composed_transform = transforms.Compose(

[Transform_1(*init_arg_1), Transform_2(*init_arg_2), ... , Transform_n(*init_arg_n)])

transformed_sample = composed_transform(sample)

• Finally, the (composed) transform can be passed as an argument to a dataset:

dataset = datasets.MNIST(root=opt.dataroot, transform=composed_transform)](https://image.slidesharecdn.com/tutorialimagegan-190725103615/85/Tutorial-Image-Generation-and-Image-to-Image-Translation-using-GAN-19-320.jpg)

![Part I: Image Generation using GAN (DCGAN)

Data Loading and Processing: DataLoader

• Now you can iterate through the processed dataset with a simple for loop.

dataset = datasets.MNIST(root=opt.dataroot, transform=composed_transform)

for i in range(len(dataset)):

sample = dataset[i]

do_something(sample)

...

• A more sophisticated way is to use torch.utils.data.DataLoader,

an iterable that handles batching, shuffling, and multiprocess data loading.

from torch.utils.data import DataLoader

dataset = datasets.MNIST(root=opt.dataroot, transform=composed_transform)

dataloader = DataLoader(dataset, batch_size=opt.batchSize, shuffle=True, num_workers=int(opt.workers))

for i, batch in enumerate(dataloader):

do_something(batch)

...](https://image.slidesharecdn.com/tutorialimagegan-190725103615/85/Tutorial-Image-Generation-and-Image-to-Image-Translation-using-GAN-20-320.jpg)
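To see what `DataLoader` does with `batch_size` and `shuffle`, the sketch below uses an in-memory `TensorDataset` of random "images" as a stand-in for MNIST. It sets `num_workers=0`, so loading happens in the main process; larger values load batches in worker subprocesses. With 10 samples and `batch_size=4`, the last batch is smaller because `drop_last` defaults to `False`.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# 10 random 1x8x8 "images" with integer labels (stand-in for MNIST).
images = torch.rand(10, 1, 8, 8)
labels = torch.arange(10)
dataset = TensorDataset(images, labels)

dataloader = DataLoader(dataset, batch_size=4, shuffle=True, num_workers=0)

batch_sizes = []
seen = []
for i, (x, y) in enumerate(dataloader):
    batch_sizes.append(x.shape[0])   # 4, 4, then the remaining 2
    seen.extend(y.tolist())          # shuffled order, each sample exactly once
```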

![Part II: Image Translation using GAN (CycleGAN)

Networks (model.py) - Generator

class Generator(nn.Module):

def __init__(self, input_nc, output_nc, n_residual_blocks=9):

super(Generator, self).__init__()

# Initial convolution block

model = [ nn.ReflectionPad2d(3),

nn.Conv2d(input_nc, 64, 7),

nn.InstanceNorm2d(64),

nn.ReLU(inplace=True) ]

# Downsampling

in_features = 64

out_features = in_features*2

for _ in range(2):

model += [ nn.Conv2d(in_features, out_features,

kernel_size=3, stride=2,

padding=1),

nn.InstanceNorm2d(out_features),

nn.ReLU(inplace=True) ]

in_features = out_features

out_features = in_features*2

# Residual blocks

for _ in range(n_residual_blocks):

model += [ResidualBlock(in_features)]

# Upsampling

out_features = in_features//2

for _ in range(2):

model += [ nn.ConvTranspose2d(in_features, out_features,

kernel_size=3, stride=2,

padding=1, output_padding=1),

nn.InstanceNorm2d(out_features),

nn.ReLU(inplace=True) ]

in_features = out_features

out_features = in_features//2

# Output layer

model += [ nn.ReflectionPad2d(3),

nn.Conv2d(64, output_nc, 7),

nn.Tanh() ]

self.model = nn.Sequential(*model)

def forward(self, x):

return self.model(x)](https://image.slidesharecdn.com/tutorialimagegan-190725103615/85/Tutorial-Image-Generation-and-Image-to-Image-Translation-using-GAN-48-320.jpg)
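A quick way to check the generator's design is to confirm that it is shape-preserving. The two stride-2 convolutions shrink H x W by 4, the two transposed convolutions (with `output_padding=1`) restore it, and `Tanh` bounds the output to [-1, 1]. The sketch re-creates the same layer sequence compactly. It uses only 2 residual blocks (an assumption to keep the check fast) and a 64x64 random input.

```python
import torch
import torch.nn as nn


class ResidualBlock(nn.Module):
    def __init__(self, in_features):
        super(ResidualBlock, self).__init__()
        self.conv_block = nn.Sequential(
            nn.ReflectionPad2d(1), nn.Conv2d(in_features, in_features, 3),
            nn.InstanceNorm2d(in_features), nn.ReLU(inplace=True),
            nn.ReflectionPad2d(1), nn.Conv2d(in_features, in_features, 3),
            nn.InstanceNorm2d(in_features))

    def forward(self, x):
        return x + self.conv_block(x)


class Generator(nn.Module):
    """Same layer sequence as model.py, written compactly."""

    def __init__(self, input_nc, output_nc, n_residual_blocks=9):
        super(Generator, self).__init__()
        # 7x7 conv; ReflectionPad2d(3) keeps the spatial size.
        model = [nn.ReflectionPad2d(3), nn.Conv2d(input_nc, 64, 7),
                 nn.InstanceNorm2d(64), nn.ReLU(inplace=True)]
        in_f = 64
        # Downsampling: H x W -> H/4 x W/4, channels 64 -> 128 -> 256.
        for _ in range(2):
            model += [nn.Conv2d(in_f, in_f * 2, 3, stride=2, padding=1),
                      nn.InstanceNorm2d(in_f * 2), nn.ReLU(inplace=True)]
            in_f *= 2
        model += [ResidualBlock(in_f) for _ in range(n_residual_blocks)]
        # Upsampling: back to H x W, channels 256 -> 128 -> 64.
        for _ in range(2):
            model += [nn.ConvTranspose2d(in_f, in_f // 2, 3, stride=2,
                                         padding=1, output_padding=1),
                      nn.InstanceNorm2d(in_f // 2), nn.ReLU(inplace=True)]
            in_f //= 2
        model += [nn.ReflectionPad2d(3), nn.Conv2d(64, output_nc, 7), nn.Tanh()]
        self.model = nn.Sequential(*model)

    def forward(self, x):
        return self.model(x)


netG = Generator(3, 3, n_residual_blocks=2)   # 2 blocks only to keep the demo fast
y = netG(torch.randn(1, 3, 64, 64))
```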

![Part II: Image Translation using GAN (CycleGAN)

Networks (model.py) - Generator (Residual Block)

class ResidualBlock(nn.Module):

def __init__(self, in_features):

super(ResidualBlock, self).__init__()

conv_block = [ nn.ReflectionPad2d(1),

nn.Conv2d(in_features, in_features,

kernel_size=3),

nn.InstanceNorm2d(in_features),

nn.ReLU(inplace=True),

nn.ReflectionPad2d(1),

nn.Conv2d(in_features, in_features,

kernel_size=3),

nn.InstanceNorm2d(in_features) ]

self.conv_block = nn.Sequential(*conv_block)

def forward(self, x):

return x + self.conv_block(x)](https://image.slidesharecdn.com/tutorialimagegan-190725103615/85/Tutorial-Image-Generation-and-Image-to-Image-Translation-using-GAN-49-320.jpg)
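The skip connection means each block only has to learn a correction on top of its input. The sketch makes this visible. If the last convolution is zeroed, its output is all zeros, `InstanceNorm2d` maps zeros to zeros, and the block reduces exactly to the identity. Zeroing the weights is purely a demonstration device, not something done during training.

```python
import torch
import torch.nn as nn


class ResidualBlock(nn.Module):
    def __init__(self, in_features):
        super(ResidualBlock, self).__init__()
        self.conv_block = nn.Sequential(
            nn.ReflectionPad2d(1), nn.Conv2d(in_features, in_features, 3),
            nn.InstanceNorm2d(in_features), nn.ReLU(inplace=True),
            nn.ReflectionPad2d(1), nn.Conv2d(in_features, in_features, 3),
            nn.InstanceNorm2d(in_features))

    def forward(self, x):
        # Skip connection: output = input + learned residual.
        return x + self.conv_block(x)


x = torch.randn(2, 8, 16, 16)

# A freshly initialized block preserves the shape (padding 1 + 3x3 conv).
block = ResidualBlock(8)
y_random = block(x)

# Zero the second convolution (index 5 in conv_block): the residual becomes 0.
last_conv = block.conv_block[5]
with torch.no_grad():
    last_conv.weight.zero_()
    last_conv.bias.zero_()
y_identity = block(x)
```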

![Part II: Image Translation using GAN (CycleGAN)

Networks (model.py) - Discriminator (from PatchGAN)

class Discriminator(nn.Module):

def __init__(self, input_nc):

super(Discriminator, self).__init__()

# A bunch of convolutions one after another

model = [ nn.Conv2d(input_nc, 64, 4,

stride=2, padding=1),

nn.LeakyReLU(0.2, inplace=True) ]

model += [ nn.Conv2d(64, 128, 4,

stride=2, padding=1),

nn.InstanceNorm2d(128),

nn.LeakyReLU(0.2, inplace=True) ]

model += [ nn.Conv2d(128, 256, 4, stride=2, padding=1),

nn.InstanceNorm2d(256),

nn.LeakyReLU(0.2, inplace=True) ]

model += [ nn.Conv2d(256, 512, 4, padding=1),

nn.InstanceNorm2d(512),

nn.LeakyReLU(0.2, inplace=True) ]

# FCN classification layer

model += [nn.Conv2d(512, 1, 4, padding=1)]

self.model = nn.Sequential(*model)

def forward(self, x):

x = self.model(x)

# Average pooling and flatten

return F.avg_pool2d(x, x.size()[2:]).view(x.size()[0], -1)

(1) LSGAN does NOT need a sigmoid function at the last layer: its MSE loss is applied directly to the raw scores.

(2) The author applies average pooling over the map of patch scores, resulting in a single score for each instance.](https://image.slidesharecdn.com/tutorialimagegan-190725103615/85/Tutorial-Image-Generation-and-Image-to-Image-Translation-using-GAN-52-320.jpg)
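Before the average pooling, the discriminator outputs a map of scores, one per overlapping input patch. This is the PatchGAN idea. The sketch re-creates the same layers, inspects that map for a 128x128 input (an illustrative size), and checks that `forward` equals the spatial mean of the map. The helper `conv_out` is a hypothetical name for the standard Conv2d output-size formula.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def conv_out(size, kernel, stride, padding):
    """Spatial output size of a Conv2d with dilation 1."""
    return (size + 2 * padding - kernel) // stride + 1


class Discriminator(nn.Module):
    """Same layer sequence as model.py, written compactly."""

    def __init__(self, input_nc):
        super(Discriminator, self).__init__()
        self.model = nn.Sequential(
            nn.Conv2d(input_nc, 64, 4, stride=2, padding=1), nn.LeakyReLU(0.2, inplace=True),
            nn.Conv2d(64, 128, 4, stride=2, padding=1), nn.InstanceNorm2d(128),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Conv2d(128, 256, 4, stride=2, padding=1), nn.InstanceNorm2d(256),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Conv2d(256, 512, 4, padding=1), nn.InstanceNorm2d(512),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Conv2d(512, 1, 4, padding=1))   # no sigmoid: raw scores for LSGAN

    def patch_scores(self, x):
        # One score per overlapping patch of the input.
        return self.model(x)

    def forward(self, x):
        x = self.model(x)
        # Average the patch scores into one scalar per image.
        return F.avg_pool2d(x, x.size()[2:]).view(x.size()[0], -1)


netD = Discriminator(3)
img = torch.randn(2, 3, 128, 128)
patch_map = netD.patch_scores(img)
score = netD(img)

# Expected side length of the patch map, layer by layer (kernel, stride).
side = 128
for k, s in [(4, 2), (4, 2), (4, 2), (4, 1), (4, 1)]:
    side = conv_out(side, k, s, 1)
```

With the same arithmetic, a 256x256 input gives a 30x30 map of patch scores before pooling.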
