Unified Data Access with Gimel

- 1. Unified Data Access with Gimel: Vladimir Bacvanski, Anisha Nainani, Deepak Chandramouli

- 2. About us
  - Vladimir Bacvanski (Twitter: @OnSoftware)
    - Principal Architect, Strategic Architecture at PayPal
    - In a previous life: CTO of a development and consulting firm
    - PhD in Computer Science from RWTH Aachen, Germany
    - O’Reilly author: courses on Big Data, Kafka
  - Deepak Chandramouli (LinkedIn: @deepakmc)
    - MT2 Software Engineer, Data Platform Services at PayPal
    - Data enthusiast
    - Tech lead
    - Gimel (Big Data Framework for Apache Spark)
    - Unified Data Catalog – PayPal’s Enterprise Data Catalog
  - Anisha Nainani (LinkedIn: @anishanainani)
    - Senior Software Engineer
    - Big Data
    - Data Platform Services

- 3. AGENDA ❑ PayPal - Introduction ❑ Why Gimel ❑ Gimel Deep Dive ❑ What’s next? ❑ Questions

- 4. PayPal – Key Metrics and Analytics Ecosystem

- 5. PayPal | Q3-2020 | Key Metrics (source: https://investor.pypl.com/home/default.aspx)

- 6. PayPal | Data Growth
  - 160+ PB of data
  - 200,000+ YARN jobs/day
  - One of the largest Aerospike, Teradata, Hortonworks and Oracle installations
  - Compute supported: Spark, Hive, MR, BigQuery
  - 20+ on-premise clusters
  - GPU co-located with Hadoop
  - Cloud Migration Adjacencies

- 7. PayPal | Data Landscape
  - Developer, Data scientist, Analyst, Operator
  - User Experience and Access: Gimel SDK, Notebooks, UDC, Data API, R Studio, BI tools
  - Compute Framework and APIs
  - Gimel Data Platform
  - Logging, Monitoring, Alerting, Security
  - Application Lifecycle Management
  - Infrastructure services leveraged for elasticity and redundancy: Multi-DC, Public cloud, Predictive resource allocation

- 8. Why Gimel?

- 9. Challenges | Data Access Code | Cumbersome & Fragile (code on the slide is for illustration only, not meant to be read)
  - Spark Read From HBase
  - Spark Read From Elastic Search
  - Spark Read From Aerospike
  - Spark Read From Druid

- 10. Challenges | Data Processing Can Be Multi-Mode & Polyglot (Batch)

- 11. Challenges with Data App Lifecycle: each of the following events triggers the full Learn → Code → Optimize → Build → Deploy → Run cycle again
  - Onboarding Big Data Apps
  - Compute Version Upgraded
  - Storage API Changed
  - Storage Connector Upgraded
  - Storage Hosts Migrated
  - Storage Changed
  - …

- 12. Gimel Simplifies Data Application Lifecycle (Data Application Lifecycle - With Data API)
  - Onboarding Big Data Apps: Learn → Code → Optimize → Build → Deploy → Run
  - Compute Version Upgraded: Run
  - Storage API Changed: Run
  - Storage Connector Upgraded: Run
  - Storage Hosts Migrated: Run
  - Storage Changed: Run
  - …: Run

- 13. Challenges | Instrumentation Required at Multiple Touchpoints
  - Touchpoints: Data User / App, Data Stores, Catalog / Classification, Visibility, Security, Platform Centric Interceptors
  - Sample data shown on the slide:

    | id | name | address |
    |----|------|---------|
    | 1  | XXXX | XXXX    |
    | 2  | XXXX | XXXX    |

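- 14. Putting it all together…
  - Data User and Data Apps (App, App, App, App, …) reach the Data Stores through the Data / SQL API
  - Along that path: Catalog / Classification, Security, Alert, Platform Centric Interceptors
  - Sample data shown on the slide:

    | id | First_name | Last_name | address |
    |----|------------|-----------|---------|
    | 1  | XXXX       | XXXX      | XXXX    |
    | 2  | XXXX       | XXXX      | XXXX    |
    | 3  | XXXX       | XXXX      | XXXX    |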
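
One way to read the masked table above is that a platform-side interceptor applies the catalog's classification before rows reach the user. The following is a minimal sketch under that assumption; `MaskingInterceptorSketch`, `mask`, and the `"XXXX"` placeholder are hypothetical names chosen for illustration and are not part of Gimel.

```scala
// Hypothetical sketch: classification-driven masking, not Gimel's actual interceptor API.
object MaskingInterceptorSketch {
  // A row is modelled as column name -> value to keep the example dependency-free.
  type Row = Map[String, String]

  // Replace the value of every column that the catalog classifies as sensitive.
  def mask(rows: Seq[Row], sensitiveColumns: Set[String], placeholder: String = "XXXX"): Seq[Row] =
    rows.map(_.map { case (column, value) =>
      column -> (if (sensitiveColumns.contains(column)) placeholder else value)
    })
}
```

Because the masking lives in one place rather than in each application, a change in classification would not require touching application code.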

- 15. Query Routing – Concept
  - A user or app (Developer / Analyst / Data Scientist, working from Notebooks or a Spark / Gimel application) needs transaction data that lives in three places:
    - NRT (Streaming)
    - 7 days (Analytics Cache)
    - 2 Years (cold storage)
  - Flow: 1. Submits query to GSQL Kernel; 2. Submits query to GTS
  - Gimel looks at the logical dataset in UDC, interprets the filter criteria, and routes the query to the appropriate storage:
    - Where txn_dt = last_30_mins
    - Where txn_dt = last_7_days (fast access via cache)
    - Where txn_dt = last_2_years

- 16. Query Routing – Concept
  - Alluxio Caching – fast access to remote data
  - Enterprise Catalog Service – provides config for various stores
  - Logical Dataset in catalog – abstracts multiple target stores underneath
  - Query pattern (filter) based routing – provides the ability to serve data dynamically
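
The filter-based routing described on these two slides can be sketched as a lookup over storage tiers. This is only an illustration: `QueryRoutingSketch`, `StoreTier`, and `route` are hypothetical names, and the retention bounds (30 minutes, 7 days, 2 years) are assumptions taken from the example filters on the slide, not from Gimel's implementation.

```scala
import java.time.Duration

// Hypothetical model of filter-based routing; none of these names come from Gimel.
object QueryRoutingSketch {
  // A physical store behind one logical dataset, with the oldest data age it can serve.
  final case class StoreTier(name: String, maxLookback: Duration)

  // Tiers ordered from freshest to deepest, mirroring the slide:
  // NRT stream, 7-day analytics cache, 2-year cold storage.
  val tiers: List[StoreTier] = List(
    StoreTier("streaming", Duration.ofMinutes(30)),
    StoreTier("analytics_cache", Duration.ofDays(7)),
    StoreTier("cold_storage", Duration.ofDays(730))
  )

  // Pick the first tier whose retention covers the requested lookback window.
  def route(lookback: Duration): Option[StoreTier] =
    tiers.find(tier => lookback.compareTo(tier.maxLookback) <= 0)
}
```

For example, `QueryRoutingSketch.route(Duration.ofDays(7))` selects the analytics cache, while a lookback longer than every tier's retention yields `None`, which a caller would have to handle explicitly.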

- 17. Code

- 19. Gimel – Deep Dive: Peek into implementation

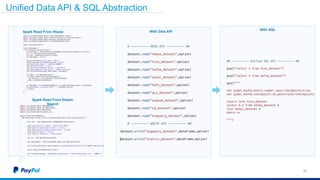

- 20. Unified Data API & SQL Abstraction: Spark Read From HBase • Spark Read From Elastic Search • With Data API • With SQL

- 21. Unified Data API & Unified Config: Unified Data API • Unified Connector Config

- 22. Anatomy of Catalog Provider
  - Every provider is selected with `Set gimel.catalog.provider=<PROVIDER>` and resolved through `CatalogProvider.getDataSetProperties("dataSetName")`.
  - `gimel.catalog.provider=UDC`: properties come from Metadata Services.
  - `gimel.catalog.provider=USER`: properties are supplied by the user, either in SQL:

        sql> set dataSetProperties={
          "key.deserializer":"org.apache.kafka.common.serialization.StringDeserializer",
          "auto.offset.reset":"earliest",
          "gimel.kafka.checkpoint.zookeeper.host":"zookeeper:2181",
          "gimel.storage.type":"kafka",
          "gimel.kafka.whitelist.topics":"kafka_topic",
          "datasetName":"test_table1",
          "value.deserializer":"org.apache.kafka.common.serialization.ByteArrayDeserializer",
          "value.serializer":"org.apache.kafka.common.serialization.ByteArraySerializer",
          "gimel.kafka.checkpoint.zookeeper.path":"/pcatalog/kafka_consumer/checkpoint",
          "gimel.kafka.avro.schema.source":"CSR",
          "gimel.kafka.zookeeper.connection.timeout.ms":"10000",
          "gimel.kafka.avro.schema.source.url":"http://schema_registry:8081",
          "key.serializer":"org.apache.kafka.common.serialization.StringSerializer",
          "gimel.kafka.avro.schema.source.wrapper.key":"schema_registry_key",
          "gimel.kafka.bootstrap.servers":"localhost:9092"
        }
        sql> Select * from pcatalog.test_table1

    or in Scala:

        spark.sql("set gimel.catalog.provider=USER")
        val dataSetOptions = DataSetProperties(
          "KAFKA",
          Array(Field("payload", "string", true)),
          Array(),
          Map(
            "datasetName" -> "test_table1",
            "auto.offset.reset" -> "earliest",
            "gimel.kafka.bootstrap.servers" -> "localhost:9092",
            "gimel.kafka.avro.schema.source" -> "CSR",
            "gimel.kafka.avro.schema.source.url" -> "http://schema_registry:8081",
            "gimel.kafka.avro.schema.source.wrapper.key" -> "schema_registry_key",
            "gimel.kafka.checkpoint.zookeeper.host" -> "zookeeper:2181",
            "gimel.kafka.checkpoint.zookeeper.path" -> "/pcatalog/kafka_consumer/checkpoint",
            "gimel.kafka.whitelist.topics" -> "kafka_topic",
            "gimel.kafka.zookeeper.connection.timeout.ms" -> "10000",
            "gimel.storage.type" -> "kafka",
            "key.serializer" -> "org.apache.kafka.common.serialization.StringSerializer",
            "value.serializer" -> "org.apache.kafka.common.serialization.ByteArraySerializer"
          )
        )
        dataSet.read("test_table1", Map("dataSetProperties" -> dataSetOptions))

  - `gimel.catalog.provider=HIVE`: properties are stored as Hive table properties:

        CREATE EXTERNAL TABLE `pcatalog.test_table1` (payload string)
        LOCATION 'hdfs://tmp/'
        TBLPROPERTIES (
          "datasetName" = "dummy",
          "auto.offset.reset" = "earliest",
          "gimel.kafka.bootstrap.servers" = "localhost:9092",
          "gimel.kafka.avro.schema.source" = "CSR",
          "gimel.kafka.avro.schema.source.url" = "http://schema_registry:8081",
          "gimel.kafka.avro.schema.source.wrapper.key" = "schema_registry_key",
          "gimel.kafka.checkpoint.zookeeper.host" = "zookeeper:2181",
          "gimel.kafka.checkpoint.zookeeper.path" = "/pcatalog/kafka_consumer/checkpoint",
          "gimel.kafka.whitelist.topics" = "kafka_topic",
          "gimel.kafka.zookeeper.connection.timeout.ms" = "10000",
          "gimel.storage.type" = "kafka",
          "key.serializer" = "org.apache.kafka.common.serialization.StringSerializer",
          "value.serializer" = "org.apache.kafka.common.serialization.ByteArraySerializer"
        );

        Spark-sql> Select * from pcatalog.test_table1
        Scala> dataSet.read("test_table1", Map("dataSetProperties" -> dataSetOptions))

  - `gimel.catalog.provider=YOUR_CATALOG`: bring your own catalog by implementing the lookup:

        CatalogProvider.getDataSetProperties("dataSetName") {
          // Implement this !
        }
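
The "implement this" extension point for a custom catalog can be pictured as a small interface keyed by provider name. The sketch below is an assumption about the shape of such a plug-in, not Gimel's real class hierarchy: the trait `CatalogProvider` here, `InMemoryCatalog`, `providerFor`, and `registry` are all hypothetical, and only the property keys are taken from the slide.

```scala
// Hypothetical sketch: trait and class names are illustrative, not Gimel's actual API.
object CatalogProviderSketch {
  trait CatalogProvider {
    // Return the connector properties for a logical dataset, if the catalog knows it.
    def getDataSetProperties(dataSetName: String): Option[Map[String, String]]
  }

  // A catalog backed by an in-memory map, standing in for a real metadata service.
  final class InMemoryCatalog(entries: Map[String, Map[String, String]]) extends CatalogProvider {
    def getDataSetProperties(dataSetName: String): Option[Map[String, String]] =
      entries.get(dataSetName)
  }

  // Select a provider by the value given to gimel.catalog.provider (case-insensitive).
  def providerFor(name: String, providers: Map[String, CatalogProvider]): Either[String, CatalogProvider] =
    providers.get(name.toUpperCase).toRight(s"Unknown catalog provider: $name")

  // Property keys copied from the slide; the values are the slide's sample values.
  val kafkaProps: Map[String, String] = Map(
    "gimel.storage.type" -> "kafka",
    "gimel.kafka.bootstrap.servers" -> "localhost:9092",
    "gimel.kafka.whitelist.topics" -> "kafka_topic"
  )

  val registry: Map[String, CatalogProvider] = Map(
    "YOUR_CATALOG" -> new InMemoryCatalog(Map("test_table1" -> kafkaProps))
  )
}
```

Returning `Option` and `Either` rather than throwing is a design choice of this sketch: it makes an unknown provider or dataset an ordinary value the caller must handle.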

- 23. Anatomy of API
  - User-facing calls:

        dataSet.read("dataSetName", options)
        dataSet.write(dataToWrite, "dataSetName", options)
        dataStream.read("dataSetName", options)

  - `CatalogProvider.getDataSetProperties("dataSetName")` fetches the dataset's properties from Metadata Services.
  - `gimel.dataset.factory` { KafkaDataSet, ElasticSearchDataSet, DruidDataSet, HiveDataSet, AerospikeDataSet, HbaseDataSet, CassandraDataSet, JDBCDataSet }, e.g. `val storageDataSet = getFromFactory(type="Hive")`
  - `gimel.datastream.factory` { KafkaDataStream }, e.g. `val storageDataStream = getFromStreamFactory(type="kafka")`
  - The call is then dispatched to the store-specific implementation:

        kafkaDataSet.read("dataSetName", options)
        hiveDataSet.write(dataToWrite, "dataSetName", options)
        storageDataStream.read("dataSetName", options)

  - Core Connector Implementation (example: Kafka): a combination of open source connectors (such as DataStax / SHC / ES-Spark) and in-house implementations.
  - Gimel SQL example:

        %%gimel
        -- Establish 10 concurrent connections per Topic-Partition
        set gimel.kafka.throttle.batch.parallelsPerPartition=10;
        -- Fetch at max - 10 M messages from each partition
        set gimel.kafka.throttle.batch.maxRecordsPerPartition=10000000;

        insert into pcatalog.HIVE_dataset
        partition(yyyy,mm,dd,hh,mi)
        select kafka_ds.*, gimel_load_id
          ,substr(commit_timestamp,1,4) as yyyy
          ,substr(commit_timestamp,6,2) as mm
          ,substr(commit_timestamp,9,2) as dd
          ,substr(commit_timestamp,12,2) as hh
        from pcatalog.KAFKA_dataset kafka_ds
        join default.geo_lkp lkp
          on kafka_ds.zip = lkp.zip
        where lkp.region = 'MIDWEST'

  - How the SQL above is resolved internally:

        val dataSet: gimel.DataSet = DataSet(sparkSession)
        val df1 = dataSet.read("pcatalog.KAFKA_dataset", options)
        df1.createGlobalTempView("tmp_abc123")
        val resolvedSelectSQL = selectSQL.replace("pcatalog.KAFKA_dataset", "tmp_abc123")
        val readDf: DataFrame = sparkSession.sql(resolvedSelectSQL)
        dataSet.write("pcatalog.HIVE_dataset", readDf, options)
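
The two mechanisms on this slide, choosing a connector from `gimel.storage.type` and rewriting `pcatalog.*` references to temporary views, can be sketched without Spark. Everything below is a simplified assumption for illustration: `SqlResolutionSketch`, `Connector`, `connectorFor`, `referencedDatasets`, and `resolve` are hypothetical names, and a regular expression stands in for whatever SQL parsing Gimel actually performs.

```scala
import scala.util.matching.Regex

// Hypothetical sketch of the two steps on the slide; names are illustrative, not Gimel's API.
object SqlResolutionSketch {
  // Step 1: choose a connector from the catalog's gimel.storage.type property.
  sealed trait Connector
  case object KafkaConnector extends Connector
  case object HiveConnector extends Connector

  def connectorFor(props: Map[String, String]): Option[Connector] =
    props.get("gimel.storage.type").map(_.toLowerCase).collect {
      case "kafka" => KafkaConnector
      case "hive"  => HiveConnector
    }

  // Step 2: find pcatalog.<name> references and swap each one for a temp view name.
  private val datasetRef: Regex = """pcatalog\.\w+""".r

  def referencedDatasets(sql: String): List[String] =
    datasetRef.findAllIn(sql).toList.distinct

  // References without a registered view are left untouched.
  def resolve(sql: String, views: Map[String, String]): String =
    datasetRef.replaceAllIn(sql, m => Regex.quoteReplacement(views.getOrElse(m.matched, m.matched)))
}
```

Matching whole identifiers with `\w+` avoids a pitfall of plain string replacement, where a dataset name that is a prefix of another (say `pcatalog.orders` and `pcatalog.orders_v2`) would be rewritten incorrectly.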

- 24. Next Steps

- 25. What’s Next?
  - Expand Catalog Provider: Google Data Catalog
  - Cloud Support: BigQuery, PubSub, GCS, AWS Redshift
  - Gimel SQL: Expand to Cloud Stores
  - Query / Access Optimization: Pre-empt runaway queries
  - Graph Support: Neo4j
  - ML/NLP Support: ML-Lib, Spark-NLP

- 27. Thank You!

- 28. Appendix

- 29. Gimel Thrift Server @ PayPal

- 30. What is GTS?
  - Concepts
    - HiveServer2: a service that allows a remote client to submit requests to Hive using a variety of programming languages (C++, Java, Python) and retrieve results. Built on Apache Thrift.
    - Spark Thrift Server: similar to HiveServer2, but executes in the Spark engine rather than in Hive (MR / Tez).
  - Gimel Thrift Server = Spark Thrift Server + Gimel + PayPal’s Unified Data Catalog + Security & other PayPal-specific features

- 31. Why GTS?
  - A Developer / Analyst / Data Scientist needs to read data from Hive through SQL (from PayPal Notebooks, a CLI host, or an app):
    1. Get a Spark Session
    2. Starts Spark Session on cluster
    3. Spark session started
    4. Submits the query `Select * from pymtdba.wtransaction_p2`
    5. Reads from Store
  - Depending upon the cluster capacity and traffic, the user has to wait for the session.

- 32. How does GTS Work?
  - A Developer / Analyst / Data Scientist needs to read data from Hive: `Select * from pymtdba.wtransaction_p2`
    1. PayPal Notebooks: submits query to GSQL Kernel
    2. Submits query to GTS (Gimel Thrift Server)
    3. Read from Store
  - Apps connect via Java JDBC / Python

- 33. Challenges in platform management

- 34. Challenges | Audit & Monitoring | Multifaceted
  - The user faces separate audit sources per store: DBQLogs, Audit Table, Cloud Audit Logs, PubSub, ***
  - Lack of a unified view of data processed on Spark

- 35. Platform Management Complexities
  - Store-specific interceptors: PubSub, Store
  - Store Operators, App Developers, Apps
  - Instrumentation by App Developer

- 36. GTS Key Features
  - Out-of-box Auditing: Logging, Monitoring, Dashboards; Alerting (beta/internal)
  - Security: Apache Ranger, Teradata Proxy User
  - Part of Ecosystem: Notebooks – GSQL; UDC – Datasets; SCAAS – DML/DDL
  - Low Latency User Experience
  - SQL to Any Store: stores supported by Gimel
  - Highly available architecture: Software & Hardware
  - Query via REST (work in progress)
  - Query Guard: kills runaway queries
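
The Query Guard feature listed above is described only as killing runaway queries. A minimal sketch of that idea, assuming "runaway" means exceeding a wall-clock limit, follows; `QueryGuardSketch`, `RunningQuery`, `runaways`, and `enforce` are hypothetical names, and the kill action is passed in by the caller because the slides do not say how GTS cancels a query.

```scala
// Hypothetical sketch of a query guard; the names and the time-based rule are assumptions.
object QueryGuardSketch {
  final case class RunningQuery(id: String, user: String, startedAtMs: Long)

  // Return the queries that have been running longer than the allowed limit.
  def runaways(queries: Seq[RunningQuery], nowMs: Long, maxRuntimeMs: Long): Seq[RunningQuery] =
    queries.filter(query => nowMs - query.startedAtMs > maxRuntimeMs)

  // Apply a caller-supplied kill action to each runaway and report the ids that were killed.
  def enforce(queries: Seq[RunningQuery], nowMs: Long, maxRuntimeMs: Long)(kill: RunningQuery => Unit): Seq[String] = {
    val offenders = runaways(queries, nowMs, maxRuntimeMs)
    offenders.foreach(kill)
    offenders.map(_.id)
  }
}
```

In a Spark-based server, one plausible kill action would be `SparkContext.cancelJobGroup` with a per-query job group id, but that wiring is an assumption and is left out of the sketch.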