UNIT 3.2 - Mining Frequent Patterns (part1).ppt

- 1. Data Mining: Concepts and Techniques (3rd ed.), Chapter 6. Jiawei Han, Micheline Kamber, and Jian Pei. University of Illinois at Urbana-Champaign & Simon Fraser University. ©2011 Han, Kamber & Pei. All rights reserved.

- 2. Chapter 6: Mining Frequent Patterns, Association and Correlations: Basic Concepts and Methods
  - Basic Concepts
  - Frequent Itemset Mining Methods
  - Which Patterns Are Interesting? Pattern Evaluation Methods
  - Summary

- 3. What Is Frequent Pattern Analysis?
  - Frequent pattern: a pattern (a set of items, subsequences, substructures, etc.) that occurs frequently in a data set

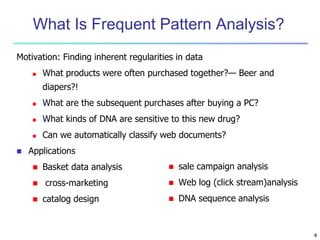

- 4. What Is Frequent Pattern Analysis?
  - Motivation: finding inherent regularities in data
    - What products were often purchased together? Beer and diapers?!
    - What are the subsequent purchases after buying a PC?
    - What kinds of DNA are sensitive to this new drug?
    - Can we automatically classify web documents?
  - Applications
    - Basket data analysis, cross-marketing, catalog design, sale campaign analysis, Web log (click stream) analysis, and DNA sequence analysis

- 5. Why Is Freq. Pattern Mining Important?
  - Freq. pattern: an intrinsic and important property of datasets
  - Foundation for many essential data mining tasks
    - Association, correlation, and causality analysis
    - Sequential, structural (e.g., sub-graph) patterns
    - Pattern analysis in spatiotemporal, multimedia, time-series, and stream data
    - Classification: discriminative, frequent pattern analysis
    - Cluster analysis: frequent pattern-based clustering
    - Data warehousing: iceberg cube and cube-gradient
    - Semantic data compression: fascicles
  - Broad applications

- 6. Basic Concepts: Frequent Patterns
  - itemset: a set of one or more items
  - k-itemset: X = {x1, …, xk}
  - (absolute) support, or support count, of X: the frequency (number of occurrences) of an itemset X
  - (relative) support, s: the fraction of transactions that contain X (i.e., the probability that a transaction contains X)
  - An itemset X is frequent if X's support is no less than a minsup threshold
  - Venn diagram labels: customer buys beer, customer buys diaper, customer buys both

  | Tid | Items bought |
  | --- | --- |
  | 10 | Beer, Nuts, Diaper |
  | 20 | Beer, Coffee, Diaper |
  | 30 | Beer, Diaper, Eggs |
  | 40 | Nuts, Eggs, Milk |
  | 50 | Nuts, Coffee, Diaper, Eggs, Milk |

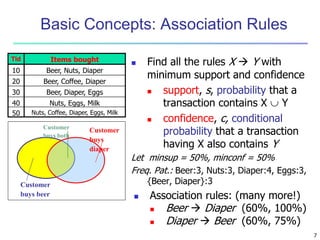
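
The definitions of support count, relative support, and "frequent" can be checked directly against the five-transaction table on the slide. The sketch below is a minimal illustration; the function names are our own and not part of the original deck.

```python
# Transaction table from the slide (Tid -> items bought).
transactions = {
    10: {"Beer", "Nuts", "Diaper"},
    20: {"Beer", "Coffee", "Diaper"},
    30: {"Beer", "Diaper", "Eggs"},
    40: {"Nuts", "Eggs", "Milk"},
    50: {"Nuts", "Coffee", "Diaper", "Eggs", "Milk"},
}


def support_count(itemset, db):
    """Absolute support: number of transactions that contain every item."""
    items = set(itemset)
    return sum(1 for bought in db.values() if items <= bought)


def relative_support(itemset, db):
    """Relative support: fraction of transactions that contain the itemset."""
    return support_count(itemset, db) / len(db)


def is_frequent(itemset, db, minsup):
    """An itemset is frequent if its relative support is no less than minsup."""
    return relative_support(itemset, db) >= minsup


print(support_count({"Beer", "Diaper"}, transactions))     # 3
print(relative_support({"Beer", "Diaper"}, transactions))  # 0.6
print(is_frequent({"Milk"}, transactions, 0.5))            # False
```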

- 7. Basic Concepts: Association Rules
  - Find all the rules X ⇒ Y with minimum support and confidence
    - support, s: probability that a transaction contains X ∪ Y
    - confidence, c: conditional probability that a transaction having X also contains Y
  - Let minsup = 50%, minconf = 50%
    - Freq. Pat.: Beer:3, Nuts:3, Diaper:4, Eggs:3, {Beer, Diaper}:3
  - Association rules (many more!):
    - Beer ⇒ Diaper (60%, 100%)
    - Diaper ⇒ Beer (60%, 75%)
  - Venn diagram labels: customer buys beer, customer buys diaper, customer buys both

  | Tid | Items bought |
  | --- | --- |
  | 10 | Beer, Nuts, Diaper |
  | 20 | Beer, Coffee, Diaper |
  | 30 | Beer, Diaper, Eggs |
  | 40 | Nuts, Eggs, Milk |
  | 50 | Nuts, Coffee, Diaper, Eggs, Milk |
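
The two rule figures on this slide can be reproduced from the transaction table. This is a small sketch with a helper name (`rule_metrics`) of our own choosing; it assumes the antecedent occurs in at least one transaction.

```python
# The five transactions from the slide, as sets of items.
db = [
    {"Beer", "Nuts", "Diaper"},
    {"Beer", "Coffee", "Diaper"},
    {"Beer", "Diaper", "Eggs"},
    {"Nuts", "Eggs", "Milk"},
    {"Nuts", "Coffee", "Diaper", "Eggs", "Milk"},
]


def rule_metrics(antecedent, consequent, db):
    """Return (support, confidence) of the rule antecedent => consequent."""
    x, y = set(antecedent), set(consequent)
    n_x = sum(1 for t in db if x <= t)          # transactions containing X
    n_xy = sum(1 for t in db if (x | y) <= t)   # transactions containing X and Y
    return n_xy / len(db), n_xy / n_x


print(rule_metrics({"Beer"}, {"Diaper"}, db))   # (0.6, 1.0)
print(rule_metrics({"Diaper"}, {"Beer"}, db))   # (0.6, 0.75)
```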

- 8. Closed Patterns and Max-Patterns
  - A long pattern contains a combinatorial number of sub-patterns, e.g., {a1, …, a100} contains $\binom{100}{1} + \binom{100}{2} + \cdots + \binom{100}{100} = 2^{100} - 1 \approx 1.27 \times 10^{30}$ sub-patterns!
  - Solution: mine closed frequent itemsets (closed patterns) and maximal frequent itemsets (max-patterns) instead
  - An itemset X is closed if X is frequent and there exists no super-pattern Y ⊃ X with the same support as X (proposed by Pasquier, et al. @ ICDT'99)
  - An itemset X is a max-pattern if X is frequent and there exists no frequent super-pattern Y ⊃ X (proposed by Bayardo @ SIGMOD'98)
  - The closed patterns are a lossless compression of the frequent patterns
    - Reduces the number of patterns and rules

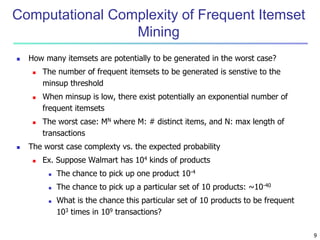
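
To make the closed and max-pattern definitions concrete, here is a brute-force sketch on a tiny hypothetical database (two transactions, `abcd` and `ab`, with a minimum support count of 1). The data and function names are assumptions for illustration only; exhaustive enumeration like this is only feasible for a handful of items.

```python
from itertools import combinations


def frequent_itemsets(db, min_count):
    """Enumerate every itemset and keep those with support count >= min_count."""
    items = sorted(set().union(*db))
    freq = {}
    for k in range(1, len(items) + 1):
        for combo in combinations(items, k):
            itemset = frozenset(combo)
            count = sum(1 for t in db if itemset <= t)
            if count >= min_count:
                freq[itemset] = count
    return freq


def closed_itemsets(freq):
    """Frequent itemsets with no proper superset of the same support."""
    return {x: c for x, c in freq.items()
            if not any(x < y and c == cy for y, cy in freq.items())}


def max_itemsets(freq):
    """Frequent itemsets with no frequent proper superset."""
    return {x: c for x, c in freq.items() if not any(x < y for y in freq)}


# Hypothetical toy database, not taken from the slides.
toy_db = [frozenset("abcd"), frozenset("ab")]
freq = frequent_itemsets(toy_db, min_count=1)
closed = closed_itemsets(freq)
maximal = max_itemsets(freq)
print(len(freq), len(closed), len(maximal))
```

Here 15 frequent itemsets shrink to two closed patterns ({a, b} with count 2 and {a, b, c, d} with count 1) and a single max-pattern. The closed patterns keep the support information; the max-pattern alone does not.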

- 9. Computational Complexity of Frequent Itemset Mining
  - How many itemsets may be generated in the worst case?
    - The number of frequent itemsets to be generated is sensitive to the minsup threshold
    - When minsup is low, there can be an exponential number of frequent itemsets
    - The worst case: $M^N$, where M is the number of distinct items and N is the maximum transaction length
  - The worst-case complexity vs. the expected probability
    - Ex. Suppose Walmart has $10^4$ kinds of products
    - The chance of picking up one particular product: $10^{-4}$
    - The chance of picking up a particular set of 10 products: ~$10^{-40}$
    - What is the chance that this particular set of 10 products is frequent, i.e., occurs $10^3$ times in $10^9$ transactions?

- 10. Chapter 6: Mining Frequent Patterns, Association and Correlations: Basic Concepts and Methods
  - Basic Concepts
  - Frequent Itemset Mining Methods
  - Which Patterns Are Interesting? Pattern Evaluation Methods
  - Summary

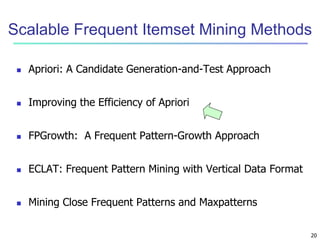

- 11. Scalable Frequent Itemset Mining Methods
  - Apriori: A Candidate Generation-and-Test Approach
  - Improving the Efficiency of Apriori
  - FPGrowth: A Frequent Pattern-Growth Approach
  - ECLAT: Frequent Pattern Mining with Vertical Data Format

- 12. Apriori: A Candidate Generation & Test Approach
  - Method:
    - Initially, scan DB once to get the frequent 1-itemsets
    - Generate length-(k+1) candidate itemsets from length-k frequent itemsets
    - Test the candidates against DB
    - Terminate when no frequent or candidate set can be generated
  - "How is the Apriori property used in the algorithm?"
    - The join step
    - The prune step

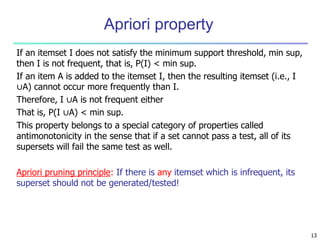

- 13. Apriori property
  - If an itemset I does not satisfy the minimum support threshold, min_sup, then I is not frequent, that is, P(I) < min_sup.
  - If an item A is added to the itemset I, then the resulting itemset (i.e., I ∪ A) cannot occur more frequently than I. Therefore, I ∪ A is not frequent either, that is, P(I ∪ A) < min_sup.
  - This property belongs to a special category of properties called antimonotonicity, in the sense that if a set cannot pass a test, all of its supersets will fail the same test as well.
  - Apriori pruning principle: if any itemset is infrequent, its supersets should not be generated or tested!

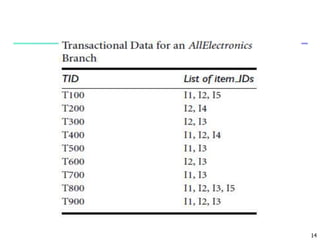
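
The join and prune steps can be sketched as a single candidate-generation function. This is one common way to write it, assuming itemsets are stored as sorted tuples; the function name `apriori_gen` and the sample `L2` below are illustrative, not taken from the slides.

```python
from itertools import combinations


def apriori_gen(frequent_k):
    """Build (k+1)-item candidates from frequent k-itemsets (sorted tuples)."""
    frequent_k = sorted(frequent_k)
    known = set(frequent_k)
    candidates = []
    for i, a in enumerate(frequent_k):
        for b in frequent_k[i + 1:]:
            # Join step: only combine itemsets sharing their first k-1 items.
            if a[:-1] != b[:-1]:
                break  # the list is sorted, so no later b shares the prefix
            candidate = a + (b[-1],)
            # Prune step (Apriori property): every k-subset must be frequent.
            if all(sub in known for sub in combinations(candidate, len(a))):
                candidates.append(candidate)
    return candidates


# Hypothetical frequent 2-itemsets.
L2 = [("A", "B"), ("A", "C"), ("B", "C"), ("B", "D")]
C3 = apriori_gen(L2)
print(C3)  # [('A', 'B', 'C')]
```

The join also produces ("B", "C", "D"), but it is pruned because its subset ("C", "D") is not frequent, so it never has to be counted against the database.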

- 14. 14

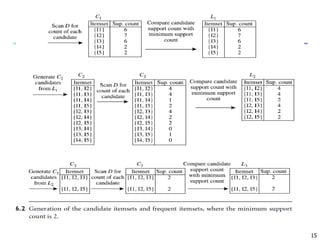

- 15. 15

- 16. The Apriori Algorithm (Pseudo-Code)
  - Ck: candidate itemsets of size k
  - Lk: frequent itemsets of size k
  - L1 = {frequent items};
  - for (k = 1; Lk ≠ ∅; k++) do begin
    - Ck+1 = candidates generated from Lk;
    - for each transaction t in database do
      - increment the count of all candidates in Ck+1 that are contained in t
    - Lk+1 = candidates in Ck+1 with min_support
  - end
  - return ∪k Lk;
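
A compact Python rendering of the pseudo-code above, as a sketch rather than an optimized implementation. It uses the beer/diaper transactions from the Basic Concepts slides with a minimum support count of 3 (50% of 5 transactions, rounded up); the function name and data layout are our own choices.

```python
from itertools import combinations


def apriori(transactions, min_count):
    """Level-wise frequent itemset mining; returns {itemset: support count}."""
    transactions = [frozenset(t) for t in transactions]
    # L1: count single items in one scan.
    counts = {}
    for t in transactions:
        for item in t:
            key = frozenset([item])
            counts[key] = counts.get(key, 0) + 1
    level = {s: c for s, c in counts.items() if c >= min_count}
    frequent = dict(level)
    k = 1
    while level:
        # C(k+1): join pairs of frequent k-itemsets, then prune any candidate
        # that has an infrequent k-subset (Apriori property).
        candidates = set()
        for a, b in combinations(level, 2):
            union = a | b
            if len(union) == k + 1 and all(
                frozenset(sub) in level for sub in combinations(union, k)
            ):
                candidates.add(union)
        # One database scan to count the surviving candidates.
        counts = {c: 0 for c in candidates}
        for t in transactions:
            for c in candidates:
                if c <= t:
                    counts[c] += 1
        level = {s: c for s, c in counts.items() if c >= min_count}
        frequent.update(level)
        k += 1
    return frequent


db = [
    {"Beer", "Nuts", "Diaper"},
    {"Beer", "Coffee", "Diaper"},
    {"Beer", "Diaper", "Eggs"},
    {"Nuts", "Eggs", "Milk"},
    {"Nuts", "Coffee", "Diaper", "Eggs", "Milk"},
]
result = apriori(db, min_count=3)
for itemset, count in result.items():
    print(sorted(itemset), count)
```

The result matches the frequent patterns listed on the association-rules slide: Beer:3, Nuts:3, Diaper:4, Eggs:3, {Beer, Diaper}:3.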

- 17. 17

- 18. Generating Association Rules from Frequent Itemsets
  - For each frequent itemset l, generate all nonempty proper subsets of l.
  - For every such subset s of l, output the rule "s ⇒ (l − s)" if its confidence, support_count(l) / support_count(s), meets the minimum confidence threshold.
  - The data contain frequent itemset X = {I1, I2, I5}. What are the association rules that can be generated from X?
  - The nonempty proper subsets of X are {I1, I2}, {I1, I5}, {I2, I5}, {I1}, {I2}, {I5}.

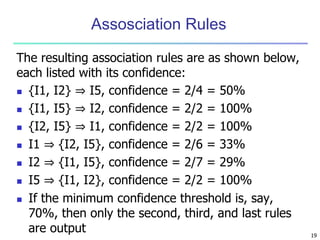

- 19. Association Rules
  - The resulting association rules are as shown below, each listed with its confidence:
    - {I1, I2} ⇒ I5, confidence = 2/4 = 50%
    - {I1, I5} ⇒ I2, confidence = 2/2 = 100%
    - {I2, I5} ⇒ I1, confidence = 2/2 = 100%
    - I1 ⇒ {I2, I5}, confidence = 2/6 = 33%
    - I2 ⇒ {I1, I5}, confidence = 2/7 = 29%
    - I5 ⇒ {I1, I2}, confidence = 2/2 = 100%
  - If the minimum confidence threshold is, say, 70%, then only the second, third, and last rules are output
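
The rule-generation step can be sketched in a few lines. The support counts below are read off the fractions on the slide (for example, 2/4 implies that {I1, I2, I5} occurs twice and {I1, I2} four times); the underlying transaction table is not reproduced here, and the function name is our own.

```python
from itertools import combinations

# Support counts implied by the confidence fractions on the slide.
support_count = {
    frozenset({"I1"}): 6,
    frozenset({"I2"}): 7,
    frozenset({"I5"}): 2,
    frozenset({"I1", "I2"}): 4,
    frozenset({"I1", "I5"}): 2,
    frozenset({"I2", "I5"}): 2,
    frozenset({"I1", "I2", "I5"}): 2,
}


def generate_rules(itemset, support_count, min_conf):
    """Yield (antecedent, consequent, confidence) for rules meeting min_conf."""
    itemset = frozenset(itemset)
    rules = []
    for size in range(1, len(itemset)):           # nonempty proper subsets
        for antecedent in combinations(sorted(itemset), size):
            s = frozenset(antecedent)
            conf = support_count[itemset] / support_count[s]
            if conf >= min_conf:
                rules.append((s, itemset - s, conf))
    return rules


rules = generate_rules({"I1", "I2", "I5"}, support_count, min_conf=0.7)
for s, rest, conf in rules:
    print(sorted(s), "=>", sorted(rest), f"{conf:.0%}")
```

With a 70% threshold, three rules survive, all at 100% confidence: I5 ⇒ {I1, I2}, {I1, I5} ⇒ I2, and {I2, I5} ⇒ I1.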

- 20. Scalable Frequent Itemset Mining Methods
  - Apriori: A Candidate Generation-and-Test Approach
  - Improving the Efficiency of Apriori
  - FPGrowth: A Frequent Pattern-Growth Approach
  - ECLAT: Frequent Pattern Mining with Vertical Data Format
  - Mining Closed Frequent Patterns and Max-Patterns

- 21. Further Improvement of the Apriori Method
  - Major computational challenges
    - Multiple scans of the transaction database
    - Huge number of candidates
    - Tedious workload of support counting for candidates
  - Improving Apriori: general ideas
    - Reduce passes of transaction database scans
    - Shrink the number of candidates
    - Facilitate support counting of candidates

- 22. Partition: Scan Database Only Twice
  - Any itemset that is potentially frequent in DB must be frequent in at least one of the partitions of DB
    - Scan 1: partition the database and find local frequent patterns
    - Scan 2: consolidate global frequent patterns
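
A rough sketch of the two-scan idea, using the beer/diaper transactions from the Basic Concepts slides. For brevity the local mining step enumerates itemsets by brute force, which is an assumption made here for illustration; a real implementation would run an efficient miner on each in-memory partition. All names are hypothetical.

```python
from itertools import combinations
from math import ceil


def local_frequent(partition, min_frac):
    """Itemsets whose relative support within this partition is >= min_frac."""
    items = sorted(set().union(*partition))
    found = set()
    for k in range(1, len(items) + 1):
        for combo in combinations(items, k):
            s = frozenset(combo)
            if sum(1 for t in partition if s <= t) >= min_frac * len(partition):
                found.add(s)
    return found


def partition_mine(db, n_parts, min_frac):
    size = ceil(len(db) / n_parts)
    parts = [db[i:i + size] for i in range(0, len(db), size)]
    # Scan 1: the union of local frequent itemsets is the global candidate set.
    candidates = set().union(*(local_frequent(p, min_frac) for p in parts))
    # Scan 2: count each candidate over the whole database.
    counts = {c: sum(1 for t in db if c <= t) for c in candidates}
    return {c: n for c, n in counts.items() if n >= min_frac * len(db)}


db = [
    frozenset({"Beer", "Nuts", "Diaper"}),
    frozenset({"Beer", "Coffee", "Diaper"}),
    frozenset({"Beer", "Diaper", "Eggs"}),
    frozenset({"Nuts", "Eggs", "Milk"}),
    frozenset({"Nuts", "Coffee", "Diaper", "Eggs", "Milk"}),
]
global_frequent = partition_mine(db, n_parts=2, min_frac=0.5)
```

Because the same relative threshold is applied locally and globally, no globally frequent itemset can be missed in scan 1; scan 2 only removes false positives.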

- 23. 23

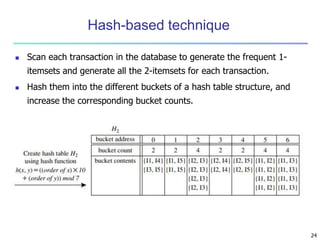

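- 24. Hash-based technique
  - Scan each transaction in the database to generate the frequent 1-itemsets, and generate all the 2-itemsets for each transaction.
  - Hash the 2-itemsets into the different buckets of a hash table structure, and increase the corresponding bucket counts.
  - A 2-itemset whose bucket count is below the support threshold cannot be frequent and can be removed from the candidate set.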
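
A minimal sketch of the bucket-counting idea. The hash function below, `(order(x) * 10 + order(y)) mod n_buckets` over alphabetically numbered items, and the bucket count of 7 are illustrative assumptions; any deterministic hash over item pairs would serve. The transactions are the beer/diaper table from the Basic Concepts slides.

```python
from itertools import combinations


def hash_filter_pairs(db, min_count, n_buckets=7):
    """One scan: count items and hash every 2-itemset into a bucket."""
    order = {item: i + 1 for i, item in enumerate(sorted(set().union(*db)))}

    def bucket(pair):
        x, y = pair
        return (order[x] * 10 + order[y]) % n_buckets

    item_counts = {}
    buckets = [0] * n_buckets
    for t in db:
        for item in t:
            item_counts[item] = item_counts.get(item, 0) + 1
        for pair in combinations(sorted(t), 2):
            buckets[bucket(pair)] += 1

    frequent_items = sorted(i for i, c in item_counts.items() if c >= min_count)
    # A pair can only be frequent if its whole bucket reaches min_count.
    candidates = [p for p in combinations(frequent_items, 2)
                  if buckets[bucket(p)] >= min_count]
    return candidates, buckets


db = [
    {"Beer", "Nuts", "Diaper"},
    {"Beer", "Coffee", "Diaper"},
    {"Beer", "Diaper", "Eggs"},
    {"Nuts", "Eggs", "Milk"},
    {"Nuts", "Coffee", "Diaper", "Eggs", "Milk"},
]
candidates, buckets = hash_filter_pairs(db, min_count=3)
print(candidates)
```

Collisions can leave infrequent pairs in the candidate set, so the survivors still need an exact count; the filter only guarantees that no frequent pair is discarded.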

- 25. 25

- 26. Transaction reduction
  - A transaction that does not contain any frequent k-itemsets cannot contain any frequent (k + 1)-itemsets.
  - Therefore, such a transaction can be marked or removed from further consideration, because subsequent database scans for j-itemsets, where j > k, will not need to consider it.

- 27. 27

- 28. Sampling for Frequent Patterns
  - Select a sample of the original database and mine frequent patterns within the sample using Apriori
  - Scan the database once to verify the frequent itemsets found in the sample; only the borders of the closure of frequent patterns are checked
    - Example: check abcd instead of ab, ac, …, etc.
  - Scan the database again to find missed frequent patterns

- 29. Scalable Frequent Itemset Mining Methods
  - Apriori: A Candidate Generation-and-Test Approach
  - Improving the Efficiency of Apriori
  - FPGrowth: A Frequent Pattern-Growth Approach
  - ECLAT: Frequent Pattern Mining with Vertical Data Format
  - Mining Closed Frequent Patterns and Max-Patterns

- 30. Pattern-Growth Approach: Mining Frequent Patterns Without Candidate Generation
  - Bottlenecks of the Apriori approach
    - Breadth-first (i.e., level-wise) search
    - Candidate generation and test: often generates a huge number of candidates
  - The FPGrowth approach
    - Depth-first search
    - Avoid explicit candidate generation

- 31. 31

- 32. 32

- 33. 33

- 34. Benefits of the FP-tree Structure
  - Completeness
    - Preserves complete information for frequent pattern mining
    - Never breaks a long pattern of any transaction
  - Compactness
    - Reduces irrelevant info: infrequent items are gone
    - Items are in frequency-descending order: the more frequently an item occurs, the more likely it is to be shared
    - Never larger than the original database (not counting node-links and the count field)

- 35. Advantages of the Pattern Growth Approach
  - Divide-and-conquer
    - Decompose both the mining task and DB according to the frequent patterns obtained so far
    - Leads to focused search of smaller databases
  - Other factors
    - No candidate generation, no candidate test
    - Compressed database: FP-tree structure
    - No repeated scan of the entire database
    - Basic ops: counting local frequent items and building sub FP-trees, no pattern search and matching
  - A good open-source implementation and refinement of FPGrowth: FPGrowth+ (Grahne and J. Zhu, FIMI'03)

- 36. Scalable Frequent Itemset Mining Methods
  - Apriori: A Candidate Generation-and-Test Approach
  - Improving the Efficiency of Apriori
  - FPGrowth: A Frequent Pattern-Growth Approach
  - ECLAT: Frequent Pattern Mining with Vertical Data Format
  - Mining Closed Frequent Patterns and Max-Patterns

- 37. ECLAT: Mining by Exploring Vertical Data Format
  - Vertical format: t(AB) = {T11, T25, …}
    - tid-list: list of transaction ids containing an itemset
  - Deriving frequent patterns based on vertical intersections
    - t(X) = t(Y): X and Y always happen together
    - t(X) ⊂ t(Y): a transaction having X always has Y
  - Using diffsets to accelerate mining
    - Only keep track of differences of tids
    - t(X) = {T1, T2, T3}, t(XY) = {T1, T3}
    - Diffset (XY, X) = {T2}
  - Eclat (Equivalence Class Transformation): a depth-first search algorithm using set intersection (Zaki et al. @ KDD'97)
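
The vertical-format idea can be sketched as follows: build one tid-list per item, then extend itemsets depth-first by intersecting tid-lists. This is a simplified illustration with our own function names, run on the beer/diaper transactions from the Basic Concepts slides; the diffset helper only mirrors the slide's definition and is not wired into the search.

```python
def vertical_format(db):
    """Convert {tid: items} into {item: set of tids}."""
    tidlists = {}
    for tid, items in db.items():
        for item in items:
            tidlists.setdefault(item, set()).add(tid)
    return tidlists


def eclat(prefix, items, min_count, out):
    """Depth-first search; items is a list of (item, tidset) pairs."""
    for i, (item, tids) in enumerate(items):
        if len(tids) < min_count:
            continue
        itemset = prefix + (item,)
        out[itemset] = len(tids)  # support count = size of the tid-list
        # Extend with later items: intersect tid-lists instead of rescanning.
        suffix = [(other, tids & other_tids) for other, other_tids in items[i + 1:]]
        eclat(itemset, suffix, min_count, out)
    return out


def diffset(tids_x, tids_xy):
    """Diffset(XY, X): tids that contain X but not XY."""
    return tids_x - tids_xy


db = {
    10: {"Beer", "Nuts", "Diaper"},
    20: {"Beer", "Coffee", "Diaper"},
    30: {"Beer", "Diaper", "Eggs"},
    40: {"Nuts", "Eggs", "Milk"},
    50: {"Nuts", "Coffee", "Diaper", "Eggs", "Milk"},
}
tidlists = vertical_format(db)
frequent = eclat((), sorted(tidlists.items()), min_count=3, out={})
print(frequent)

# The slide's diffset example: support(XY) = support(X) - |Diffset(XY, X)|.
print(diffset({"T1", "T2", "T3"}, {"T1", "T3"}))  # {'T2'}
```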

- 38. 38

- 39. Scalable Frequent Itemset Mining Methods
  - Apriori: A Candidate Generation-and-Test Approach
  - Improving the Efficiency of Apriori
  - FPGrowth: A Frequent Pattern-Growth Approach
  - ECLAT: Frequent Pattern Mining with Vertical Data Format
  - Mining Closed Frequent Patterns and Max-Patterns

- 40. Mining Frequent Closed Patterns
  - Item merging: If every transaction containing a frequent itemset X also contains an itemset Y but not any proper superset of Y, then X ∪ Y forms a frequent closed itemset and there is no need to search for any itemset containing X but not Y.
  - Sub-itemset pruning: If a frequent itemset X is a proper subset of an already found frequent closed itemset Y and support_count(X) = support_count(Y), then X and all of X's descendants in the set enumeration tree cannot be frequent closed itemsets and thus can be pruned.

- 41. Item skipping: In the depth-first mining of closed itemsets, at each level, there will be a prefix itemset X associated with a header table and a projected database. If a local frequent item p has the same support in several header tables at different levels, we can safely prune p from the header tables at higher levels.

- 42. CLOSET+: Mining Closed Itemsets by Pattern-Growth
  - Itemset merging: if Y appears in every occurrence of X, then Y is merged with X
  - Sub-itemset pruning: if Y ⊃ X and sup(X) = sup(Y), X and all of X's descendants in the set enumeration tree can be pruned
  - Hybrid tree projection
    - Bottom-up physical tree-projection
    - Top-down pseudo tree-projection
  - Item skipping: if a local frequent item has the same support in several header tables at different levels, one can prune it from the header table at higher levels
  - Efficient subset checking

- 43. MaxMiner: Mining Max-Patterns
  - 1st scan: find frequent items A, B, C, D, E
  - 2nd scan: find support for
    - AB, AC, AD, AE, ABCDE
    - BC, BD, BE, BCDE
    - CD, CE, CDE, DE
  - Potential max-patterns: the longest itemset counted for each starting item (ABCDE, BCDE, CDE)
  - Since BCDE is a max-pattern, there is no need to check BCD, BDE, CDE in a later scan

  | Tid | Items |
  | --- | --- |
  | 10 | A, B, C, D, E |
  | 20 | B, C, D, E |
  | 30 | A, C, D, F |

- 44. Chapter 6: Mining Frequent Patterns, Association and Correlations: Basic Concepts and Methods
  - Basic Concepts
  - Frequent Itemset Mining Methods
  - Which Patterns Are Interesting? Pattern Evaluation Methods
  - Summary

- 45. Strong Rules Are Not Necessarily Interesting
  - A misleading "strong" association rule
    - Of the 10,000 transactions analyzed, the data show that 6000 of the customer transactions included computer games, while 7500 included videos, and 4000 included both computer games and videos.
    - The rule computer games ⇒ videos therefore has support 4000/10,000 = 40% and confidence 4000/6000 ≈ 66%.
    - The rule is misleading because the probability of purchasing videos is 75%, which is even larger than 66%.
    - In fact, computer games and videos are negatively associated, because the purchase of one of these items actually decreases the likelihood of purchasing the other.

- 46. From Association Analysis to Correlation Analysis
  - A correlation measure should be used to augment the support-confidence framework for association rules.
  - A ⇒ B [support, confidence, correlation]

- 47. Interestingness Measure: Correlations (Lift)
  - play basketball ⇒ eat cereal [40%, 66.7%] is misleading
    - The overall % of students eating cereal is 75% > 66.7%.
  - play basketball ⇒ not eat cereal [20%, 33.3%] is more accurate, although with lower support and confidence
  - Measure of dependent/correlated events: lift

    $$\mathrm{lift} = \frac{P(A \cup B)}{P(A)\,P(B)}$$

    $$\mathrm{lift}(B, C) = \frac{2000/5000}{(3000/5000) \times (3750/5000)} = 0.89$$

    $$\mathrm{lift}(B, \neg C) = \frac{1000/5000}{(3000/5000) \times (1250/5000)} = 1.33$$

  | | Basketball | Not basketball | Sum (row) |
  | --- | --- | --- | --- |
  | Cereal | 2000 | 1750 | 3750 |
  | Not cereal | 1000 | 250 | 1250 |
  | Sum (col.) | 3000 | 2000 | 5000 |
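
The two lift values can be recomputed from the contingency table. A short sketch, with a function name and argument order of our own choosing:

```python
def lift(n_ab, n_a, n_b, n):
    """lift(A, B) = P(A and B) / (P(A) * P(B)), computed from raw counts."""
    return (n_ab / n) / ((n_a / n) * (n_b / n))


# Counts from the slide's table (5000 students in total).
lift_basketball_cereal = lift(n_ab=2000, n_a=3000, n_b=3750, n=5000)
lift_basketball_no_cereal = lift(n_ab=1000, n_a=3000, n_b=1250, n=5000)

print(round(lift_basketball_cereal, 2))     # 0.89
print(round(lift_basketball_no_cereal, 2))  # 1.33
```

A lift below 1 indicates negative correlation and a lift above 1 positive correlation, so playing basketball is negatively correlated with eating cereal and positively correlated with not eating it.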

- 48. χ² measure

- 49. Because the χ² value is greater than 1, and the observed value of the slot (game, video) = 4000 is less than the expected value of 4500, buying game and buying video are negatively correlated
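
The χ² statistic for the game/video example can be sketched from the counts given on the "Strong Rules Are Not Necessarily Interesting" slide (10,000 transactions, 6000 with games, 7500 with videos, 4000 with both). The function below is an illustrative implementation for a 2×2 table, summing (observed − expected)² / expected over the four cells; its name and interface are assumptions.

```python
def chi_square_2x2(n_ab, n_a, n_b, n):
    """Return (chi-square statistic, expected counts) for a 2x2 table."""
    observed = {
        (True, True): n_ab,                       # A and B
        (True, False): n_a - n_ab,                # A, not B
        (False, True): n_b - n_ab,                # not A, B
        (False, False): n - n_a - n_b + n_ab,     # neither
    }
    chi2 = 0.0
    expected = {}
    for (has_a, has_b), obs in observed.items():
        row_total = n_a if has_a else n - n_a
        col_total = n_b if has_b else n - n_b
        exp = row_total * col_total / n           # expected under independence
        expected[(has_a, has_b)] = exp
        chi2 += (obs - exp) ** 2 / exp
    return chi2, expected


chi2, expected = chi_square_2x2(n_ab=4000, n_a=6000, n_b=7500, n=10_000)
print(round(chi2, 1))             # 555.6
print(expected[(True, True)])     # 4500.0
```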

- 50. Other Pattern Evaluation Measures
  - all_confidence
  - max_confidence
  - Kulczynski
  - cosine
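
The slide lists the four measures by name only. The sketch below uses their usual textbook definitions in terms of the conditional probabilities P(A|B) and P(B|A), applied to the game/video counts from the earlier slide; the function names are our own.

```python
from math import sqrt


def all_confidence(sup_ab, sup_a, sup_b):
    """min(P(A|B), P(B|A)) = sup(A u B) / max(sup(A), sup(B))."""
    return sup_ab / max(sup_a, sup_b)


def max_confidence(sup_ab, sup_a, sup_b):
    """max(P(A|B), P(B|A))."""
    return max(sup_ab / sup_a, sup_ab / sup_b)


def kulczynski(sup_ab, sup_a, sup_b):
    """Average of the two conditional probabilities."""
    return 0.5 * (sup_ab / sup_a + sup_ab / sup_b)


def cosine(sup_ab, sup_a, sup_b):
    """sup(A u B) / sqrt(sup(A) * sup(B))."""
    return sup_ab / sqrt(sup_a * sup_b)


# Game/video example: 4000 both, 6000 games, 7500 videos.
counts = (4000, 6000, 7500)
for measure in (all_confidence, max_confidence, kulczynski, cosine):
    print(measure.__name__, round(measure(*counts), 3))
```

None of these formulas uses the total number of transactions, which is why they are unaffected by transactions containing neither itemset (null-invariance).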

- 51. Comparison of Pattern Evaluation Measures

- 52. Which Null-Invariant Measure Is Better?
  - IR (Imbalance Ratio): measures the imbalance of two itemsets A and B in rule implications
  - Kulczynski and Imbalance Ratio (IR) together present a clear picture for all three datasets D4 through D6
    - D4 is balanced & neutral
    - D5 is imbalanced & neutral
    - D6 is very imbalanced & neutral
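
A small sketch of how Kulczynski and IR complement each other, using the usual definition IR(A, B) = |sup(A) − sup(B)| / (sup(A) + sup(B) − sup(A ∪ B)). The counts below are hypothetical and chosen only to show a balanced and an imbalanced case; they are not the D4 to D6 values from the slide's table, which is not reproduced in this transcript.

```python
def kulczynski(sup_ab, sup_a, sup_b):
    """Average of P(A|B) and P(B|A)."""
    return 0.5 * (sup_ab / sup_a + sup_ab / sup_b)


def imbalance_ratio(sup_ab, sup_a, sup_b):
    """0 when the two itemsets are equally frequent, approaching 1 when skewed."""
    return abs(sup_a - sup_b) / (sup_a + sup_b - sup_ab)


# Hypothetical support counts: (sup(A u B), sup(A), sup(B)).
balanced = (1000, 2000, 2000)
imbalanced = (1000, 11000, 1100)

for name, counts in (("balanced", balanced), ("imbalanced", imbalanced)):
    print(name, round(kulczynski(*counts), 2), round(imbalance_ratio(*counts), 2))
```

Both cases have a Kulczynski value of 0.5 (neutral), yet the IR separates them: 0 for the balanced case and about 0.89 for the imbalanced one.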

- 53. Chapter 6: Mining Frequent Patterns, Association and Correlations: Basic Concepts and Methods
  - Basic Concepts
  - Frequent Itemset Mining Methods
  - Which Patterns Are Interesting? Pattern Evaluation Methods
  - Summary

- 54. Summary
  - Basic concepts: association rules, support-confidence framework, closed and max-patterns
  - Scalable frequent pattern mining methods
    - Apriori (candidate generation & test)
    - Projection-based (FPgrowth, CLOSET+, ...)
    - Vertical format approach (ECLAT, CHARM, ...)
  - Which patterns are interesting? Pattern evaluation methods
