Using PSO to Optimize a Logit Model with TensorFlow

- 1. Using a logit model and particle swarm optimization: an example of combining an evolutionary algorithm with machine learning. Markliou, 10/22/2017

- 2. About the speaker • Education – Bioinformatics and Systems Biology – Biotechnology – Life Science • Experience – Data Scientist at Light Up Biotech. Corp. – Postdoctoral fellow at NCTU – Research Assistant at NCTU – Taoyuan City 2017 (ROC year 106) beginner and advanced training program for school IT section chiefs (Docker teaching assistant) 2017/10/22

- 3. Outline • The concept of machine learning – Linear regression – Logistic regression • The concept of evolutionary algorithms – Particle swarm optimization • Combining machine learning with evolutionary algorithms – Using the logit model and PSO as an example – Aim: use PSO to solve for the parameters of the logit model

- 4. The concept of machine learning: 1. Users have data. 2. Users want to predict unknown data. (Figure: gathered data → training → model; unknown data → test → results.) Trivia: "test" is not "testing". Using existing data to find the relation between variables? That's statistics.

- 5. Why logistic regression? Some concepts in machine learning also come from statistics, and the two are quite similar! You can find logit models in: 1. Traditional statistics, e.g., survival analysis 2. Machine learning

- 6. Logistic regression – from statistics. Ideal setting: the relationship between the variables is linear. Continuous outcome: minimize the least-squares error. Categorical outcome: treated as continuous, with some error. $Y = a_1x_1 + a_2x_2 + \dots + a_nx_n + b$ (figure: the errors around the fitted line follow a normal distribution). Never forget: least squares assumes normally distributed errors. Under this assumption the model is a straight line, and extreme errors rarely happen.
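To make the "ideal setting" concrete, here is a minimal NumPy sketch (not from the original deck) that generates data from a hypothetical linear model $Y = a_1x_1 + a_2x_2 + b$ with normally distributed errors and recovers the coefficients by least squares. The coefficient values, noise level, and sample size are arbitrary assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical "true" model: Y = a1*x1 + a2*x2 + b plus normally distributed errors.
a_true = np.array([2.0, -1.0])
b_true = 0.5
X = rng.normal(size=(500, 2))
Y = X @ a_true + b_true + rng.normal(scale=0.3, size=500)

# Least squares: append a column of ones so the intercept b is estimated too.
X1 = np.hstack([X, np.ones((500, 1))])
coef, *_ = np.linalg.lstsq(X1, Y, rcond=None)
a_hat, b_hat = coef[:2], coef[2]

# With an intercept in the model, the residuals average out to (numerically) zero.
residuals = Y - X1 @ coef
print(a_hat, b_hat)
```

Because the errors really are normal here, least squares is the maximum-likelihood fit and the estimates land close to the true values.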

- 7. Logistic regression – from statistics. Classification case. Setting: the relationship is linear; continuous outcome: least-squares errors; categorical outcome: treated as continuous, with some error. $Y = a_1x_1 + a_2x_2 + \dots + a_nx_n + b$ (figure: the errors are NOT normally distributed). If you try to fit a line to class labels, this approach still produces a straight line, and the result looks like the figure. Clearly, the errors between the line and the data will not be normally distributed. That is why classification problems usually do not use MSE as the loss (you can use MSE, but it can end in tragedy).
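A quick way to see the problem on this slide: fit an ordinary least-squares line to 0/1 class labels. In this sketch (illustrative data, not from the deck) the fitted line predicts values below 0 and above 1, which cannot be probabilities.

```python
import numpy as np

# Binary labels: class 1 when x > 0, class 0 otherwise (illustrative data).
x = np.linspace(-3, 3, 61)
y = (x > 0).astype(float)

# Ordinary least-squares line y ≈ slope * x + intercept (np.polyfit with degree 1).
slope, intercept = np.polyfit(x, y, 1)
pred = slope * x + intercept

# The straight line leaves the [0, 1] range at both ends of the data.
print(pred.min(), pred.max())
```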

- 8. Logistic regression – from statistics. To solve this problem: we already know the data will not be normally distributed… so……… (figure: http://www.appstate.edu/~whiteheadjc/service/logit/logit.gif)
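The S-shaped curve in the figure is the logistic (sigmoid) function, and its inverse is the logit (log-odds). A minimal NumPy sketch of both, with illustrative inputs:

```python
import numpy as np

def sigmoid(z):
    """Logistic function: maps any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def logit(p):
    """Inverse of the sigmoid: the log-odds log(p / (1 - p))."""
    return np.log(p / (1.0 - p))

z = np.array([-5.0, 0.0, 5.0])
p = sigmoid(z)
print(p)          # every value lies strictly between 0 and 1
print(logit(p))   # recovers z, up to floating-point error
```

The linear part $a_1x_1 + \dots + b$ can take any real value; passing it through the sigmoid keeps the prediction inside (0, 1).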

- 9. Logistic regression – from statistics. Usually the outcome is bounded between 0 and 1. Why not use a ratio? $\log\frac{P}{1-P} = \sum_{i=1}^{n} a_i x_i + b$, where $P$ is the probability of being a case and $1-P$ the probability of being a control (all samples except the cases). The ratio $P/(1-P)$ is the odds. Following maximum likelihood, an optimization method gives the coefficients and hence the odds ratios ($e^{a_i}$ for a one-unit increase in $x_i$): 1. Newton's method 2. Gradient descent (source: http://janda.org/workshop/Discriminant%20analysis/Talk/talk01.htm)
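Newton's method, the first option listed on this slide, can be sketched in a few lines of NumPy. This is an illustrative Newton-Raphson maximum-likelihood fit, not the author's code. The function name `fit_logit_newton`, the fixed iteration count, and the synthetic "true" coefficients are all assumptions.

```python
import numpy as np

def fit_logit_newton(X, y, n_iter=25):
    """Maximum-likelihood logistic regression by Newton-Raphson (hypothetical helper).

    X: (n, d) feature matrix; y: (n,) array of 0/1 labels (1 = case).
    Returns (a, b): coefficients a_1..a_d and intercept b.
    """
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])        # last column -> intercept b
    w = np.zeros(Xb.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-Xb @ w))                # P(case | x)
        grad = Xb.T @ (y - p)                            # gradient of the log-likelihood
        hess = Xb.T @ (Xb * (p * (1.0 - p))[:, None])    # negative Hessian
        w = w + np.linalg.solve(hess, grad)              # Newton step toward the maximum
    return w[:-1], w[-1]

# Synthetic data from a known model (hypothetical coefficients).
rng = np.random.default_rng(1)
X = rng.normal(size=(5000, 2))
a_true, b_true = np.array([1.5, -2.0]), 0.5
p_true = 1.0 / (1.0 + np.exp(-(X @ a_true + b_true)))
y = (rng.random(5000) < p_true).astype(float)

a_hat, b_hat = fit_logit_newton(X, y)
print(a_hat, b_hat)
print(np.exp(a_hat))  # odds ratio for a one-unit increase in each x_i
```

Newton's method uses second-order information, so on a well-behaved (non-separable) data set it typically converges in a handful of iterations.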

- 10. Maximum likelihood vs. cross entropy • In machine learning, the loss is usually cross entropy • In statistics, the objective is usually maximum likelihood • But don't worry, they are similar… Alarm!!!!! Math time~~

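- 11. The relation between BCE and ML • BCE = binary cross entropy, ML = maximum likelihood • Setting: the problem is a simple binary classification – The likelihood can then be written as a Bernoulli distribution: $p(y\mid\theta) = \prod_{i=1}^{n} \theta_i^{y_i}(1-\theta_i)^{1-y_i}$ This is a distribution from the model, so let $p_{\theta'}(y\mid x)$ denote the trained model with parameters $\theta'$: $p(y\mid x,\theta') = \prod_{i=1}^{n} p_{\theta'}(y\mid x_i)^{y_i}\,(1-p_{\theta'}(y\mid x_i))^{1-y_i}$ How about taking the log? $f(\theta'; x, y) = \sum_{i=1}^{n} \left[ y_i \log p_{\theta'}(y\mid x_i) + (1-y_i)\log(1-p_{\theta'}(y\mid x_i)) \right]$ This is BCE, up to the sign: BCE is the negative of this log-likelihood, usually averaged over the $n$ samples. (Source: https://stats.stackexchange.com/questions/260505/machine-learning-should-i-use-a-categorical-cross-entropy-or-binary-cross-entro)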
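A small numerical check of the derivation above: the log of the Bernoulli likelihood equals $f$, and the mean binary cross entropy equals $-f/n$. The labels and model outputs are made-up values for illustration.

```python
import numpy as np

# Hypothetical labels y_i and model outputs p_theta'(y | x_i).
y = np.array([1.0, 0.0, 1.0, 1.0, 0.0])
p = np.array([0.9, 0.2, 0.7, 0.6, 0.1])

# Bernoulli likelihood: product over samples of p^y * (1 - p)^(1 - y).
likelihood = np.prod(p**y * (1.0 - p)**(1.0 - y))

# Log-likelihood f: sum of y*log(p) + (1 - y)*log(1 - p).
f = np.sum(y * np.log(p) + (1.0 - y) * np.log(1.0 - p))

# Binary cross entropy as many ML libraries report it: the mean of the negated terms.
bce = -np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p))

print(np.log(likelihood), f)   # identical up to floating-point error
print(bce, -f / len(y))        # identical: BCE = -f / n
```

So minimizing BCE and maximizing the Bernoulli likelihood pick the same parameters.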

- 12. The math time is over~~~ • Alarm released… • The conclusion: – Minimizing BCE is similar to maximizing the likelihood – In most cases they are the same • But… can I interpret the weights obtained with machine-learning techniques? – The answer is "it's not suitable" • Because of the relation between the matrix and the samples

- 13. THEN… HOW TO GET THE COEFFICIENTS IS THE INTERESTING PART. Newton? Gradient descent? This time we use particle swarm optimization…

- 14. Search landscape for the optimal solution, using protein folding as an example (figures: http://www.nature.com/nsmb/journal/v16/n6/fig_tab/nsmb.1591_F1.html, https://parasol.tamu.edu/groups/amatogroup/research/computationalBio/slide/EnergyLandscape.gif)

- 16. The problem of searching for solutions • The only way to be sure of the best solution is to scan the whole space. – This takes a long time. • If we cannot find the best solution, an acceptable solution is desired. – Traditional methods (based on numerical analysis) – Heuristic algorithms (based on randomness)

- 17. Gradient descent. Assume we have some cost function $C(x_1,\dots,x_n)$ that we want to minimize over the continuous variables $x_1, x_2, \dots, x_n$. 1. Compute the gradient: $\frac{\partial C(x_1,\dots,x_n)}{\partial x_i}$ for every $i$. 2. Take a small step downhill, i.e., against the gradient: $x_i' = x_i - \lambda \frac{\partial C(x_1,\dots,x_n)}{\partial x_i}$, where $\lambda$ is a small step size. 3. Check whether $C(x_1,\dots,x_i',\dots,x_n) < C(x_1,\dots,x_i,\dots,x_n)$. 4. If true, accept the move; if not, reject it. 5. Repeat. (Source: https://www.ics.uci.edu/~welling/teaching/.../LocalSearch271f09.pdf)
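The five steps on this slide translate almost line by line into code. This is a minimal NumPy sketch; the quadratic example cost, the starting point, and the choice to halve $\lambda$ after a rejected move (so the loop can keep making progress) are assumptions, not part of the slide.

```python
import numpy as np

def gradient_descent(cost, grad, x0, lam=0.1, n_iter=1000):
    """Gradient descent following steps 1-5 above.

    lam is the step size. Halving lam after a rejected move is an
    assumption added here, not part of the original recipe.
    """
    x = np.asarray(x0, dtype=float)
    for _ in range(n_iter):                  # step 5: repeat
        x_new = x - lam * grad(x)            # steps 1-2: gradient, then a small step downhill
        if cost(x_new) < cost(x):            # step 3: did the cost decrease?
            x = x_new                        # step 4: accept the move
        else:
            lam *= 0.5                       # step 4: reject it (and shrink the step)
    return x

# Example cost: C(x1, x2) = (x1 - 1)^2 + (x2 + 2)^2, minimum at (1, -2).
center = np.array([1.0, -2.0])

def cost(x):
    return np.sum((x - center) ** 2)

def grad(x):
    return 2.0 * (x - center)

print(gradient_descent(cost, grad, x0=[0.0, 0.0]))
```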

- 18. Problems • Gradient descent and the learning rate (figure: http://sebastianraschka.com/Articles/2015_singlelayer_neurons.html)
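One concrete way the learning rate goes wrong: on $C(x) = x^2$, each plain gradient step multiplies $x$ by $(1 - 2\lambda)$, so a step size that is too large overshoots the minimum and diverges. The step sizes below are illustrative choices.

```python
def run_gd(lam, x0=1.0, n_steps=50):
    """Plain gradient descent on C(x) = x^2 (gradient 2x) with step size lam."""
    x = x0
    for _ in range(n_steps):
        x = x - lam * 2.0 * x   # each step multiplies x by (1 - 2*lam)
    return x

print(run_gd(0.1))   # |1 - 0.2| < 1: shrinks toward the minimum at 0
print(run_gd(1.1))   # |1 - 2.2| > 1: overshoots further each step and diverges
```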

- 19. Why heuristic algorithms? • This kind of method has a chance to fly over the hilltops. • Classical and heuristic algorithms are widely applied in many practical areas. – E.g., machine learning

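- 20. Concepts of GA. Environment (fitness): Hypertension = f(x) = A1*age + A2*height + A3*weight + A4*name. Each individual carries the genes A1 A2 A3 A4 (figure: a population of such individuals). The fitness is set as whether the person has hypertension. When put back into the original environment, individuals with high fitness survive and get the chance to pass their good genes on to the next generation.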
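The survive-and-reproduce loop described above can be sketched as a small genetic algorithm over the four genes A1..A4. This is an illustration under stated assumptions: the fitness is a hypothetical target-matching function (not the hypertension data), and truncation selection, uniform crossover, and Gaussian mutation are one common set of choices among many.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical fitness: how close the genes (A1..A4) are to a made-up target vector.
# In the slide's example the fitness would instead measure how well
# A1*age + A2*height + A3*weight + A4*name predicts hypertension.
TARGET = np.array([0.8, 0.1, 0.5, 0.0])

def fitness(genes):
    return -np.sum((genes - TARGET) ** 2)   # higher is better

def evolve(pop_size=30, n_genes=4, n_generations=50, mutation_scale=0.1):
    pop = rng.normal(size=(pop_size, n_genes))           # random initial individuals
    history = []
    for _ in range(n_generations):
        scores = np.array([fitness(ind) for ind in pop])
        history.append(scores.max())
        order = np.argsort(scores)[::-1]
        parents = pop[order[: pop_size // 2]]            # selection: the fitter half survives
        children = []
        while len(children) < pop_size - len(parents):
            i, j = rng.choice(len(parents), size=2, replace=False)
            mask = rng.random(n_genes) < 0.5             # uniform crossover of two parents
            child = np.where(mask, parents[i], parents[j])
            child = child + rng.normal(scale=mutation_scale, size=n_genes)  # mutation
            children.append(child)
        pop = np.vstack([parents, np.array(children)])   # next generation
    scores = np.array([fitness(ind) for ind in pop])
    return pop[np.argmax(scores)], history + [scores.max()]

best, history = evolve()
print(best, history[0], history[-1])
```

Because the surviving parents are copied unchanged into the next generation, the best fitness never gets worse from one generation to the next.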

- 21. Simple schema of GA. I have forgotten the source; if anyone knows it, please tell me.

- 22. Swarm intelligence • Nature provides inspiration to computer scientists in many ways. One source of such inspiration is the way in which natural organisms behave • In other words, if we consider the group itself as an individual – the swarm – in some ways, at least, the swarm seems to be more intelligent than any of the individuals within it when they are in groups. • -- David Corne, Alan Reynolds and Eric Bonabeau, “Swarm Intelligence”

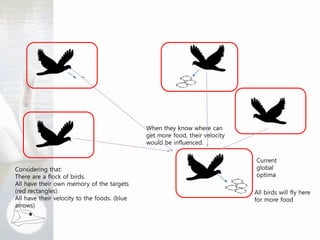

- 23. Particle swarm optimization (PSO) • Inspired by birds searching for food • Every bird is treated as a particle in the PSO system • Each particle has some characteristics – Memory of the current global optimum, possibly provided by other birds – Memory of its current local optimum, provided by itself – Its velocity

- 24. • Boid = bird + -oid (like, mimic, etc.) • The birds move toward the food • But after passing it, they may find another food source. Hypothesis: places near where there is more food are likely to have even more food. (Figure labels: original target, updated target, personal optimal solution)

- 25. Consider a flock of birds. Each bird has its own memory of the targets (red rectangles) and its own velocity toward the food (blue arrows). When the birds learn where more food can be found, their velocities are influenced. Current global optimum: all birds will fly here for more food.

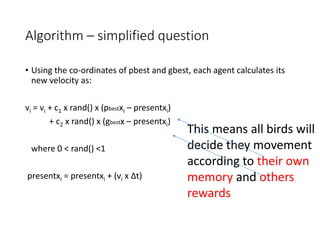

- 26. Algorithm – simplified question • Using the coordinates of pbest and gbest, each agent calculates its new velocity as $v_i = v_i + c_1 \cdot \mathrm{rand}() \cdot (pbest_i - present_i) + c_2 \cdot \mathrm{rand}() \cdot (gbest - present_i)$, where $0 < \mathrm{rand}() < 1$, and then moves to $present_i = present_i + v_i \cdot \Delta t$. This means every bird decides its movement according to its own memory and the rewards found by the others.
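The simplified update above, written for a single coordinate of a single particle. This is a sketch: the name `update_particle` is hypothetical, and the defaults $c_1 = c_2 = 2.0$ and $\Delta t = 1$ are commonly used values assumed here, not taken from the slide. Python's `random.random()` draws from $[0, 1)$, which matches the slide's $0 < \mathrm{rand}() < 1$ in practice.

```python
import random

def update_particle(v, present, pbest, gbest, c1=2.0, c2=2.0, dt=1.0):
    """One simplified PSO update for a single coordinate (hypothetical helper).

    c1, c2 and dt defaults are assumptions; a fresh random number is drawn
    for each of the two attraction terms.
    """
    v = v + c1 * random.random() * (pbest - present) + c2 * random.random() * (gbest - present)
    present = present + v * dt
    return v, present

random.seed(0)
# A particle at 0 with no velocity, pulled toward pbest = gbest = 1.
v, pos = update_particle(0.0, 0.0, 1.0, 1.0)
print(v, pos)
```

If a bird already sits on both its personal best and the global best, the attraction terms vanish and it simply keeps drifting with its current velocity.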

- 27. Algorithm – general (n-dimensional) question • In n-dimensional space:

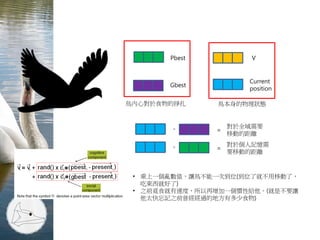

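- 28. (Figure labels: V, Pbest, Gbest, current position) • Gbest − current position = the distance the bird needs to move toward the global best • Pbest − current position = the distance the bird needs to move toward its personal memory • Each distance is multiplied by a random number so the bird cannot arrive in one step (if it arrived, it would not need to move; it could just eat) • The bird already had a velocity while foraging, so we also give it inertia (so that it does not quickly forget how much food there was at the places it passed before). Figure labels: the bird's own physical state; the bird's inner struggle over food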
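Putting slides 26 to 28 together (random pulls toward pbest and gbest, plus inertia on the previous velocity, in n dimensions) gives a complete minimizer. This NumPy sketch is not the deck's PSO code. The inertia weight `w`, the coefficients `c1` and `c2`, the search bounds, and the sphere test function are all assumed values for illustration.

```python
import numpy as np

def pso_minimize(f, dim, n_particles=30, n_iter=200, w=0.7, c1=1.5, c2=1.5,
                 bounds=(-5.0, 5.0), seed=0):
    """Minimal PSO with an inertia weight w (all parameter values are assumptions).

    f maps a 1-D array of length dim to a scalar cost (lower is better).
    """
    rng = np.random.default_rng(seed)
    lo, hi = bounds
    x = rng.uniform(lo, hi, size=(n_particles, dim))    # current positions
    v = np.zeros((n_particles, dim))                     # velocities
    pbest = x.copy()                                     # personal memories
    pbest_cost = np.array([f(p) for p in x])
    gbest = pbest[np.argmin(pbest_cost)].copy()          # global memory
    for _ in range(n_iter):
        r1 = rng.random((n_particles, dim))              # fresh random numbers per dimension
        r2 = rng.random((n_particles, dim))
        v = w * v + c1 * r1 * (pbest - x) + c2 * r2 * (gbest - x)   # inertia + two pulls
        x = x + v
        cost = np.array([f(p) for p in x])
        improved = cost < pbest_cost                     # update personal bests
        pbest[improved] = x[improved]
        pbest_cost[improved] = cost[improved]
        gbest = pbest[np.argmin(pbest_cost)].copy()      # update global best
    return gbest, pbest_cost.min()

# Example: sphere function, minimum 0 at the origin.
best_x, best_cost = pso_minimize(lambda p: np.sum(p ** 2), dim=3)
print(best_x, best_cost)
```

The term `w * v` is the inertia from slide 28; setting `w = 1` and dropping the random factors would recover a much less stable version of the simplified update.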

- 29. Code implementation • In this work, we need two basic pieces of code and have to merge them – Logistic regression • aymericdamien: TensorFlow-Examples • https://goo.gl/LkQxDx – PSO • Nathan A. Rooy • https://goo.gl/89vcSA – Dataset • Since the original LR example uses MNIST, we use IRIS.csv instead. • We use an in-house script to split the data (also available on GitHub) – https://github.com/markliou/LR_PSO_Tensorflow

- 30. Some tips in this work • The flowchart of this script: PSO → logit model: 1. Make the weight and bias tensors 2. Feed these tensors into the logit model • Return the logit model's performance (as the fitness) Tips: 1. Calculating the fitness requires running the tensors in the session, so we need to pass the session object into the PSO. 2. The PSO tensors are made outside the session; using `tf.Variable` would accelerate the speed.
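The same flow (PSO proposes weights and a bias, the logit model returns its performance as the fitness) can be sketched without TensorFlow. In this sketch NumPy stands in for the TensorFlow session, so it is a substitution for illustration, not the author's implementation. The synthetic two-class data, the PSO parameters, and the names `bce_loss` and `pso_fit_logit` are assumptions. The fitness is the binary cross entropy from slide 11, computed in the numerically stable form $\log(1 + e^{z}) - y z$ with $z = Xa + b$.

```python
import numpy as np

def bce_loss(params, X, y):
    """Fitness for PSO: binary cross entropy of a logit model (lower is better).

    params packs the weights a_1..a_d followed by the bias b.
    Uses the identity BCE = mean(log(1 + e^z) - y*z), which avoids log(0).
    """
    a, b = params[:-1], params[-1]
    z = X @ a + b
    return np.mean(np.logaddexp(0.0, z) - y * z)

def pso_fit_logit(X, y, n_particles=30, n_iter=300, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Search logit-model parameters with a minimal PSO (parameter values assumed)."""
    rng = np.random.default_rng(seed)
    dim = X.shape[1] + 1                                  # weights + bias
    pos = rng.uniform(-1.0, 1.0, size=(n_particles, dim))
    vel = np.zeros_like(pos)
    pbest = pos.copy()
    pbest_cost = np.array([bce_loss(p, X, y) for p in pos])
    gbest = pbest[np.argmin(pbest_cost)].copy()
    for _ in range(n_iter):
        r1, r2 = rng.random((2, n_particles, dim))
        vel = w * vel + c1 * r1 * (pbest - pos) + c2 * r2 * (gbest - pos)
        pos = pos + vel
        cost = np.array([bce_loss(p, X, y) for p in pos])   # logit model performance
        better = cost < pbest_cost
        pbest[better], pbest_cost[better] = pos[better], cost[better]
        gbest = pbest[np.argmin(pbest_cost)].copy()
    return gbest, pbest_cost.min()

# Synthetic two-class data (a stand-in for IRIS.csv).
rng = np.random.default_rng(3)
X = rng.normal(size=(200, 2))
y = (X @ np.array([2.0, -1.0]) + 0.3 + 0.5 * rng.normal(size=200) > 0).astype(float)

params, loss = pso_fit_logit(X, y)
acc = np.mean(((X @ params[:-1] + params[-1]) > 0) == y)
print(params, loss, acc)
```

No gradient is computed anywhere: the PSO only needs the loss value for each candidate, which is why the fitness call can be swapped for a session run in the TensorFlow version.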

- 32. THANKS FOR YOUR ATTENTION. Any questions?