Virtualization Cloud computing technology

- 2. Virtualization •Section - 1 CLOUD COMPUTING

- 3. Virtualization • It is a technique, which allows to share single physical instance of an application or resource among multiple organizations or tenants. • Splitting a physical resource into as many logical resources as we want e.g. CPU, Memory. • Virtualization is a technology that transforms hardware into software. • Examples: Virtual classroom, Virtual-holographic 3d imagery, Virtual machine

- 4. Splitting •Adding (internal memory + memory card)

- 5. Case Study • Data centre setup • 2000 ft2 data centre • 100 physical servers (30% for infra and 70% for application) • Cooling system for 100 servers • 100 physical disks for servers • Cabling for 100 servers and powerwhip • Data centre staff (OpEx) • Electricity and power backup (OpEx) Total Cost - ₹1,00,00,000

- 6. Virtualization of Data Centre •Servers - x10 RAM – 64 GB HDD – 2 TB CPU – 32 Cores •Hypervisors – x1 on 1 server. Ex. – Oracle VirtualBox, VMWare Workstation etc. •VMs - x10 on 1 server. RAM - 4 GB Each HDD – 100 GB Each CPU – 2 Cores Each No interaction with other VMs. Total Cost - ₹10,00,000 Server Hypervisor VM VM VM Billing DHCP Firewall

- 7. Data Centre Traditional Setup ✖CapEx & OpEx for 100 Servers. ✖Single application on single hardware. ✖Single OS on single hardware. Virtualized Setup ✔CapEx and OpEx for 10 Servers only. (90% less cost with no issues) ✔Multiple applications on single hardware. ✔Multiple OS on single hardware.

- 8. Virtualization Concept • Creating a virtual machine over existing operating system and hardware is referred as Hardware Virtualization. • Virtual Machines provide an environment that is logically separated from the underlying hardware. • The machine on which the virtual machine is created is known as host machine and virtual machine is referred as a guest machine. • This virtual machine is managed by a software or firmware, which is known as hypervisor.

- 9. What are the advantages of virtualization? • It optimizes hardware resource utilization, saves energy and costs. • makes it possible to run multiple applications and various operating systems on the same SERVER at the same time. • It increases the utilization, efficiency and flexibility of existing computer hardware. • Provides ability to manage resources effectively. • Increases efficiency of IT operations. • Provides for easier backup and disaster recovery. • Increases cost savings with reduced hardware expenditure.

- 10. What are the disadvantages of virtualization? • Software licensing costs. • Necessity to train IT staff in virtualization.

- 11. Hypervisor •Section - 2 CLOUD COMPUTING

- 12. Hypervisor • The hypervisor is a firmware or low-level program that acts as a Virtual Machine Manager. • It sits between the hardware and the operating system, • assigns the amount of access that the applications and operating systems have with the processor and other hardware resources.

- 13. Types of Hypervisor • Hypervisor is also known as a virtualization manager (VMM). • There are two types of hypervisor: • Type 1 Hypervisor (firmware/bare metal Hypervisor) executes on bare system (does not have any host OS). • It has direct access of hardware. • Type 2 Hypervisor (hosted Hypervisor) is a software interface that emulates the devices with which a system normally interacts. •It accesses hardware through OS. •If OS fails, all VMs also fail (i.e. it has extra dependency).

- 14. Types of Hypervisor Criteria Type -1 Hypervisor Type-2 Hypervisor type Bare Metal & Native Hosted Virtualization Hardware Virtualization OS Virtualization Operation Guest OS and applications run on the hypervisor Runs as an application on the host OS. Scalability Better Scalability Not so much because of its resilience on the underlying OS. System Independence Has direct access to hardware along with Virtual Machines it hosts. Not allowed to directly access the host hardware and its resources. Performance Higher performance as there is no middle layer. Comparatively has reduced performance rate as it runs with extra overhead. Security More secure Less secure, as any problems in the base OS affect the entire system. Example VMWare ESXi, hyper-V, Xenserver, etc. VMWare Workstation.

- 15. LynxSecure, RTS Hypervisor, Oracle VM, Sun xVM Server, VirtualLogic VLX are examples of Type 1 Hypervisor KVM, Microsoft Hyper V, VMWare Fusion, Virtual Server 2005 R2, Windows Virtual PC and VMWare workstation 6.0 are examples of Type 2 Hypervisor. System Hardware Organizations Learning Testing

- 16. VMWare vSphere •Section - 3 CLOUD COMPUTING

- 17. vSphere (Formerly VMware Infrastructure) 6.5/6.7 • vSphere is an umbrella term for VMware's virtualization platform. • The term vSphere encompasses several distinct products and technologies that work together to provide a complete infrastructure for virtualization. • it consists of : • ESXi • vCenter Server • vSphere client • vSphere web client • Features like vMotion, HA, FT, DRS,

- 18. ESXi, vSphere client, vCentre server •ESXi • Bare Metal Hypervisor • Elastic Sky X Integrated • vSphere client • vSphere client is a GUI to connect remotely to an (a single) ESX or ESXi host from Windows PC. • This client can be used to access and manage virtual machines on the ESXi host and also perform other management and configuration task. • vCentre server • If we need all the ESX or ESXi host in a single console then we need vCentre server.

- 19. vSphere client Hardware ESXi VM VM VM vSphere client

- 20. vCentre server (vMotion, HA, FT, DRS) Hardware ESXi VM VM VM vCentre server Hardware ESXi VM VM VM Hardware ESXi VM VM VM

- 21. VM Migration •Section - 4 CLOUD COMPUTING

- 22. VM Migration and High Availability Level of agreement Downtime per Year 99% 87 hrs. 99.9% 8.76 hrs. 99.99% 52 minutes 99.999% 5 minutes • High Availability in VMware deals with ESXi host failure and what happens to the VMs running on those hosts. • Availability is to make sure that downtime is very less in the event of failure and machines are always up. • SLA & violation cost

- 23. VM Migration • Migration is the technique of a moving a Virtual Machine from one host to another host or from one datastore to another datastore. • Datastore stores Virtual Machine files, log files, Virtual disk and ISO images. • VMFS • NFS Types of Migration Cold Migration Suspended Migration vMotion Physical to Virtual (P2V) Virtual to Virtual (V2V)

- 24. Cold Migration • Movement of Virtual Machine to another host in power-off state. • VM must be powered-off during migration. • Cold migration is more flexible than vMotion. • Cold migrations can be used to move a virtual machine between datacenters, as long as both datacenters are on the same vCenter server instance. • Chances of failure are less in cold migration, in comparison to migration of VM in power- on state.

- 25. Suspended Migration • Migrating a VM in powered- on suspended state. • Suspended state is like paused state in which you resume from same point on later state. • Suspended and vMotion migration are considered hot because in both cases, the VM is running. • The primary reason to suspend a VM on an ESXi host is for troubleshooting.

- 26. vMotion (Live Migration) • Migrating a VM that is in powered-on state. This is very useful as this does not cause any downtime for the VM. • In VMWare vMotion, machine is migrated from one ESXi host to another in powered-on state, whereas in storage vMotion, machine migrates from one datastore to another datastore in powered-on state. • vMotion moves a running Virtual Machine to a different ESXi host in the same cluster. • It is also known as Live Migration.

- 27. Physical to Virtual (P2V) & Virtual to Virtual (V2V) VM Migration P2V Migration • Converts a physical computer into a virtual one. • Ex. – You have a webserver running on physical hardware. You can run VMWare vCenter Converter, target the webserver, and have a copy of the Physical Server created on an ESXi Host. V2V Migration • V2V migrations are exactly like P2V migrations except that the source machine is already a Virtual Machine. • Ex. – Migrating from hyper-V and VMWare Workstation to ESXi would be considered as a V2V Migration.

- 28. High Availability & Fault Tolerance •Section - 5 CLOUD COMPUTING

- 29. High Availability and Fault Tolerance High Availability (HA) In HA, when the host crashes or fails, the VM gets restarted on another host so there is a very small downtime which is only related to the time taken for VM to restart. Automatic Detection of Server Failure – HA is a completely automated process and does not need any admin interference as there is no time to recover machine if host is about to crash. No passive standby ESXi host requires any extra VM. The VM for which its parent host is crashing, it can restart on any of the other running hosts. HA does not use vMotion. Enable HA on the cluster settings in order to use HA.

- 30. VM Migration • Migration is the technique of a moving a Virtual Machine from one host to another host or from one datastore to another datastore. • Datastore stores Virtual Machine files, log files, Virtual disk and ISO images. • VMFS • NFS Resource Pool ESXi Server V M O S V M O S V M O S ESXi Server V M O S V M O S V M O S ESXi Server V M O S V M O S V M O S

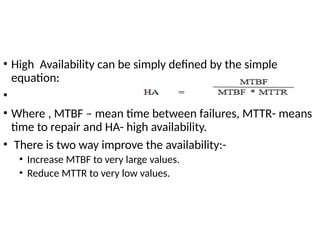

- 31. High Availability and Fault Tolerance • High Availability can be simply defined by the simple equation: • Where, MTBF – mean time between failures, MTTR- means time to repair and HA- high availability. • There are two ways to improve the availability:- • Increase MTBF to very large values. • Reduce MTTR to very low values. High Availability (HA)

- 32. High Availability and Fault Tolerance High Availability (HA) • Before HA was available, the failure of a single ESXi host meant that a large number of virtual machines that were running on it would be down. • This was referred to as ‘all of your eggs in one basket’ issue and caused some companies not to deploy virtual servers. • Resource Check Ensure that capacity is always available in order to restart all virtual machines affected by Server Failure. HA continuously monitors capacity utilization and reserves spare capacity to be able to restart VM.

- 33. High Availability and Fault Tolerance High Availability (HA) • HA works on the master and slave architecture. When you enable HA on the cluster, then election process occurs between all the hosts in the cluster. One host (which has large number of datastores mounted) has a chance to become a master server. Master server host decides that, in case of any failure, which host will take the load of existed VMs. Once the election process completes, there will be a master server and other ESXis will be considered as slave servers. If the master server goes down or crashes, then new election process will occur.

- 34. High Availability and Fault Tolerance Prerequisites for VMWare vSphere HA All hosts must be licensed for VMWare HA. You need at least two hosts in the cluster. All hosts need unique hostname. All hosts need to be configured with static IP Address. If you are using DHCP, you must ensure that the address for each host persists across reboots. VM must be located on shared, not local storage, otherwise they can’t be failed over in case of a host failure. All hosts in a VMWare cluster must have DNS configured.

- 35. High Availability and Fault Tolerance HA Failover Time • We measured the time from the point when vCenter Server VM stopped responding to the point when vSphere web client started responding to the user activity again. • With the 64 hosts / 6000 VMs, the total time is around 460 seconds (approximately 7 minutes) with about 30-40 seconds for HA to get into action. • HA works on the ESXi host level, where if any ESXi host gets failed, HA will restart those VMs onto another ESXi host. Web Client vCenter Server ESXi Host VMs

- 36. High Availability and Fault Tolerance Fault Tolerance (FT) • Aim of fault tolerance is similar to HA, but in terms of availability it provides 0% downtime and full availability as machine does not go down or restart. • This is meant for mission critical applications/servers. Ex. – Robotic surgery arm, Autopilot system, Spacecraft mission, etc. • VMWare Lockstep Technology is used in FT. • With FT, a secondary VM is created on another host using distributed resource scheduler. This VM is an exact replica of the primary VM. • A fault-tolerant VM and its second copy are not allowed to run on the same host. This restriction ensures that a host failure can’t result in the loss of both VMs. • Primary and Secondary work in lockstep i.e. the lockstep technology captures the current state and the events of primary VM and sends them to secondary VM. If primary goes down, instantly secondary VM takes over and continue operation. • It requires extra standby VM, therefore it is a costlier solution.

- 38. High Availability and Fault Tolerance Fault Tolerance (FT) • Fault Tolerance avoids ‘Split Brain’ Situation which can lead to two active copies of a VM after recovery from a failure. • FT works on VM level, therefore you can enable or disable FT on VM. • The primary and secondary VM continuously exchange heartbeat. This exchange allows the VM pair to monitor the status of one another to ensure that FT is continuously monitored.

- 39. High Availability and Fault Tolerance Virtual Machine Templates You can convert a fully configured VM into a VM template. This can be used to rapidly deploy large number of new Virtual Machines that are configured like the original VM.

- 40. Distributed Resource Scheduler •Section - 6 CLOUD COMPUTING

- 41. Distributed Resource Scheduler (DRS) • DRS is a feature of cluster which is managed by vCenter Server. It balances load of a VM across ESXi host. • A DRS enabled cluster has the following resource management capabilities: Initial VM Placement Load Balancing Power Management

- 42. Distributed Resource Scheduler (DRS) • Depending on how end users are using applications on VMs, VMs constantly expand & contract throughout the day, week or month. • The physical host becomes over- utilized or under-utilized based on VM utilization and number of VMs running over it. • vMotion is primary requirement of DRS.

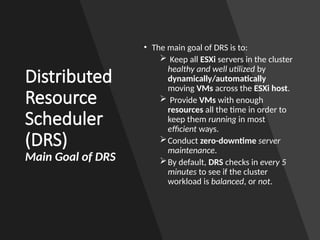

- 43. Distributed Resource Scheduler (DRS) Main Goal of DRS • The main goal of DRS is to: Keep all ESXi servers in the cluster healthy and well utilized by dynamically/automatically moving VMs across the ESXi host. Provide VMs with enough resources all the time in order to keep them running in most efficient ways. Conduct zero-downtime server maintenance. By default, DRS checks in every 5 minutes to see if the cluster workload is balanced, or not.

- 45. Distributed Resource Scheduler (DRS) Automation Level of DRS • There are three selections regarding the automation level of the DRS cluster: Manual Partially Automated Fully Automated

- 46. Distributed Resource Scheduler (DRS) No Automation (Manual) When a DRS cluster is set to manual, every time you power on a VM, the cluster prompts you to select the ESXi host where that VM should be hosted.

- 47. Distributed Resource Scheduler (DRS) Partial Automation • DRS makes automatic decisions about which host a VM should run on when it is initially powered-on • It does not prompt the user that who is performing the power-on task. • It will still prompt for all migrations on the DRS Lab. • Thus, initial VM placement is automated, but migrations are still manual.

- 48. Distributed Resource Scheduler (DRS) Full Automation • The third setting for DRS is ‘Fully Automated’. • This setting makes decision for initial placement without prompting and also makes automatic vMotion decisions based on the selected automation level.

- 51. Hardware/Server Virtualization • The basic idea is to combine many small physical servers into one large physical server, so that the processor can be used more effectively. • The operating system that is running on a physical server gets converted into a well-defined OS that runs on the virtual machine. • The hypervisor controls the processor, memory, and other components by allowing different OS to run on the same machine without the need for a source code.

- 52. Types of Hardware Virtualization • Here are the three types of hardware virtualization: • Full Virtualization • Emulation Virtualization • Paravirtualization : work in a distributed environment

- 53. Full Virtualization • The underlying hardware is completely simulated. • Guest software does not require any modification to run.

- 54. Emulation Virtualization • In Emulation, the virtual machine simulates the hardware and hence becomes independent of it. • In this, the guest operating system does not require modification.

- 55. Paravirtualization • In Paravirtualization, the hardware is not simulated. • The guest software run their own isolated domains.

- 56. Network Virtualization • It refers to the management and monitoring of a computer network as a single managerial entity from a single software-based administrator’s console. • It is intended to allow network optimization of data transfer rates, scalability, reliability, flexibility, and security. • It also automates many network administrative tasks. • Network virtualization is specifically useful for networks that experience a huge, rapid, and unpredictable traffic increase. • Network virtualization provides improved network productivity and efficiency.

- 57. Two categories: Network Virtualization • Internal: Provide network like functionality to a single system. • External: Combine many networks, or parts of networks into a virtual unit.

- 58. Storage Virtualization • Multiple network storage resources are present as a single storage device for easier and more efficient management of these resources. • Advantages • Improved storage management in a heterogeneous IT environment • Easy updates, better availability • Reduced downtime • Better storage utilization • Automated management

- 59. two types of storage virtualization: • Block- It works before the file system exists. It replaces controllers and takes over at the disk level. • File- The server that uses the storage must have software installed on it in order to enable file-level usage.

- 60. Memory Virtualization • It introduces a way to decouple memory from the server to provide a shared, distributed or networked function. • It enhances performance by providing greater memory capacity without any addition to the main memory. • That’s why a portion of the disk drive serves as an extension of the main memory.

- 61. Implementations – • Application-level integration – Applications running on connected computers directly connect to the memory pool through an API or the file system.

- 62. • Operating System Level Integration – The operating system first connects to the memory pool, and makes that pooled memory available to applications

- 63. Software Virtualization • It provides the ability to the main computer to run and create one or more virtual environments. • It is used to enable a complete computer system in order to allow a guest OS to run. • For instance letting Linux to run as a guest that is natively running a Microsoft Windows OS (or vice versa, running Windows as a guest on Linux).

- 64. Software Virtualization • Types: • Operating system • Application virtualization • Service virtualization

- 65. Data Virtualization • Without any technical details, you can easily manipulate data and know how it is formatted or where it is physically located. • It decreases the data errors and workload.

- 66. Desktop virtualization • It provides the work convenience and security. As one can access remotely, you are able to work from any location and on any PC. • It provides a lot of flexibility for employees to work from home or on the go. • It also protects confidential data from being lost or stolen by keeping it safe on central servers.

- 69. Cloud Resiliency • Cloud resiliency is the ability of a data center and its components -- servers, storage, etc. -- to continue operating in the wake of some kind of disruption, whether it's a breakdown of equipment, a power outage or even a natural disaster • By its nature, cloud computing removes single points of failure, thus provides a highly resilient computing environment.. • The failure of one node of the system has no impact on information availability and does not result in perceivable downtime. • The one major weak point is the network itself. If this fails, then cloud computing fails. Solution:-topology, redundency.

- 70. Resiliency capabilities- • The strategy combines multiple parts to mitigate risks and improve business resilience. • From a facilities perspective, we may want to implement power protection. • From a security perspective, to protect our data and applications we may want to implement remote backup, identity management, email filtering, or email archiving. • From a process perspective, we may implement identification and documentation of most critical business processes. • From a organizational perspective, we may want to implement a virtual workstation environment. • From a strategy and vision perspective, we may want to look at the kind of crisis management process. • Resiliency tiers is a common set of infra. services that are delivered to provide a corresponding set of business availability expectations.

- 71. CLOUD PROVISIONING • The cloud provisioning is the allocation of a cloud provider’s resources to a customer.” • When a cloud provider accepts a request from a customer, it must create the appropriate number of virtual machines (VMs) and allocate resources to support them. • The process is conducted in several different ways: advance provisioning, dynamic provisioning and user self- provisioning. The term provisioning simply means “to provide.” •

- 72. CLOUD PROVISIONING (cont...) • Cloud provisioning primarily defines how, what and when an organization will provision cloud services. These services can be internal, public or hybrid cloud products and solutions. • Cloud providers deliver cloud solutions through on-demand, pay-as-you-go systems as a service to customers and end users. • Cloud provider customers access cloud resources through Internet and programmatic access and are only billed for resources and services used according to a subscribed billing method.

- 73. • Infrastructure as a Service(IaaS): May include virtual servers, virtual storage and virtual desktops/computers. • Software as a Service(SaaS): Delivery of simple to complex software through the Internet. • Platform as a Service(PaaS): A combination of IaaS and SaaS delivered as a unified service.

- 75. HIGH AVAILABILITY AND DISASTER RECOVERY

- 76. HIGH AVAILABILITY • In simple words we can say that high availability refers to the availability of resources in a computer system. • In terms of cloud computing it refers to the availability of cloud services. • It provides the idea of anywhere, anytime access to service of cloud environment. • Availability is also related to reliability. • Availability is a technology issue as well as business issue.

- 77. • High Availability can be simply defined by the simple equation: • • Where , MTBF – mean time between failures, MTTR- means time to repair and HA- high availability. • There is two way improve the availability:- • Increase MTBF to very large values. • Reduce MTTR to very low values.

- 78. DISASTER RECOVERY: • Disaster recovery (DR) is the process, policies and procedures that are related to preparing for recovery or continuation of technology infrastructure which are an organization after a natural or human-induced disaster. • A disaster recovery is the process by which an organization can recover and access their software, data, and hardware. • It is necessary for faster disasters recovery to have an infrastructure supporting high availability. • The failure of disaster recovery plan mainly due to lack of high availability preparation, planning and maintenance to occurrence of the disaster.

- 79. Strategies of Disaster Recovery • RTO (Recovery Time Objective): • RTO is the period of time within which system, application, or functions must be discovered after an outage. • RPO (Recovery point objective): • RPO is the point to time to which systems and data must be recovered after an outage.

- 80. CLOUD GOVERNANCE • Cloud governance is a general term for applying specific policies or principles to the use of cloud computing services • cloud governance refers to the decision making processes, criteria and policies involved in the planning, architecture, acquisition, deployment, operation and management of a cloud computing capability.

- 81. • The governance is applied in cloud for: • Setting company policies in cloud computing. • Risk based decision which cloud provider, if any, to engage. • Assigning responsibilities for enforcing and monitoring of the policy compliance. • Set corrective action for non-compliance.

- 82. Cloud asset management (CAM) • It is a component of cloud management services focused exclusively on the management of a business's physical cloud environment, such as the products or services they use . • Cloud Asset Management or CAM is the management of an organization's Cloud usage, whether SaaS, PaaS or IaaS. • For instance: • poor visibility of organizational uptake • inability to build a centralized view of Cloud coverage • limited access to subscription data • limited access to actual usage data

- 83. Benefits of Cloud Asset Management (CAM) • Accurate discovery, inventory and consumption tracking of applications delivered in the Cloud • Overcome the limitations of Cloud portals, by providing access to a single centralized view • Expanded access to data and improved analysis and reporting • Granular insight into IaaS, PaaS and SaaS usage across your organization • Combine Cloud and on-premise deployment data for a complete end- to-end view of your IT ecosystem • Accurate, complete view of investments and their usage across the whole IT estate enables better cost control • Access all the information needed to ensure a successful migration to the Cloud

- 84. MARKET BASED MANAGEMENT OF CLOUD • Market oriented cloud computing is the presence of a virtual market place where IT Service are traded dynamically. • MOCC originated from the coordination of several components service consumers, service providers, and other entities that make trading between these two groups possible.

- 86. • There are three major components of cloud exchange are:- • Directory:-the market directory contains a listing of all the published services that are available in the cloud marketplace. • Auctioneer:-the auctioneer is in charge of keeping track of the running auctions in the market place and of verifying that the auctions for services are properly conducted and that malicious market players are prevented from performing illegal activities. • Bank:-the bank is the component that takes care of the financial aspect of all the operations happening in the virtual market place.

- 88. • The major components of architecture are:- • Brokers:-they submit their service requests from anywhere in the world to the cloud. • SLA resource allocator:-it is a kind of interface between users and cloud service provider which enable the SLA oriented resource management. • Service request examiner and admission control:-it interprets the submitted request for QoS requirement before determining whether to accept or reject the request. Based on the resource availability in the loud and other parameter decide. • Pricing:-it is in charge of billing based on the resource utilization and some factors. Some factor are request time, type etc.

- 89. • Accounting:-maintains the actual storage usage of resources by request so that the final coat can be charged to the users. • VM monitor:-keeps the track on the availability of VMs and their resources. • Dispatcher:-the dispatcher mechanism starts the execution of admitted requests on allocated VMs. • Cloud request monitor:-it keeps the track on execution of request in order to be in tune with SLA.

- 90. CLOUD FEDERATION STACK • Cloud federation requires one provider to wholesale or rent computing resources to another cloud provider. • Those resources become a temporary or permanent extension of the buyer's cloud computing environment, depending on the specific federation agreement between providers. • A federated cloud (also called cloud federation) is the deployment and management of multiple external and internal cloud computing services to match business needs. • A federation is the union of several smaller parts that perform a common action.

- 91. • Cloud federation offers two substantial benefits to cloud providers. • it allows providers to earn revenue from computing resources that would otherwise be idle or underutilized. • cloud federation enables cloud providers to expand their geographic footprints and accommodate sudden spikes in demand without having to build new points-of- presence (POPs).

- 93. Inter-Cloud • It is an interconnected global "cloud of clouds" and an extension of the Internet "network of networks" on which it is based. • Inter-Cloud computing is interconnecting multiple cloud providers' infrastructures. • The main focus is on direct interoperability between public cloud service providers.