What are Hadoop Components? Hadoop Ecosystem and Architecture | Edureka

- 1. Copyright © 2017, edureka and/or its affiliates. All rights reserved.

- 3. WHAT IS HADOOP? · HADOOP CORE COMPONENTS · HADOOP ARCHITECTURE · MAJOR HADOOP COMPONENTS. www.edureka.co

- 5. WHAT IS HADOOP? Hadoop is an open-source distributed processing framework that manages data processing and storage for big data applications running in clustered systems.

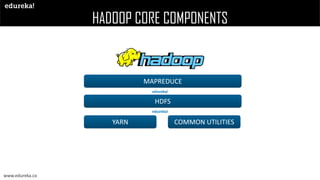

- 7. HADOOP CORE COMPONENTS: HDFS, YARN, MapReduce, Common Utilities

- 8. HADOOP CORE COMPONENTS (master/slave layout)
  - HDFS: NameNode and Secondary NameNode (master), DataNode (slave).
  - YARN: ResourceManager (master), NodeManager (slave).

- 10. HADOOP ARCHITECTURE: NameNode checkpointing. The Secondary NameNode takes the NameNode's FS-image and Edit Log and merges them into a final FS-image, while the NameNode records new changes in a new Edit Log.

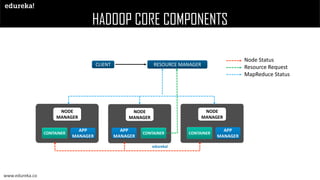
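The checkpoint cycle on slide 10 can be sketched as a toy simulation. This is a minimal sketch, assuming the namespace is a plain dictionary and the Edit Log is a list of operations; the names `apply_edits` and `checkpoint` and the sample paths are hypothetical and are not part of Hadoop.

```python
# Toy model of the FS-image / Edit Log checkpoint cycle; not real Hadoop code.

def apply_edits(fs_image, edit_log):
    """Replay edit-log entries onto a copy of the namespace snapshot."""
    merged = dict(fs_image)
    for op, path, value in edit_log:
        if op == "create":
            merged[path] = value
        elif op == "delete":
            merged.pop(path, None)
    return merged


def checkpoint(namenode):
    """Simulate one checkpoint performed by the Secondary NameNode."""
    # 1. The NameNode starts a new Edit Log for incoming changes.
    old_log = namenode["edit_log"]
    namenode["edit_log"] = []
    # 2. The old FS-image and old Edit Log are merged into a final FS-image.
    final_image = apply_edits(namenode["fs_image"], old_log)
    # 3. The final FS-image replaces the NameNode's previous one.
    namenode["fs_image"] = final_image
    return final_image


namenode = {
    "fs_image": {"/data/a.txt": 3},  # hypothetical: path -> block count
    "edit_log": [("create", "/data/b.txt", 1), ("delete", "/data/a.txt", None)],
}
print(checkpoint(namenode))
```

After the call, the Edit Log is empty and the FS-image already reflects every logged change, which is why a restart after a checkpoint has less log to replay.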

- 11. HADOOP CORE COMPONENTS (YARN)
  - The Client submits jobs to the Resource Manager.
  - Each Node Manager hosts an App Manager (the per-application ApplicationMaster in YARN terminology) and Containers.
  - Messages shown: Node Status, Resource Request, MapReduce Status.
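The Resource Request flow on slide 11 can be illustrated with a small allocation loop. This is only a sketch under stated assumptions: the "most free memory first" policy, the node names, and the `allocate` function are hypothetical, and real YARN schedulers are considerably more involved.

```python
# Toy container allocation; the placement policy here is an assumption.

def allocate(node_free_mb, request_mb):
    """Place one container on the node with the most free memory.

    Returns the chosen node name, or None if no node can fit the request.
    """
    node = max(node_free_mb, key=node_free_mb.get)
    if node_free_mb[node] < request_mb:
        return None
    node_free_mb[node] -= request_mb
    return node


# Free memory per Node Manager, as reported via node status (hypothetical values).
free = {"nm1": 4096, "nm2": 2048, "nm3": 3072}
placements = [allocate(free, mb) for mb in (2048, 2048, 2048, 4096)]
print(placements)
```

The last request is rejected because no single node has 4096 MB left, which mirrors how a request waits until a Node Manager reports enough free capacity.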

- 13. HADOOP ECOSYSTEM (diagram): Storage (HDFS) · Resource Management (YARN) · Storage Managers · General Purpose Execution Engines · Database Management Engines · Data Abstraction Engines · Realtime Data Streaming Frameworks · Graph Processing Frameworks · Machine Learning Engines · Hadoop Cluster Management Software

- 14. HADOOP STORAGE MANAGERS

- 15. MAJOR HADOOP COMPONENTS: HDFS
  - Hadoop Distributed File System.
  - Primary data storage unit in Hadoop.
  - Used in distributed data processing environments.

- 16. HCATALOG
  - Hadoop storage management layer.
  - Exposes the tabular data of the Hive metastore to other applications such as Pig and MapReduce.

- 17. ZOOKEEPER
  - Centralized open-source server.
  - Provides a distributed configuration service, synchronization service, and naming registry for large distributed systems.

- 18. OOZIE
  - Server-based workflow scheduling system.
  - Schedules Apache Hadoop jobs.
  - Workflows are managed as Directed Acyclic Graphs (DAGs) of actions.
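To make the DAG idea on slide 18 concrete, the sketch below orders a workflow so that every action runs only after the actions it depends on. It uses Python's standard `graphlib` module rather than Oozie itself; the action names are hypothetical, and real Oozie workflows are defined in XML, not Python.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: action -> set of actions that must finish first.
workflow = {
    "ingest": set(),
    "clean": {"ingest"},
    "aggregate": {"clean"},
    "stats": {"clean"},
    "report": {"aggregate", "stats"},
}

# static_order() yields one valid execution order and raises
# graphlib.CycleError if the graph is not acyclic.
order = list(TopologicalSorter(workflow).static_order())
print(order)
```

Because `aggregate` and `stats` do not depend on each other, either may come first; a scheduler is free to run such independent actions in parallel.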

- 19. GENERAL PURPOSE EXECUTION ENGINES

- 20. MAPREDUCE
  - Software framework for distributed processing.
  - Splits data into chunks so that map, filter, and other operations can run on them in parallel.
  - Its programming model borrows the map and reduce operations from functional programming.
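The map and reduce steps on slide 20 can be sketched in a single process with a word count. This is a minimal illustration of the programming model, assuming two in-memory strings stand in for data chunks; the function names are hypothetical, and a real Hadoop job would distribute these phases across the cluster.

```python
from collections import defaultdict


def map_phase(chunk):
    """Emit a (word, 1) pair for every word in one chunk of input."""
    return [(word.lower(), 1) for word in chunk.split()]


def shuffle(pairs):
    """Group all emitted values by key, as happens between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Combine all values for one key into a single result."""
    return key, sum(values)


chunks = ["big data on Hadoop", "Hadoop stores big data"]
mapped = [pair for chunk in chunks for pair in map_phase(chunk)]
counts = dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())
print(counts)
```

Each chunk is mapped independently, which is what lets the framework process chunks in parallel on different nodes.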

- 21. SPARK
  - General-purpose cluster computing framework.
  - Can perform real-time data streaming and ETL.
  - Used for micro-batch processing.

- 22. TEZ
  - High-performance data processing tool.
  - Executes a series of MapReduce jobs as a single job.
  - Used in batch processing environments.

- 23. HADOOP DATABASE MANAGEMENT ENGINES

- 24. HIVE
  - Data warehouse software project.
  - Enables SQL-like queries over data stored in Hadoop.
  - Used for ETL, with Hive DDL and DML statements.

- 25. SPARK SQL
  - Distributed SQL query engine.
  - Enables structured data processing.
  - Used to import data from RDDs, Hive, Parquet files, and other sources.

- 26. IMPALA
  - In-memory processing query engine.
  - Integrates with the Hive metastore to share table information between the components.
  - Used to process data in Hadoop clusters.

- 27. APACHE DRILL
  - Low-latency distributed query engine.
  - Can combine data from a variety of data stores in a single query.
  - Supports different kinds of NoSQL databases.

- 28. HBASE
  - Open-source, non-relational distributed database.
  - Modeled after Google's Bigtable; runs on top of HDFS and provides random, real-time read/write access to data.

- 29. HADOOP DATA ABSTRACTION ENGINES

- 30. APACHE PIG
  - High-level scripting language (Pig Latin).
  - Enables users to write complex data transformations.
  - Performs ETL and analyses huge datasets.

- 31. APACHE SQOOP
  - Command-line interface application for transferring data between relational databases and Hadoop.
  - Data ingestion tool.
  - Enables import and export of structured data at enterprise scale.

- 32. HADOOP REAL-TIME STREAMING FRAMEWORKS

- 33. SPARK STREAMING
  - Spark Streaming is an extension of the core Spark API.
  - Enables scalable, high-throughput, fault-tolerant stream processing of live data streams.
  - Provides a high-level abstraction called a discretized stream (DStream) for continuous data streaming.
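The discretized stream idea on slide 33 can be sketched without Spark: a continuous stream is cut into small batches by time interval, and each batch is then processed like ordinary batch data. The function `discretize`, the event tuples, and the one-second interval below are assumptions for illustration and are not Spark's API.

```python
from collections import defaultdict


def discretize(events, batch_interval):
    """Group (timestamp, value) events into consecutive micro-batches.

    Intervals that received no events are skipped in this simplified sketch.
    """
    batches = defaultdict(list)
    for timestamp, value in events:
        batches[int(timestamp // batch_interval)].append(value)
    return [batches[i] for i in sorted(batches)]


# Hypothetical stream: (seconds since start, payload)
events = [(0.2, "a"), (0.9, "b"), (1.1, "a"), (2.5, "c"), (2.7, "a")]
micro_batches = discretize(events, batch_interval=1.0)
for index, batch in enumerate(micro_batches):
    print(index, batch, len(batch))
```

Choosing the batch interval is the main trade-off: shorter intervals reduce latency, while longer ones amortize per-batch overhead.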

- 34. APACHE KAFKA
  - Open-source stream-processing software.
  - Ingests and moves large amounts of data very quickly.
  - Lets applications publish and subscribe to streams of records.
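The publish and subscribe model on slide 34 can be sketched with an in-memory log. This is a toy illustration, assuming a single topic held in a Python list where each subscriber tracks its own read position; the `Topic` class and its methods are hypothetical and are not the Kafka client API.

```python
class Topic:
    """Append-only log of records; each subscriber keeps its own offset."""

    def __init__(self):
        self.log = []
        self.offsets = {}

    def publish(self, record):
        """Append a record to the end of the log."""
        self.log.append(record)

    def subscribe(self, consumer):
        """Register a consumer, starting from the beginning of the log."""
        self.offsets.setdefault(consumer, 0)

    def poll(self, consumer):
        """Return the records this consumer has not seen yet."""
        start = self.offsets[consumer]
        self.offsets[consumer] = len(self.log)
        return self.log[start:]


topic = Topic()
topic.subscribe("billing")
topic.publish({"order": 1})
topic.publish({"order": 2})
first = topic.poll("billing")
topic.subscribe("audit")
topic.publish({"order": 3})
second = topic.poll("billing")
audit = topic.poll("audit")
print(first, second, audit)
```

Because records stay in the log after being read, the late subscriber `audit` still receives all three records, while `billing` only receives what is new since its last poll.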

- 35. APACHE FLUME
  - Open-source, distributed, and reliable software.
  - Architecture is based on streaming data flows.
  - Used for collecting, aggregating, and moving large amounts of log data.

- 36. HADOOP GRAPH PROCESSING FRAMEWORK

- 37. APACHE GIRAPH
  - Iterative graph processing framework.
  - Utilizes Apache Hadoop's MapReduce implementation to process graphs.
  - Used to analyse social media data.
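Iterative graph processing, as on slide 37, is commonly expressed as repeated rounds in which every vertex reads its neighbours' values and updates its own. The sketch below finds connected components that way in plain Python; it illustrates the iterative style only, and the function name and edge list are hypothetical rather than Giraph's API.

```python
def connected_components(edges):
    """Label every vertex with the smallest vertex id in its component."""
    neighbours = {}
    for u, v in edges:
        neighbours.setdefault(u, set()).add(v)
        neighbours.setdefault(v, set()).add(u)

    label = {vertex: vertex for vertex in neighbours}
    changed = True
    while changed:  # one loop pass corresponds to one iteration round
        changed = False
        # Every vertex receives the labels its neighbours held last round.
        incoming = {v: [label[n] for n in neighbours[v]] for v in neighbours}
        for vertex, messages in incoming.items():
            best = min(messages + [label[vertex]])
            if best < label[vertex]:
                label[vertex] = best
                changed = True
    return label


components = connected_components([(1, 2), (2, 3), (4, 5)])
print(components)
```

The loop stops when a full round changes no label, which is the usual termination condition for this kind of iterative computation.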

- 38. APACHE GRAPHX
  - GraphX is Apache Spark's API for graphs and graph-parallel computation.
  - Offers performance comparable to the fastest specialized graph processing systems.
  - Works seamlessly with both graphs and collections.
  - Provides a growing library of graph algorithms.

- 39. HADOOP MACHINE LEARNING FRAMEWORKS

- 40. H2O
  - H2O is open-source software for big-data analysis.
  - H2O allows users to fit thousands of potential models as part of discovering patterns in data.
  - H2O uses iterative methods that provide quick answers using all of the client's data.

- 41. ORYX
  - A generic lambda architecture tier, providing batch, speed, and serving layers.
  - Designed with specialization for real-time, large-scale machine learning.
  - End-to-end implementation of the standard ML algorithms as applications.

- 42. SPARK MLlib
  - Spark MLlib is a scalable machine learning library.
  - It enables machine learning operations in Spark.

- 43. AVRO
  - Avro is a row-oriented remote procedure call and data serialization framework.
  - Supports dynamic typing and schema evolution.
  - Used for data serialization and RPC.
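Schema evolution, mentioned on slide 43, means data written with an older schema can still be read with a newer one. The sketch below imitates the basic rule in plain Python: fields the reader does not know are dropped, and fields missing from the data take the reader's default. The `resolve` function, the `_REQUIRED` marker, and the sample record are hypothetical; this is not the Avro library.

```python
_REQUIRED = object()  # marker meaning "this field has no default"


def resolve(record, reader_schema):
    """Project a written record onto the reader's schema.

    reader_schema maps field name -> default value, or _REQUIRED.
    """
    resolved = {}
    for name, default in reader_schema.items():
        if name in record:
            resolved[name] = record[name]
        elif default is not _REQUIRED:
            resolved[name] = default  # field added after the data was written
        else:
            raise ValueError(f"missing required field: {name}")
    return resolved


# Record written with an older schema (hypothetical fields).
old_record = {"id": 7, "name": "sensor-a", "legacy_flag": True}
# Newer reader schema: drops legacy_flag, adds unit with a default.
reader_schema_v2 = {"id": _REQUIRED, "name": _REQUIRED, "unit": "celsius"}
evolved = resolve(old_record, reader_schema_v2)
print(evolved)
```

Giving every newly added field a default is what keeps old data readable after the schema changes.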

- 44. THRIFT
  - An interface definition language and binary communication protocol.
  - Allows users to define data types and service interfaces in a simple definition file.
  - Used in building RPC clients and servers.

- 45. MAHOUT
  - Implementations of distributed machine learning algorithms.
  - Runs on Hadoop, which stores and processes big data in a distributed environment across clusters of computers using simple programming models.

- 46. HADOOP CLUSTER MANAGEMENT SOFTWARE

- 47. AMBARI
  - Hadoop cluster management software.
  - Ambari enables system administrators to provision, manage, and monitor a Hadoop cluster.

- 48. ZOOKEEPER
  - Centralized open-source server.
  - Manages configuration across nodes.
  - Implements reliable messaging.
  - Implements redundant services.
  - Synchronizes process execution.
