Why is dev ops for machine learning so different

- 1. Why is DevOps for Machine Learning so Different? London DevOps Oct ‘19 Ryan Dawson

- 2. Outline - Data Science vs Programming - A Traditional Programming E2E Workflow - Intro to ML E2E Workflows - Detailed ML DevOps Topics - Training - Serving - Monitoring - Advanced ML DevOps Challenges - Review

- 3. DevOps Background DevOps roles centred on CI/CD and infra Established tools Key enabler for projects - time to value & governance

- 4. MLOps Background 87% of ML projects never go live ML-related infrastructure is complex Rise of ‘MLOps’

- 5. Why So Different? Running software performs actions in response to inputs. Traditional programming codifies actions as explicit rules ML does not codify explicitly. Instead rules are indirectly set by capturing patterns from data. Different problem domains - ML more applicable to focused numerical problems.

- 6. Examples Traditional Programming ● Old terminal systems through to games ● Start with hello-world add control structures Data Science ● Classification problems, regression problems ● Start with mnist or kaggle

- 7. ML Problem Examples Regression: - Predict salary from experience, education, location, etc. - Predict sales from advertising spend, type of adverts, placement, etc. Classification: - Hand-writing samples for numbers - which number is it? - Image classification - cat or not cat?

- 8. Data Playgrounds/Exploration Data science is exploratory Interactive notebooks - great for exploration and visualization ML code shared through notebooks - model can be an artifact

- 10. Gradient Descent Compute error against training data Adjust weights and recompute

- 11. Key Points on ML Training data and code together drive fitting Closest thing to executable is a trained/weighted model (can vary with toolkit) Retraining can be necessary (e.g. online shop and fashion trends) Lots of data, long-running jobs

- 12. Traditional Programming Workflow 1. User Story 2. Write code 3. Submit PR 4. Tests run automatically 5. Review and merge 6. New version builds 7. Built executable deployed to environment 8. Further tests 9. Promote to next environment 10. More tests etc. 11. PROD 12. Monitor - stacktraces or error codes Docker as packaging Driver is a code change (git)

- 13. ML Workflows - Primer Driver might be a code change. Or new data. Data not in git. More experimental - data-driven and you’ve only a sample of data. Testing for quantifiable performance, not pass/fail. Let’s focus on offline learning to simplify.

- 14. ML E2E Workflow Intro 1. Data inputs and outputs. Preprocessed. Large. 2. Try stuff locally with a slice. 3. Try with more data as long-running experiments. 4. Collaboration - often in jupyter & git 5. Model may be pickled 6. Integrate into a running app e.g. add REST API (serving) 7. Integration test with app. 8. Monitor performance metrics

- 15. Metrics Example Online store example A/B test B leads to more conversions But… More negative reviews? Bounce-rate? Interaction-level? Latency?

- 16. What Can Happen

- 17. Role of MLOps Empower teams and break down silos Provide ways to collaborate/self-serve

- 18. New Territory Special challenges for ML. No clear standards yet. We’ll drill into: 1. Training - slice of data, train a weighted model to make predictions on unseen data. 2. Serving - call with HTTP. 3. Rollout and Monitoring - making sure it performs.

- 19. 1 Training/Experimentation For long-running, intensive training jobs there’s kubeflow pipelines, polyaxon, mlflow… Broken into steps incl. cleaning and transformation (pre-processing).

- 20. Model Training Each step can be long-running

- 21. Kubeflow - an ML platform

- 24. Training and CI Some training platforms have CI integration. Result of a run could be a model. So analogous to a CI build of an executable. But how to say that the new version is ‘good’?

- 25. 2 Serving Serving = use model via HTTP. Offline/batch is different. Some platforms have serving or there’s dedicated solutions. Seldon, Tensorflow Serving, AzureML, SageMaker Often package the model and host (bucket) so the serving solution can run it. Serving can support rollout & monitoring.

- 26. Comparison: k8s hello world apiVersion: apps/v1 kind: Deployment metadata: name: hello-world spec: selector: matchLabels: run: load-balancer-example replicas: 2 template: metadata: labels: run: load-balancer-example spec: containers: - name: hello-world image: gcr.io/google-samples/node-hello:1.0 ports: - containerPort: 8080 protocol: TCP K8s Dep using docker Hand-craft Service spec

- 27. Seldon ML Serving apiVersion: machinelearning.seldon.io/v1alpha2 kind: SeldonDeployment metadata: name: sklearn spec: name: iris predictors: - graph: children: [] implementation: SKLEARN_SERVER modelUri: gs://seldon-models/sklearn/iris name: classifier name: default replicas: 1 K8s custom resource Pods created to serve http Docker option too Data scientists like pickles

- 28. 3 Rollout and Monitoring ML model trained on a sample - need to check and keep checking against new data coming in. Rollout strategies: Canary = % of traffic to new version as check A/B Test = % split between versions for longer to monitor performance Shadowing = All traffic to old and new model. Only the live model’s responses are used

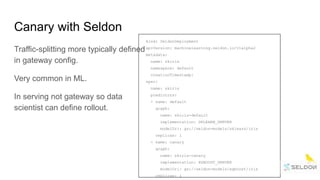

- 29. Canary with Seldon kind: SeldonDeployment apiVersion: machinelearning.seldon.io/v1alpha2 metadata: name: skiris namespace: default creationTimestamp: spec: name: skiris predictors: - name: default graph: name: skiris-default implementation: SKLEARN_SERVER modelUri: gs://seldon-models/sklearn/iris replicas: 1 - name: canary graph: name: skiris-canary implementation: XGBOOST_SERVER modelUri: gs://seldon-models/xgboost/iris replicas: 1 Traffic-splitting more typically defined in gateway config. Very common in ML. In serving not gateway so data scientist can define rollout.

- 30. A/B Test with Seldon apiVersion: machinelearning.seldon.io/v1alpha2 kind: SeldonDeployment metadata: name: mlflow-deployment spec: name: mlflow-deployment predictors: - graph: children: [] implementation: MLFLOW_SERVER modelUri: gs://seldon-models/mlflow/elasticnet_wine name: wines-classifier name: a-mlflow-deployment-dag replicas: 1 traffic: 20 - graph: children: [] implementation: MLFLOW_SERVER modelUri: gs://seldon-models/mlflow/elasticnet_wine name: wines-classifier name: b-mlflow-deployment-dag replicas: 1 traffic: 80

- 31. Seldon Metrics Out of the box basic metrics (because so commonly needed)

- 32. Seldon Request Logging Human review of predictions can be needed

- 33. Seldon UI Rollout, serving and monitoring

- 34. Advanced Topics - Serving ● Real-time inference graphs with pre-processing ● Advanced routing - multi-armed bandits. ● Outlier detection ● Concept drift

- 35. Advanced Topics - Governance ● Explainability - why did it predict that? ○ Some orgs sticking to whitebox techniques - not neural nets ○ Blackbox is possible ● Reproducibility - tracking and metadata (associating models to training runs to data to triggers) ○ Data versioning adds complexity ○ Competing tools for metadata ○ No agreed standards yet ● Bias & ethics ● Adversarial attacks

- 36. Summary MLOps is new terrain. ML is data-driven. MLOps enables with: ● Data and compute-intensive experiments and training ● Artifact tracking ● Monitoring tools ● Rollout strategies to work with monitoring

Editor's Notes

- #29: Risk that data coming in will diverge significantly from the sample taken for training. This is concept drift.

![Seldon ML Serving apiVersion: machinelearning.seldon.io/v1alpha2

kind: SeldonDeployment

metadata:

name: sklearn

spec:

name: iris

predictors:

- graph:

children: []

implementation: SKLEARN_SERVER

modelUri: gs://seldon-models/sklearn/iris

name: classifier

name: default

replicas: 1

K8s custom resource

Pods created to serve http

Docker option too

Data scientists like pickles](https://image.slidesharecdn.com/whyisdevopsformachinelearningsodifferent-191009154353/85/Why-is-dev-ops-for-machine-learning-so-different-27-320.jpg)

![A/B Test with Seldon

apiVersion: machinelearning.seldon.io/v1alpha2

kind: SeldonDeployment

metadata:

name: mlflow-deployment

spec:

name: mlflow-deployment

predictors:

- graph:

children: []

implementation: MLFLOW_SERVER

modelUri: gs://seldon-models/mlflow/elasticnet_wine

name: wines-classifier

name: a-mlflow-deployment-dag

replicas: 1

traffic: 20

- graph:

children: []

implementation: MLFLOW_SERVER

modelUri: gs://seldon-models/mlflow/elasticnet_wine

name: wines-classifier

name: b-mlflow-deployment-dag

replicas: 1

traffic: 80](https://image.slidesharecdn.com/whyisdevopsformachinelearningsodifferent-191009154353/85/Why-is-dev-ops-for-machine-learning-so-different-30-320.jpg)